The Five Pillars Of Longevity

10almonds is reader-supported. We may, at no cost to you, receive a portion of sales if you purchase a product through a link in this article.


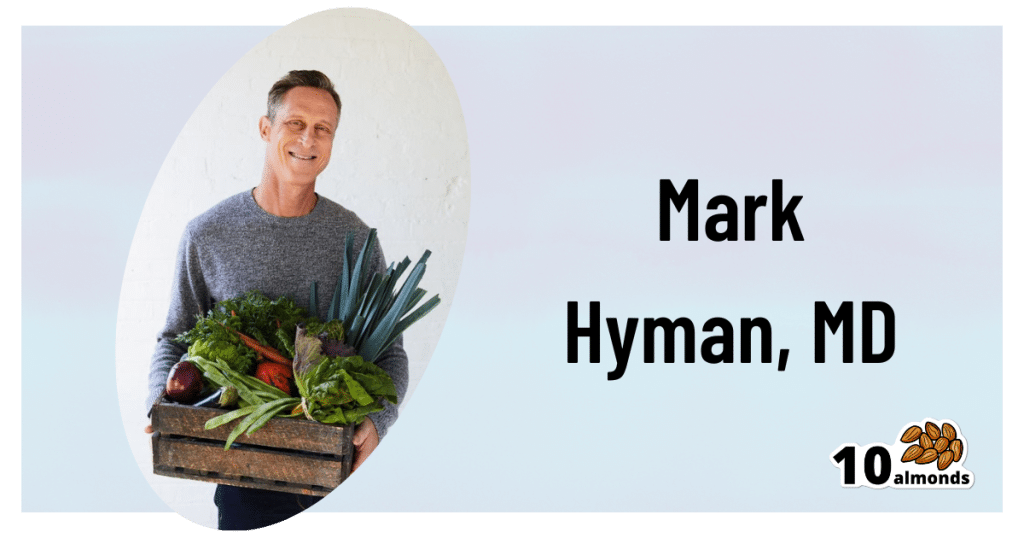

This is Dr. Mark Hyman. He’s a medical doctor, and he’s the board president of clinical affairs of the Institute for Functional Medicine. He’s also the founder and medical director of the UltraWellness Center!

What he’d like you to know about is what he calls the “Five Pillars of Longevity”.

Now, here at 10almonds, we often talk about certain things that science finds to be good for almost any health condition, and have made a habit of referencing what we call “The Usual Five Things™” (not really a trademark, by the way—just a figure of speech), which are:

- Have a good diet

- Get good exercise

- Get good sleep

- Reduce (or eliminate) alcohol consumption

- Don’t smoke

…and when we’re talking about a specific health consideration, we usually provide sources as to why each of them is particularly relevant, and pointers as to the what/how associated with them (i.e. what diet is good, how to get good sleep, etc.).

Dr. Hyman’s “Five Pillars of Longevity” are based on observations from the world’s “Blue Zones”, the popular name for areas with an unusually high concentration of supercentenarians—Sardinia and Okinawa being famous examples, with a particular village in each being especially exemplary.

These Five Pillars of Longevity partially overlap with ours for three out of five, and they are:

- Good nutrition

- Optimized workouts

- Reduce stress

- Get quality sleep

- Find (and live) your purpose

We won’t argue against those! But what does he have to say, for each of them?

Good nutrition

Dr. Hyman advocates for a diet he calls “pegan”, which he considers to combine the paleo and vegan diets. Here at 10almonds, we generally advocate for the Mediterranean Diet because of the mountains of evidence for it, but his approach may be similar in some ways, since it looks to consume a majority plant diet, with some unprocessed meats/fish, limited dairy, and no grains.

By the science, honestly, we stand by the Mediterranean (which includes whole grains), but if for example your body may have issues of some kind with grains, his approach may be a worthy consideration.

Optimized workouts

For Dr. Hyman, this means getting in three kinds of exercise regularly:

- Aerobic/cardio, to look after your heart health

- Resistance training (e.g. weights or bodyweight strength-training) to look after your skeletal and muscular health

- Yoga or similar suppleness training, to look after your joint health

Can’t argue with that, and it can be all too easy to fall into the trap of thinking “I’m healthy because I do x” while forgetting y and/or z! Thus, a three-pronged approach definitely has its merits.

Reduce stress

Acute stress (say, a cold shower) can confer some health benefits, but chronic stress is ruinous to our health and it ages us. So, reducing this is critical. Dr. Hyman advocates for the practice of mindfulness and meditation, as well as journaling.

Get quality sleep

Quality here, not just quantity. As well as the usual “sleep hygiene” advice, he has some more unorthodox methods, such as the use of binaural beats to increase theta-wave activity in the brain (and thus induce more restful sleep), and the practice of turning off Wi-Fi, on the grounds that Wi-Fi signals interfere with our sleep.

We were curious about these recommendations, so we checked out what the science had to say! Here’s what we found:

- Minimal Effects of Binaural Auditory Beats for Subclinical Insomnia: A Randomized Double-Blind Controlled Study

- Spending the night next to a router – Results from the first human experimental study investigating the impact of Wi-Fi exposure on sleep

In short: probably not too much to worry about in those regards. On the other hand, worrying less, unlike those two things, is a well-established way to improve sleep!

(Surprised we disagreed with our featured expert on a piece of advice? Please know: you can always rely on us to stand by what the science says; we pride ourselves on being as reliable as possible!)

Find (and live!) your purpose

This one’s an ikigai thing, to borrow a word from Japanese, or finding one’s raison d’être, as we say in English using French, because English is like that. It’s about having purpose.

Dr. Hyman’s advice here is consistent with what many write on the subject, and it’d be interesting to have more science on it, but meanwhile, it definitely seems consistent with commonalities in the Blue Zone longevity hotspots, where people foster community, have a sense of belonging, know what they are doing for others and keep doing it because they want to, and try to make the world (or even just their little part of it) better for those who will follow.

Being bitter, resentful, and self-absorbed is not, it seems, a path to longevity. But a life of purpose, or even just random acts of kindness, may well be.


Palliative care as a true art form


How do you ease the pain from an ailment amidst lost words? How can you serve the afflicted when lines start to blur? When the foundation of communication begins to crumble, what will be the pillar health-care professionals can lean on to support patients afflicted with dementia during their final days?

The practice of medicine is both highly analytical and evidence based in nature. However, it is considered a “practice” because at the highest level, it resembles a musician navigating an instrument. It resembles art. Between lab values, imaging techniques and treatment options, the nuances for individualized patient care so often become threatened.

Dementia, a non-malignant terminal illness, involves the progressive cognitive and social decline in those afflicted. Though there is no cure, dementia is commonly met in the setting of end-of-life care. During this final stage of life, the importance of comfort via symptomatic management and communication usually is a priority in patient care. But what about the care of a patient suffering from dementia? While communication serves as the vehicle to deliver care at a high level, medical professionals are suddenly met with a roadblock. And there … behind the pieces of shattered communication and a dampened map of ethical guidelines, health-care providers are at a standstill.

It’s 4:37 a.m. You receive a text message from the overnight nurse at a care facility regarding a current seizure. After lorazepam is ordered and administered, Mr. H., a quick-witted 76-year-old, stabilizes. Phenobarbital 15mg SC qhs was also added to prevent future similar events. You exhale a sigh of relief.

Mr. H. had been admitted to the floor 36 hours earlier after having a seizure while playing poker with colleagues. Since he became your patient, he’s shared many stories from professional and family life with you, along with as many jokes as he could fit in between. However, over the course of the next seven days, Mr. H. would develop aspiration pneumonia, progressing to ventilator dependency and, ultimately, multi-organ failure with rapid cognitive decline.

What strategies and tools would you use to maximize the well-being of your patient during his decline? How would you bridge the gap of understanding between the patient’s family and health-care team to provide the standard of care that all patients are owed?

To give Mr. H. the type of care he would have wanted, upon his hospital admission, he should have been questioned about his understanding of illness along with the goals of care of the medical team. The patient should have been informed that it is imperative to adhere to the medical regimen implemented by his team along with the risks of not doing so. In the event disease-related complications arose, advance directives should have been documented to avoid any unnecessary measures.

It is important to note that with each change in the patient’s health status, the goal of treatment must be reassessed. The patient or surrogate decision-maker’s understanding of these goals is paramount in maintaining the patient’s autonomy. It is often said that effective communication is the bedrock of a healthy relationship. This is true regardless of the type of relationship.

This is why Megan Vierhout and I wrote Integrated End of Life Care in Dementia: A Comprehensive Guide, a book targeted at providing a much-needed road map to navigate the many challenges involved in end-of-life care for individuals with dementia. Ultimately, our aim is to provide a compass for both health-care professionals and the families of those affected by the progressive effects of dementia. We provide practical advice on optimizing communication with individuals with dementia while taking their cognitive limitations, preferences and needs into account.

I invite you to explore the unpredictable terrain of end-of-life care for patients with dementia. Together, we can pave a smoother, sturdier path toward the practice of medicine as a true art form.

This article is republished from healthydebate under a Creative Commons license. Read the original article.


Why Adult ADHD Often Leads To Anxiety & Depression


ADHD’s Knock-On Effects On Mental Health

We’ve written before about ADHD in adult life, often late-diagnosed because it’s not quite what people think it is:

In women in particular, it can get missed and/or misdiagnosed:

Miss Diagnosis: Anxiety, ADHD, & Women

…but what we’re really here to talk about today is:

It’s the comorbidities that get you

When it comes to physical health conditions:

- if you have one serious condition, it will (usually) be taken seriously

- if you have two, they will still be taken seriously, but people (friends and family members, as well as yes, medical professionals) will start to back off, as it starts to get too complicated for comfort

- if you have three, people will think you are making at least one of them up for attention now

- if you have more than three, you are considered a hypochondriac and pathological liar

Yet, the reality is: having one serious condition increases your chances of having others, and this chance-increasing feature compounds with each extra condition.

Illustrative example: you have fibromyalgia (ouch) which makes it difficult for you to exercise much, shop around when grocery shopping, and do much cooking at home. You do your best, but your diet slips and it’s hard to care when you just want the pain to stop; you put on some weight, and get diagnosed with metabolic syndrome, which in time becomes diabetes with high cardiovascular risk factors. Your diabetes is immunocompromising; you get COVID and find it’s now Long COVID, which brings about Chronic Fatigue Syndrome, when you barely had the spoons to function in the first place. At this point you’ve lost count of conditions and are just trying to get through the day.

If this is you, by the way, we hope at least something in the following might ease things for you a bit:

- Stop Pain Spreading

- Managing Chronic Pain (Realistically!)

- Eat To Beat Chronic Fatigue (While Having The Limitations Of Chronic Fatigue)

- When Painkillers Aren’t Helping, These Things Might

- The 7 Approaches To Pain Management

It’s the same for mental health

In the case of ADHD as a common starting point (because it’s quite common, may or may not be diagnosed until later in life, and doesn’t require any external cause to appear), it is very common that it will lead to anxiety and/or depression, to the point that it’s perhaps more common to also have one or more of them than not, if you have ADHD.

(Of course, anxiety and/or depression can both pop up for completely unrelated reasons too, and those reasons may be physiological, environmental, or a combination of the two.)

Why?

Because all the good advice that goes for good mental health (and/or life in general) gets harder to actuate when one has ADHD.

- “Strong habits are the core of a good life”, but good luck with that if your brain doesn’t register dopamine in the same way as most people’s does, making intentional habit-forming harder on a physiological level.

- “Plan things carefully and stick to the plan”, but good luck with that if you are neurologically impeded from forming plans.

- “Just do it”, but oops you have the tendency-to-overcommitment disorder and now you are seriously overwhelmed with all the things you tried to do, when each of them alone was already going to be a challenge.

Overwhelm and breakdown are almost inevitable.

And when they happen, chances are you will alienate people, and/or simply alienate yourself. You will hide away, you will avoid inflicting yourself on others, you will brood alone in frustration—or distract yourself with something mind-numbing.

Before you know it, you’re too anxious to try to do things with other people or generally show your face to the world (because how will they react, and won’t you just mess things up anyway?), and/or too depressed to leave your depression-lair (because maybe if you keep playing Kingdom Vegetables 2, you can find a crumb of dopamine somewhere).

What to do about it

How to tackle the many-headed beast? By the heads! With your eyes open. Recognize and acknowledge each of the heads; you can’t beat those heads by sticking your own in the sand.

Also, get help. Those words are often used to mean therapy, but in this case we mean, any help. Enlist your partner or close friend as your support in your mental health journey. Enlist a cleaner as your support in taking that one thing off your plate, if that’s an option and a relevant thing for you. Set low but meaningful goals for deciding what constitutes “good enough” for each life area. Decide in advance what you can safely half-ass, and what things in life truly require your whole ass.

Here’s a good starting point for that kind of thing:

When You Know What You “Should” Do (But Knowing Isn’t The Problem)

And this is an excellent way to “get the ball rolling” if you’re already in a bit of a prison of your own making:

Behavioral Activation Against Depression & Anxiety

If things are already bad, then you might also consider:

- How To Set Anxiety Aside and

- The Mental Health First-Aid That You’ll Hopefully Never Need ← this is about getting out of depression

And if things are truly at the worst they can possibly be, then:

How To Stay Alive (When You Really Don’t Want To)

Take care!


The Cold Truth About Respiratory Infections


The Pathogens That Came In From The Cold

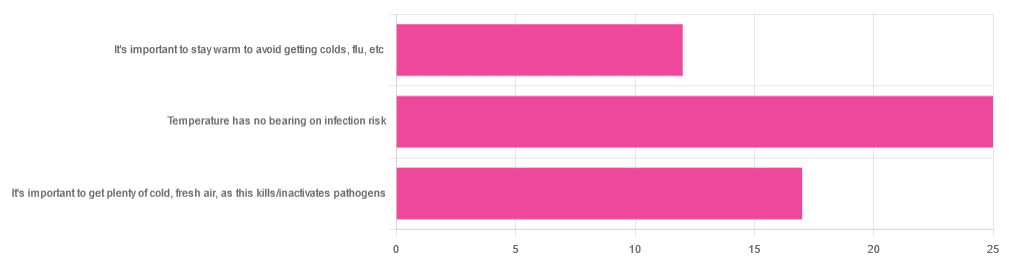

Yesterday, we asked you about your climate-themed policy for avoiding respiratory infections, and got the above-depicted, below-described, set of answers:

- About 46% of respondents said “Temperature has no bearing on infection risk”

- About 31% of respondents said “It’s important to get plenty of cold, fresh air, as this kills/inactivates pathogens”

- About 22% of respondents said “It’s important to stay warm to avoid getting colds, flu, etc”

Some gave rationales, including…

For “stay warm”:

❝Childhood lessons❞

For “get cold, fresh air”:

❝I just feel that it’s healthy to get fresh air daily. Whether it kills germs, I don’t know❞

For “temperature has no bearing”:

❝If climate issue affected respiratory infections, would people in the tropics suffer more than those in colder climates? Pollutants may affect respiratory infections, but I doubt just temperature would do so.❞

So, what does the science say?

It’s important to stay warm to avoid getting colds, flu, etc: True or False?

False, simply. Cold weather does increase the infection risk, but for reasons that a hat and scarf won’t protect you from. More on this later, but for now, let’s lay to rest the idea that bodily chilling will promote infection by cold, flu, etc.

In a small-ish but statistically significant study (n=180), it was found that…

❝There was no evidence that chilling caused any acute change in symptom scores❞

Read more: Acute cooling of the feet and the onset of common cold symptoms

Note: they do mention in their conclusion that chilling the feet “causes the onset of cold symptoms in about 10% of subjects who are chilled”, but the data does not support that conclusion, and the only clear indicator is that people who are more prone to colds generally, were more prone to getting a cold after a cold water footbath.

In other words, people who were more prone to colds remained more prone to colds, just the same.

It’s important to get plenty of cold, fresh air, as this kills/inactivates pathogens: True or False?

Broadly False, though most pathogens do have an optimal operating temperature that (for obvious reasons) is around normal human body temperature.

However, given that they don’t generally have to survive outside of a host body for long to get passed on, the fact that the pathogens may be a little sluggish in the great outdoors will not change the fact that they will be delighted by the climate in your respiratory tract as soon as you get back into the warm.

With regard to the cold air not being a reliable killer/inactivator of pathogens, we call to the witness stand…

Polar Bear Dies From Bird Flu As H5N1 Spreads Across Globe

(it was found near Utqiagvik, one of the northernmost communities in Alaska)

Because pathogens like human body temperature, raising the body temperature is a way to kill/inactivate them: True or False?

True! Unfortunately, it’s also a way to kill us. Because we, too, cannot survive for long above our normal body temperature.

So, for example, bundling up warmly and cranking up the heating won’t necessarily help, because:

- if the temperature is comfortable for you, it’s comfortable for the pathogen

- if the temperature is dangerous to the pathogen, it’s dangerous to you too

This is why the fever response evolved, and/but why many people with fevers die anyway. It’s the body’s way of playing chicken with the pathogen, challenging “guess which of us can survive this for longer!”

Temperature has no bearing on infection risk: True or False?

True and/or False, circumstantially. This one’s a little complex, but let’s break it down to the essentials.

- Temperature has no direct effect, for the reasons we outlined above

- Temperature is often related to humidity, which does have an effect

- Temperature does tend to influence human behavior (more time spent in open spaces with good ventilation vs more time spent in closed quarters with poor ventilation and/or recycled air), which has an obvious effect on transmission rates

The first one we covered, and the third one is self-evident, so let’s look at the second one:

Temperature is often related to humidity, which does have an effect

When the environmental temperature is warmer, water droplets in the air will tend to be bigger, and thus drop to the ground much more quickly.

When the environmental temperature is colder, water droplets in the air will tend to be smaller, and thus stay in the air for longer (along with any pathogens those water droplets may be carrying).

Some papers on the impact of this:

- Cold temperature and low humidity are associated with increased occurrence of respiratory tract infections

- A Decrease in Temperature and Humidity Precedes Human Rhinovirus Infections in a Cold Climate

So whatever temperature you like to keep your environment, humidity is a protective factor against respiratory infections, and dry air is a risk factor.

So, for example:

- If the weather doesn’t suit having good ventilation, a humidifier is a good option

- An airplane is one of the worst places to be for this, outside of a hospital

Don’t have a humidifier? Here’s an example product on Amazon, but by all means shop around.

A crock pot with hot water in it and the lid off is also a very workable workaround.

Take care!


Sweet Dreams Are Made Of Cheese (Or Are They?)


It’s Q&A Day at 10almonds!

Have a question or a request? You can always hit “reply” to any of our emails, or use the feedback widget at the bottom!

In cases where we’ve already covered something, we might link to what we wrote before, but will always be happy to revisit any of our topics again in the future too—there’s always more to say!

As ever: if the question/request can be answered briefly, we’ll do it here in our Q&A Thursday edition. If not, we’ll make a main feature of it shortly afterwards!

So, no question/request is too big or small!

❝In order to lose a little weight I have cut out cheese from my diet – and am finding that I am sleeping better. Would be interested in your views on cheese and sleep, and whether some types of cheese are worse for sleep than others. I don’t want to give up cheese entirely!❞

In principle, there’s nothing in cheese that, biochemically, should impair sleep. If anything, its tryptophan content could aid good sleep.

Tryptophan is found in many foods, including cheese; cheddar, for example, ranks second only to pumpkin seeds (of common foods, anyway) in tryptophan content.

Tryptophan can be converted by the body into 5-HTP, which you’ve maybe seen sold as a supplement. Its full name is 5-hydroxytryptophan.

5-HTP can, in turn, be used to make melatonin and/or serotonin. Which of those you will get more of, depends on what your body is being cued to do by ambient light/darkness, and other environmental cues.

If you are having cheese and then checking your phone, for instance, or otherwise hanging out where there are white/blue lights, then your body may dutifully convert the tryptophan into serotonin (calm wakefulness) instead of melatonin (drowsiness and sleep).

In short: the cheese will (in terms of this biochemical pathway, anyway) augment some sleep-inducing or wakefulness-inducing cues, depending on which are available.

You may be wondering: what about casein?

Casein is oft-touted as producing deep sleep, or disturbed sleep, or vivid dreams, or bad dreams. There’s no science to back any of this up, though the following research review is fascinating:

Dreams of the Rarebit Fiend: food and diet as instigators of bizarre and disturbing dreams

(it largely supports the null hypothesis of “not a causal factor” but does look at the many more likely alternative explanations, ranging from associated, actually causal factors (such as alcohol and caffeine) to the placebo/nocebo effect)

Finally, simple digestive issues may be the real thing at hand:

Worth noting that around two thirds of all people, including those who regularly enjoy dairy products, have some degree of lactose intolerance:

Lactose Intolerance in Adults: Biological Mechanism and Dietary Management

So, in terms of what cheese may be better/worse for you in this context, you might try experimenting with lactose-free cheese, which will help you identify whether that was the issue!


Get Rid Of Female Facial Hair Easily


Dr. Sam Ellis, dermatologist, explains:

Hair today; gone tomorrow

While a little peach fuzz is pretty ubiquitous, coarser hairs are less common in women, especially earlier in life. However, even before menopause, such hair can be caused by many things, ranging from PCOS to genetics and more. In most cases, the underlying issue is excess androgen production, for one reason or another (i.e. there are many possible reasons, beyond the scope of this article).

Options for dealing with this include…

- Topical, such as eflornithine (e.g. Vaniqa), which thins terminal hairs (those are the coarse kind); a course of 6–8 weeks’ continued use is needed.

- Hormonal, such as estrogen (opposes testosterone and suppresses it), progesterone (downregulates 5α-reductase, which means less serum testosterone is converted to the more powerful dihydrotestosterone (DHT) form), and spironolactone or other testosterone-blockers; these are not hormones themselves, but they do what it says on the tin (block testosterone).

- Non-medical, such as electrolysis, laser, and IPL. Electrolysis works on all hair colors but takes longer; laser requires darker hair against paler skin* (because it works by superheating the pigment of the hair while not doing the same to the skin) but takes more treatments; and IPL is a less-effective, more-convenient at-home option that works on the same principles as laser (and so has the same color-based requirements), and simply takes even longer than laser.

*so for example:

- Black hair on white skin? Yes

- Red hair on white skin? Potentially; it depends on the level of pigmentation. But it’s probably not the best option.

- Gray/blonde hair on white skin? No

- Black hair on mid-tone skin? Yes, but a slower pace may be needed for safety

- Anything else on mid-tone skin? No

- Anything on dark skin? No

For more on all of this, enjoy:

Click Here If The Embedded Video Doesn’t Load Automatically!

Want to learn more?

You might also like to read:

Too Much Or Too Little Testosterone?

Take care!


Over-50s Physio: What My 5 Oldest Patients (Average Age 92) Do Right


Oftentimes, people of particularly advanced years will be asked their secret to longevity, and sometimes the answers aren’t that helpful because they don’t actually know, and ascribe it to some random thing. Will Harlow, the over-50s specialist physio, talks about the top 5 science-based things that his 5 oldest patients do that enhance the healthy longevity they are enjoying:

The Top 5’s Top 5

Here’s what they’re doing right:

Daily physical activity: all five patients maintain a consistent habit of daily exercise, which includes activities like exercise classes, home workouts, playing golf, or taking daily walks. They prioritize movement even when it’s difficult, rarely skipping a day unless something serious happens. A major motivator is the fear of losing mobility, as they have seen spouses, friends, or family members stop exercising and never start again.

Stay curious: a shared trait among the patients was their curiosity and eagerness to learn. They enjoy meeting new people, exploring new experiences, and taking on new challenges. Two of them attended the University of the Third Age to learn new skills, while another started playing bridge as a new hobby. The remaining two have recently made new friends. They all maintain a playful attitude, a good sense of humor, and aren’t afraid to fail or laugh at themselves.

Prioritize sleep (but not too much): the patients each average seven hours of sleep per night, aligning with research suggesting that 7–9 hours of sleep is ideal for health. They maintain consistent sleep and wake-up times, which contributes to their well-being. While they allow themselves short naps when needed, they avoid long afternoon naps so as not to disrupt their sleep patterns.

Spend time in nature: spending time outdoors is a priority for all five individuals. Whether through walking, gardening, or simply sitting on a park bench, they make it a habit to connect with nature. This aligns with studies showing that time spent in natural environments, especially near water, significantly reduces stress. When water isn’t accessible, green spaces still provide a beneficial boost to mental health.

Stick to a routine: the patients all value simple daily routines, such as enjoying an evening cup of tea, taking a daily walk, or committing to small gardening tasks. These routines offer mental and physical grounding, providing stability even when life becomes difficult. They emphasize the importance of keeping routines simple and manageable, so that they can stick to them regardless of life’s challenges.

For more on each of these, enjoy:

Click Here If The Embedded Video Doesn’t Load Automatically!

Want to learn more?

You might also like to read:

Top 8 Habits Of The Top 1% Healthiest Over-50s ← another approach to the same question, this time with a larger sample size, and/but many younger (than 90s) respondents.

Take care!
