Five Advance Warnings of Multiple Sclerosis

10almonds is reader-supported. We may, at no cost to you, receive a portion of sales if you purchase a product through a link in this article.


First things first, a quick check-in with regard to how much you know about multiple sclerosis (MS):

- Do you know what causes it?

- Do you know how it happens?

- Do you know how it can be fixed?

If your answer to the above questions is “no”, then take solace in the fact that modern science doesn’t know either.

What we do know is that it’s an autoimmune condition, and that it results in the degradation of myelin, the “insulator” of nerves, in the central nervous system.

- How exactly this is brought about remains unclear, though there are several leading hypotheses, including an autoimmune attack on myelin itself or disruption of myelin production.

- Treatments look to reduce/mitigate inflammation, and/or treat other symptoms (which are many and various) on an as-needed basis.

If you’re wondering about the prognosis after diagnosis, the scientific consensus on that is also “we don’t know”:

Read: Personalized medicine in multiple sclerosis: hope or reality?

This paper, like every other one we considered putting in that spot, concludes by essentially begging for research to identify useful biomarkers that could help classify the many distinct forms of MS, rather than the current “you have MS, but who knows what that will mean for you personally because it’s so varied” approach.

The Five Advance Warning Signs

Something we do know! First, here is the researchers’ conclusion, quoted directly:

❝We identified 5 health conditions associated with subsequent MS diagnosis, which may be considered not only prodromal but also early-stage symptoms.

However, these health conditions overlap with prodrome of two other autoimmune diseases, hence they lack specificity to MS.❞

So, these things are a warning, five alarm bells, but not necessarily diagnostic criteria.

Without further ado, the five things are:

- depression

- sexual disorders

- constipation

- cystitis

- urinary tract infections

❝This association was sufficiently robust at the statistical level for us to state that these are early clinical warning signs, probably related to damage to the nervous system, in patients who will later be diagnosed with multiple sclerosis.

The overrepresentation of these symptoms persisted and even increased over the five years after diagnosis.❞

Read the paper for yourself:

Hot off the press! Published only yesterday!

Want to know more about MS?

Here’s a very comprehensive guide:

National clinical guideline for diagnosis and management of multiple sclerosis

Take care!

Don’t Forget…

Did you arrive here from our newsletter? Don’t forget to return to the email to continue learning!


Learn to Age Gracefully

Join the 98k+ American women taking control of their health & aging with our 100% free (and fun!) daily emails:


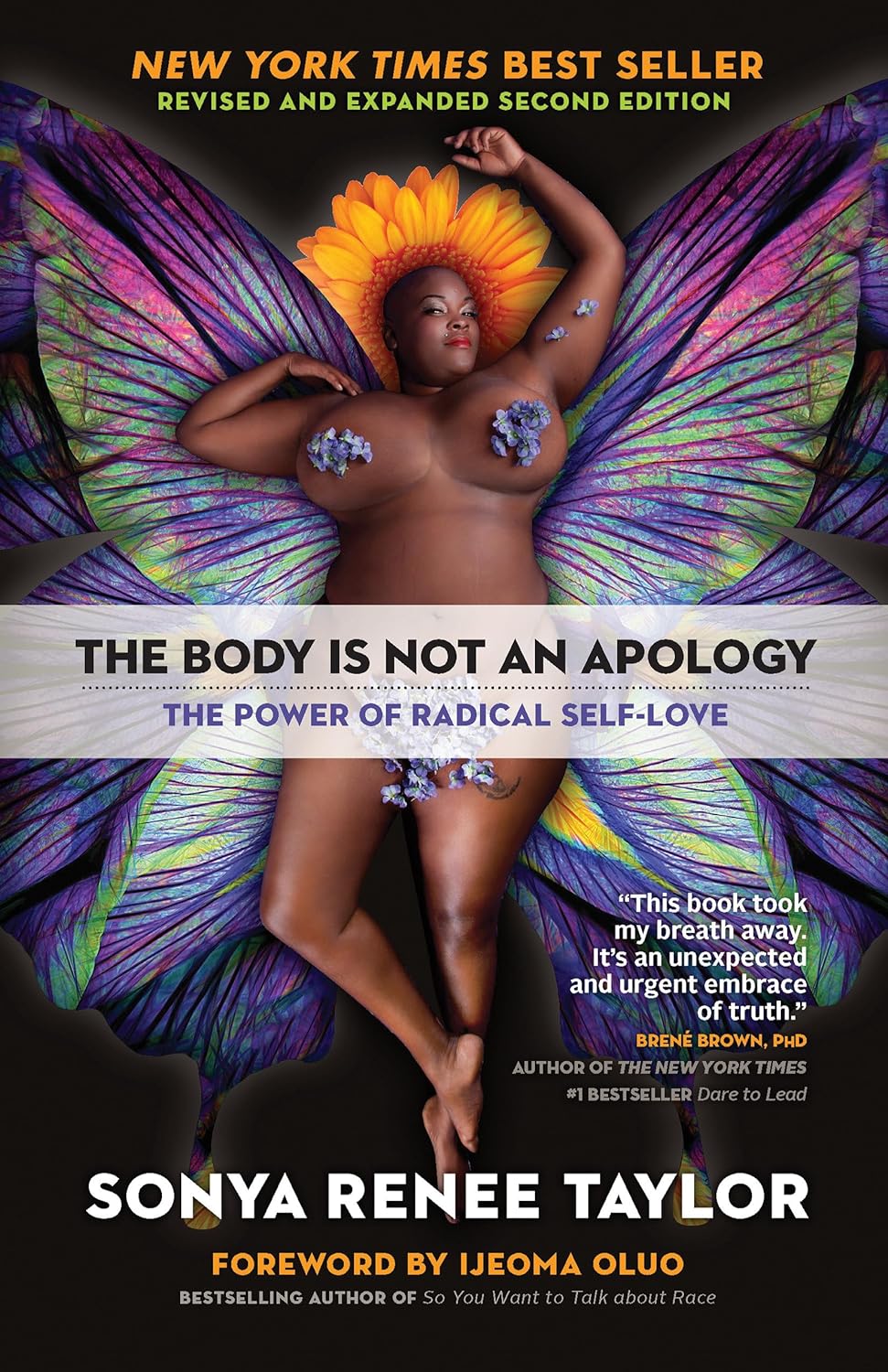

The Body Is Not an Apology – by Sonya Renee Taylor


First, a couple of things that this book is not about:

- Self-confidence (it’s about more than merely thinking highly of oneself)

- Self-acceptance (it’s about more than merely settling for “good enough”)

In contrast, it’s about loving and celebrating what is, while striving for better, for oneself and for others.

You may be wondering: whence the “radical” in the book’s full title, The Body Is Not an Apology: The Power of Radical Self-Love?

The author argues that often, the problem with our bodies is not actually our bodies. If we have cancer, or diabetes, then sure, that’s a problem with the body. But most of the time, the “problem with our bodies” is simply society’s rejection of our “imperfect” bodies as somehow “less than”, and something we must invest time and money to correct. Hence, the need for a radical uprooting of ideas, to fix the real problem.

Bottom line: if, like most of us, you have a body that would not entirely pass for that of a Marvel Comics superhero, this is a book for you. And if you do have an MCU body? This is also a book for you, because we have bad news for you about what happens with age.

Click here to check out The Body Is Not An Apology, and appreciate more about yours!


Ginger Does A Lot More Than You Think


Ginger’s benefits go deep!

You are doubtless already familiar with what ginger is, so let’s skip right to the science.

The most relevant active compound in the ginger root is called gingerol, and people enjoy ginger not just for its taste, but also for a stack of health reasons, such as:

- For weight loss

- Against nausea

- Against inflammation

- For cardiovascular health

- Against neurodegeneration

Quite a collection! So, what does the science say?

For weight loss

This one’s quite straightforward. Ginger not only helps with overall weight loss, but also specifically improves waist-hip ratio, which is a much more important indicator of health than BMI.

Against nausea & pain

Ginger has proven its effectiveness in many high-quality clinical trials against general nausea, post-surgery nausea, chemotherapy-induced nausea, and pregnancy-related nausea.

Source: Ginger on Human Health: A Comprehensive Systematic Review of 109 Randomized Controlled Trials

However! While it very clearly has been shown to be beneficial in the majority of cases, there are some small studies that suggest it may not be safe to take close to the time of giving birth, or in people with a history of pregnancy loss, or unusual vaginal bleeding, or clotting disorders.

See specifically: Ginger for nausea and vomiting of pregnancy

As a side note on the topic of “trouble down there”, ginger has also been found to be as effective as Novafen (a combination drug of acetaminophen, the active ingredient in Tylenol, plus caffeine and ibuprofen) at relieving menstrual pain:

See: Effect of Ginger and Novafen on menstrual pain: A cross-over trial

Against inflammation & pain

Ginger has well-established anti-inflammatory effects (and, incidentally, antioxidant effects, which act on many of the same systems). Let’s take a look at that first:

Read: Effect of Ginger on Inflammatory Diseases

Attentive readers will note that this means that ginger is not merely some nebulous anti-inflammatory agent. Rather, it also specifically helps alleviate well-defined inflammatory diseases, ranging from colitis to Crohn’s, arthritis to lupus.

We’ll be honest (we always are!), the benefits in this case are not necessarily life-changing, but they are a statistically significant improvement, and if you are living with one of those conditions, chances are you’ll be glad of even things described in scientific literature as “modestly efficacious”.

What does “modestly efficacious” look like? Here are the numbers from a review of 593 patients’ results in clinical trials (against placebo):

❝Following ginger intake, a statistically significant pain reduction SMD = −0.30 [95% CI: (−0.50, −0.09), P = 0.005] with a low degree of inconsistency among trials (I² = 27%), and a statistically significant reduction in disability SMD = −0.22 [95% CI: (−0.39, −0.04); P = 0.01; I² = 0%] were seen, both in favor of ginger.❞

To de-mathify that:

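- Ginger reduced pain scores by about 0.30 standard deviations relative to placebo (a small-to-moderate effect)

- Ginger reduced disability scores by about 0.22 standard deviations relative to placebo (a small effect)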

Read the source: Efficacy and safety of ginger in osteoarthritis patients: a meta-analysis of randomized placebo-controlled trials
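For reference, a standardized mean difference (SMD) is measured in standard deviations, not percent. In its usual form (Cohen’s d; we are assuming this form, since the meta-analysis may instead use a small-sample correction such as Hedges’ g), it is the difference between the group means divided by the pooled standard deviation, where n₁, s₁ and n₂, s₂ are the size and standard deviation of the ginger and placebo groups:

```latex
\mathrm{SMD} = \frac{\bar{x}_{\text{ginger}} - \bar{x}_{\text{placebo}}}{s_{\text{pooled}}},
\qquad
s_{\text{pooled}} = \sqrt{\frac{(n_1 - 1)\,s_1^2 + (n_2 - 1)\,s_2^2}{n_1 + n_2 - 2}}
```

So SMD = −0.30 means the ginger groups’ average pain score sat about 0.30 pooled standard deviations below placebo. By Cohen’s common rule of thumb (0.2 small, 0.5 medium, 0.8 large), that is a small-to-moderate effect, which fits the “modestly efficacious” description.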

Partly because of the same signalling pathways, it may also have benefits against cancer (and, as you’ll remember, it also reduces chemotherapy-induced nausea).

See for example: Ginger’s Role in Prevention and Treatment of Gastrointestinal Cancer

For cardiovascular health

In this case, its benefits are mostly twofold:

- It significantly reduces triglycerides and LDL cholesterol, while increasing HDL cholesterol

- It significantly reduces fasting blood sugar levels and HbA1c levels (both risk factors for CVD)

Against neurodegeneration

Ginger’s benefit here comes in large part from reducing inflammation, which we discussed earlier.

But not everything crosses the blood-brain barrier, so it’s worth noting when something (like gingerol) affects brain health as well as the rest of the body.

You do not want inflammation in your brain; that is Bad™ and strongly associated with Alzheimer’s and Parkinson’s.

As well as reducing neuroinflammation, ginger has other relevant mechanisms too:

❝Its bioactive compounds may improve neurological symptoms and pathological conditions by modulating cell death or cell survival signaling molecules.

The cognitive enhancing effects of ginger might be partly explained via alteration of both the monoamine and the cholinergic systems in various brain areas.

Moreover, ginger decreases the production of inflammatory related factors❞

Check it out in full, as this is quite interesting:

Role of Ginger in the Prevention of Neurodegenerative Diseases

How much to take?

In most studies, doses of 1–3 grams/day were used.

Where to get it?

Your local supermarket, as a first port-of-call. Especially given the dose you want, it may be nicer for you to have a touch of sliced ginger root in your cooking, rather than taking 2–6 capsules per day to get the same dose.

Obviously, this depends on your culinary preferences, and ginger certainly doesn’t go with everything!

If you do want it as a supplement, here is an example product on Amazon, for your convenience.

Enjoy!


What’s Your Plant Diversity Score?


We speak often about the importance of dietary diversity, and of that, especially diversity of plants in one’s diet, but we’ve never really focused on it as a main feature, so that’s what we’re going to do today.

Specifically, you may have heard the advice to “eat 30 different kinds of plants per week”. But where does that come from, and is it just a number out of a hat?

The magic number?

It is not, in fact, a number out of a hat. It’s from a big (n=11,336) study into what things affect the gut microbiome for better or for worse. It was an observational population study, championing “citizen science” in which volunteers tracked various things and collected and sent in various samples for analysis.

The most significant finding of this study was that those who consumed more than 30 different kinds of plants per week had a much better gut microbiome than those who consumed fewer than 10 different kinds of plants per week (there is a bell curve at play, and it gets steep around 10 and 30):

American Gut: an Open Platform for Citizen Science Microbiome Research

Why do I care about having a good gut microbiome?

Gut health affects almost every other kind of health; it’s been called “the second brain” for the various neurotransmitters and other hormones it directly makes or indirectly regulates (which in turn affect every part of your body), and of course there is the vagus nerve connecting it directly to the brain, impacting everything from food cravings to mood swings to sleep habits.

See also:

Any other benefits?

Yes, there are! Let’s not forget: as we often see in our “This or That” section, different foods can be strong or weak in different areas of nutrition, so unless we want to whip out a calculator and database every time we make food choices, a good way to cover everything is to simply eat a diverse diet.

And that goes not just for vitamins and minerals (which would be true of animal products also), but in the case of plants, a wide range of health-giving phytochemicals too:

Measuring Dietary Botanical Diversity as a Proxy for Phytochemical Exposure

Ok, I’m sold, but 30 is a lot!

It is, but you don’t have to do all 30 in your first week of focusing on this, if you’re not already accustomed to such diversity. You can add in one or two new ones each time you go shopping, and build it up.

As for “what counts”: we’re counting unprocessed or minimally-processed plants. So for example, an apple is an apple, as are dried apple slices, as is apple sauce. Any or all of those would count as 1 plant type.

Note also that we’re counting types, not totals. If you’re having apple slices with apple sauce, for some reason? That still only counts as 1.

However, while apple sauce still counts as apples (minimally processed), you cannot eat a cake and say “that’s 2 because there was wheat and sugar cane somewhere in its dim and distant history”.

Nor is your morning espresso a fruit (even though, botanically speaking, coffee beans are the seeds of the coffee plant’s fruit). However, it would count as 1 plant type if you eat actual coffee beans (this writer has been known to snack on them; they’re only healthy in very small portions, though, because their saturated fat content is a little high).

You do, however, count grains in general, as well as nuts and seeds, not just fruits and vegetables. As for herbs and spices, they count for ¼ each, except for salt, which might get lumped in with spices but is of course not a plant.
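To make these counting rules concrete, here is a minimal Python sketch of a weekly tally. The `FoodEntry` type, the category names, and the `plant_diversity_score` helper are all hypothetical, invented for illustration rather than taken from any app or from the study; the sketch simply encodes the rules above: each plant type counts once per week, herbs and spices count ¼, and salt or heavily processed foods count zero.

```python
from dataclasses import dataclass

# Hypothetical categories for this sketch (not an official scheme).
WHOLE_PLANT = "whole_plant"      # fruit, veg, grains, legumes, nuts, seeds: 1 each
HERB_OR_SPICE = "herb_or_spice"  # herbs and spices: 1/4 each
NOT_COUNTED = "not_counted"      # salt, cake, espresso, etc.: 0

WEIGHTS = {WHOLE_PLANT: 1.0, HERB_OR_SPICE: 0.25, NOT_COUNTED: 0.0}


@dataclass(frozen=True)
class FoodEntry:
    plant_type: str  # e.g. "apple" covers fresh apple, dried slices, apple sauce
    category: str    # one of the three hypothetical categories above


def plant_diversity_score(week: list[FoodEntry]) -> float:
    """Hypothetical helper: sum the weights of distinct plant types in one week."""
    best: dict[str, float] = {}
    for entry in week:
        weight = WEIGHTS[entry.category]
        # Types, not totals: a repeated plant type keeps a single (highest) weight.
        best[entry.plant_type] = max(best.get(entry.plant_type, 0.0), weight)
    return sum(best.values())


week = [
    FoodEntry("apple", WHOLE_PLANT),           # apple slices
    FoodEntry("apple", WHOLE_PLANT),           # apple sauce: still the same type
    FoodEntry("oats", WHOLE_PLANT),
    FoodEntry("turmeric", HERB_OR_SPICE),
    FoodEntry("black pepper", HERB_OR_SPICE),
    FoodEntry("salt", NOT_COUNTED),            # not a plant
    FoodEntry("cake", NOT_COUNTED),            # too processed to count
]

print(plant_diversity_score(week))  # 2.5
```

Keying the tally on plant type is what enforces “types, not totals”: apple slices with apple sauce still add only 1, and the week’s goal is a score of 30 or more.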

How to do it

There’s a reason we’re doing this in our Saturday Life Hacks edition. Here are some tips for getting in far more plants than you might think, a lot more easily than you might think:

- Buy things ready-mixed. This means buying the frozen mixed veg, the frozen mixed chopped fruit, the mixed nuts, the mixed salad greens etc. This way, when you’re reaching for one pack of something, you’re getting 3–5 different plants instead of one.

- Buy things individually, and mix them for storage. This is a more customized version of the above, but in the case of things that keep for at least a while, it can make lazy options a lot more plentiful. Suddenly, instead of rice with your salad you’re having sorghum, millet, buckwheat, and quinoa. This trick also works great for dried berries that can just be tipped into one’s morning oatmeal. Or, you know, millet, oats, rye, and barley. Suddenly, instead of 1 or 2 plants for breakfast you have maybe 7 or 8.

- Keep a well-stocked pantry of shelf-stable items. This is good practice anyway, in case of another supply-lines shutdown like at the start of the COVID-19 pandemic. But for plant diversity, it means that if you’re making enchiladas, then instead of using kidney beans because that’s what’s in the cupboard, you can raid your pantry for kidney beans, black beans, pinto beans, fava beans, etc. Yes, all of them; that’s a list, not a menu.

- Shop in the discount section of the supermarket. You don’t have to shop exclusively there, but swing by that area, see what plants are available for next to nothing, and buy at least one of each. Figure out what to do with them later; the point here is that it’s a good way to get suggestions of plants that you weren’t actively looking for, and novelty is invariably a step into diversity.

- Shop in a different store. You won’t be able to make a beeline for the products you want on autopilot, so you’ll see other things on the way. Also, they may have things your usual store doesn’t.

- Shop in person, not online—at least as often as is practical. This is because when shopping for groceries online, the store will tend to prioritize showing you items you’ve bought before, or similar items to those (i.e. actually the same item, just a different brand). Not good for trying new things!

- Consider a meal kit delivery service. Because unlike online grocery shopping, this kind of delivery service will (usually) provide you with things you wouldn’t normally buy. Our sometimes-sponsor Purple Carrot is a fine option for this, but there are plenty of others too.

- Try new recipes, especially if they have plants you don’t normally use. Make a note of the recipe, and go out of your way to get the ingredients; if it seems like a chore, reframe it as a little adventure instead. Honestly, it’s things like this that keep us young in more ways than just what polyphenols can do!

- Hide the plants. Whether or not you like them, hide them, simply because it works in culinary terms. By this we mean: blend beans into that meaty sauce, thicken the soup with red lentils, blend cauliflower into the gravy, and so on.

One more “magic 30”, while we’re at it…

30g fiber per day makes a big (positive) difference to many aspects of health. Obviously, plants are where that comes from, so there’s a big degree of overlap here, but most of the tips we gave are different, so for double the effectiveness, check out:

Level-Up Your Fiber Intake! (Without Difficulty Or Discomfort)

Enjoy!


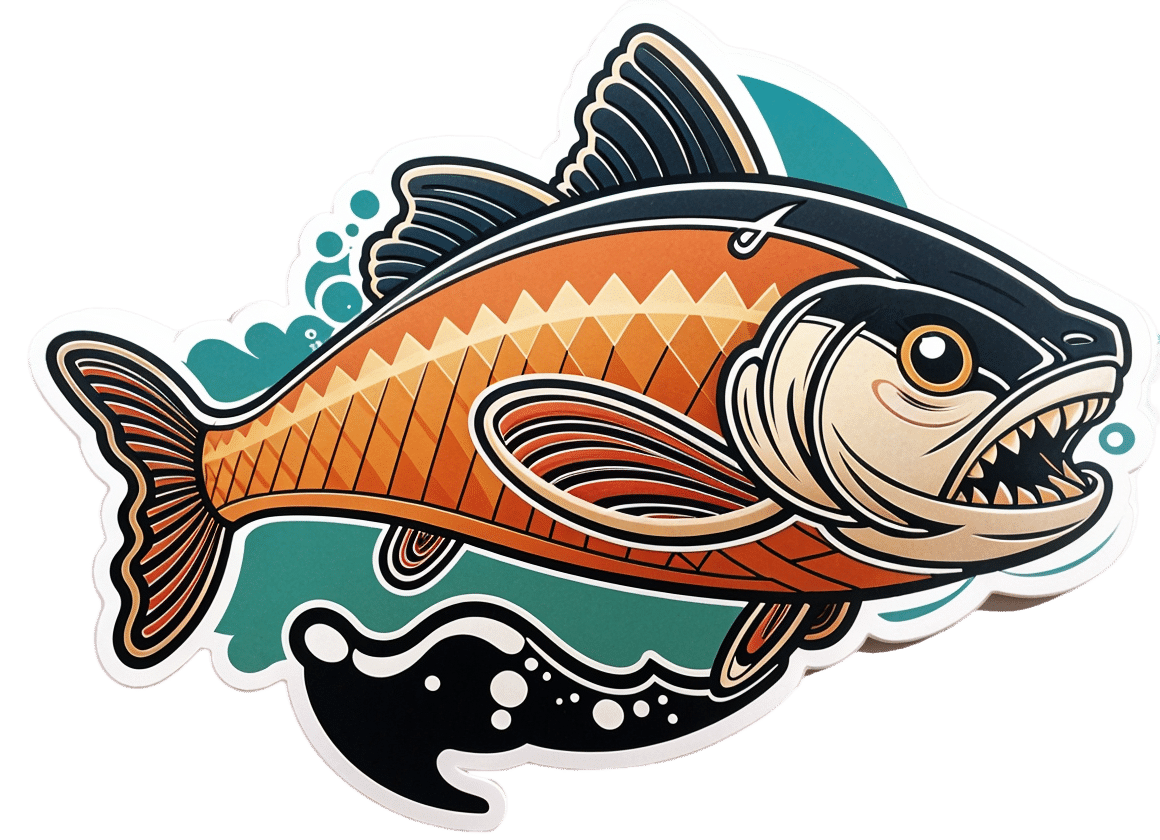

Green Curry Salmon Burgers


These lean and healthy burgers are as quick and easy to make as they are good for entertaining. The serving-bed has its nutritional secrets too! All in all, an especially heart-healthy and brain-healthy dish.

You will need

- 4 skinless salmon fillets, cubed (Vegetarian/Vegan? Consider this Plant-Based Salmon Recipe or, since they are getting blended, simply substitute 1½ cups cooked chickpeas plus 1 tbsp tahini)

- 2 cloves garlic, chopped

- 2 tbsp Thai green curry paste

- juice of two limes, plus wedges to serve

- 1 cup quinoa

- ½ cup edamame beans, thawed if they were frozen

- large bunch fresh cilantro (or parsley if you have the “cilantro tastes like soap” gene), chopped

- extra virgin olive oil, for frying

- 1 tbsp chia seeds

- 1 tbsp nutritional yeast

- 2 tsp black pepper, coarse ground

Method

(we suggest you read everything at least once before doing anything)

1) Put the salmon, garlic, curry paste, nutritional yeast, and half the lime juice into a food processor, and blend until smooth.

2) Remove, divide into four parts, and shape into burger patties. Put them in the fridge where they can firm up while we do the next bit.

3) Cook the quinoa with the tablespoon of chia seeds added (which means boiling water and then letting it simmer for 10–15 minutes; when the quinoa is tender and unfurled a little, it’s done).

4) Drain the quinoa with a sieve, and stir in the edamame beans, the rest of the lime juice, the cilantro, and the black pepper. Set aside.

5) Using the olive oil, fry the salmon burgers for about 5 minutes on each side.

6) Serve; we recommend putting the burgers atop the rest, and adding a dash of lime at the table.


Enjoy!

Want to learn more?

For those interested in some of the science of what we have going on today:

- Farmed Fish vs Wild–Caught

- Level-Up Your Fiber Intake! (Without Difficulty Or Discomfort)

- What Omega-3 Fatty Acids Really Do For Us

- If You’re Not Taking Chia, You’re Missing Out

- Our Top 5 Spices: How Much Is Enough For Benefits?

Take care!


Tooth Remineralization: How To Heal Your Teeth Naturally


Dr. Michelle Jorgensen, dentist, explains:

The bare-bones details:

Teeth cannot be regrown (yet!) but can be remineralized, which simply involves restoring lost minerals. When we’re talking about health, “minerals” is usually used to mean elemental minerals, like calcium, magnesium, phosphorus, etc., but the specific mineral that’s needed here is hydroxyapatite (a calcium phosphate mineral, the same as is found in bones).

Not only can acids from food and bacteria dissolve the minerals from the teeth, but also, the body itself may extract minerals from the teeth if it needs them for other functions it considers more critical and/or more urgent.

Cavities occur when acids create porous holes in teeth by dissolving minerals; this allows bacteria to invade, and the bacteria produce more acid, which deepens the holes into cavities.

Remineralization can be achieved by doing the following things:

- Use hydroxyapatite-based products (tooth powder, mouthwash).

- Improve gut health to ensure proper mineral absorption.

- Reduce acidic food and drink intake.

- Maintain good oral hygiene to prevent bacteria build-up.

- Eat foods rich in vitamins A, D, E, and K, which help direct minerals to teeth and bones.

For more on all of the above, enjoy:


Want to learn more?

You might also like to read:

- Less Common Oral Hygiene Options

- Fluoride Toothpaste vs Non-Fluoride Toothpaste – Which is Healthier?

Take care!


Blueberries vs Banana – Which is Healthier?


Our Verdict

When comparing blueberries to banana, we picked the banana.

Why?

Surprise, that which is more expensive is not always commensurately more healthy! A lot of the price difference between bananas and blueberries comes down to:

- ease of transport (unripe bananas can be transported quite easily without too much risk of bruising; unripe blueberries can’t even be usefully picked)

- shelf-life (unripe bananas will take their time to ripen; the already-ripe blueberries will often go bad very quickly)

For this reason, frozen blueberries are a great option for budget-friendly berries. But, onto the comparisons:

In terms of macros, bananas have slightly more protein, carbs, and fiber, and a slightly lower glycemic index. Really, both are good, but by the numbers, bananas win.

When it comes to vitamins, blueberries have more of vitamins B1, C, E, and K, while bananas have more of vitamins A, B2, B3, B5, B6, B9, and choline. Another win for bananas. Of course, we could quibble over which vitamins are most likely to be lacking in the rest of one’s diet, but we simply compared the nutrients head-to-head without trying to guess the rest of anyone’s diet.

In the category of minerals, blueberries have more calcium and manganese, while bananas have more copper, magnesium, phosphorus, potassium, and selenium. Another win for bananas.

As for polyphenols, this is where blueberries shine, with far more than bananas (difficult to calculate exactly due to variations, but on the order of hundreds of times more). A win for blueberries this time.

Adding up the sections gives us an overall win for bananas, but by all means enjoy either or both; perhaps even together!

Want to learn more?

You might like to read:

Blueberry & Banana Collagen Baked Oats ← You will love this recipe! And… Good news for vegans/vegetarians: while we include an optional tablespoon of collagen powder in this recipe, the whole recipe is already geared around collagen synthesis, so it’s very collagen-boosting even with just the plants, providing collagen’s building blocks of protein, zinc, and vitamins C and D (your miraculous body will use these to assemble the collagen inside you).

Enjoy!
