10% Human – by Dr. Alanna Collen

10almonds is reader-supported. We may, at no cost to you, receive a portion of sales if you purchase a product through a link in this article.

The title, of course, is a nod to how by cell count, we are only about 10% human, and the other 90% are assorted microbes.

Dr. Collen starts with the premise that “all diseases begin in the gut” which is perhaps a little bold, but as a general rule of thumb, the gut is, in fairness, implicated in most things—even if not being the cause, it generally plays at least some role in the pathogenesis of disease.

The book talks us through the various ways that our trillions of tiny friends (and some foes) interact with us, from immune-related considerations, to nutrient metabolism, to neurotransmitters, and in some cases, direct mind control. That last one may sound like a stretch, but it has to do with the vagus nerve “gut-brain highway”, and how microbes have evolved to tug on its strings just right. Bear in mind that most of these microbes have very short life cycles, which means evolution happens for them much more rapidly than it does for us; this is something that Dr. Collen, with her PhD in evolutionary biology, has plenty to say about.

There is a practical element too: advice on how to avoid the many illnesses that come with having our various microbiomes (it’s not just the gut!) out of balance, and how to keep everything working together as a team.

The style is quite light pop-science and, once we get past the first chapter (which is about the history of the field), quite a pleasant read as Dr. Collen has an enjoyable and entertaining tone.

Bottom line: if you’d like to understand more about all the things that come together to make us functionally 100% human, then this book is an excellent guide to that.

Click here to check out 10% Human, and learn about how we interact with ourselves!


-

The Circadian Diabetes Code – by Dr. Satchin Panda


We have previously reviewed Dr. Panda’s “The Circadian Code” which pertains to the circadian rhythms (yes, plural) in general; this one uses much of the same research, but with a strong focus on the implications for blood sugar management.

It’s first a primer on diabetes (and prediabetes, and, in contrast, what things should look like if healthy). You’ll understand glucose metabolism, glycogen, insulin, and more; you’ll understand what blood sugar readings mean, and you’ll know what an HbA1c level actually is and what it should look like, too.

After that, it’s indeed about what the subtitle promises: the right times to eat (and what to eat), when to exercise (and how, at which time), and how to optimize your sleep in the context of circadian rhythm and blood sugar management.

You may be wondering: why does circadian rhythm matter for blood sugars? The answer is explained at some length in the first part of the book, but to oversimplify greatly: your body needs energy all the time, no matter when it was that you last ate. Thus, it has to organize its energy reserves so that at all times you can 1) function, on a cellular level, and 2) maintain a steady balance of sugar in your blood despite using it at slightly higher or lower levels at different times of day. Because the basal metabolic rate accounts for most of our energy use, the body has to plan for a base rate of so much energy per day, and to do that, it needs to know what a day is. Dr. Panda explains this in detail (the marvels of PER proteins and all that), but basically, that’s the relevance of circadian rhythm.

However, it’s not all theory and biochemistry; there is also a 12-week program to reverse prediabetes and type 2 diabetes (it will not, of course, reverse type 1 diabetes, sorry; but the program will still be beneficial even in that case, since more even blood sugars means fewer woes).

The style is friendly and clear, explaining the science simply, yet without patronizing the reader. References are given, with claims sourced in an extensive bibliography.

Bottom line: if you or a loved one have diabetes or prediabetes, or just have a strong desire to avoid getting such and generally keep your metabolic health in good order, this book will definitely help.

Click here to check out The Circadian Diabetes Code, and enjoy better blood sugar health than ever!


-

Rethinking Exercise: The Workout Paradox


The notion of running a caloric deficit (i.e., expending more calories than we consume) to reduce bodyfat is appealing in its simplicity, but… we’d say “it doesn’t actually work outside of a lab”, but honestly, it doesn’t actually work outside of a calculator.

Why?

For a start, exercise calorie costs are quite small numbers compared to metabolic base rate. Our brain alone uses a huge portion of our daily calories, and the rest of our body literally never stops doing stuff. Even if we’re lounging in bed and ostensibly not moving, on a cellular level we stay incredibly busy, and all that costs (and the currency is: calories).

Since that cost is reflected in the body’s budget per kg of bodyweight, a larger body (regardless of its composition) will require more calories than a smaller one. We say “regardless of its composition” because this is true regardless—but for what it’s worth, muscle is more “costly” to maintain than fat, which is one of several reasons why the average man requires more daily calories than the average woman, since on average men will tend to have more muscle.
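To put rough numbers on this, here is a back-of-envelope sketch. It assumes the Mifflin-St Jeor equation as an estimate of resting energy use, and a value of about 4 METs for brisk walking; the example person (70 kg, 170 cm, 40 years old) and the function names are hypothetical, and none of these figures come from the article itself.

```python
def resting_kcal_per_day(weight_kg, height_cm, age_years, is_male):
    """Mifflin-St Jeor estimate of resting energy use (an assumed model)."""
    base = 10 * weight_kg + 6.25 * height_cm - 5 * age_years
    return base + (5 if is_male else -161)


def activity_kcal(met, weight_kg, hours):
    """Approximate gross cost of an activity: 1 MET is roughly 1 kcal per kg per hour."""
    return met * weight_kg * hours


# Hypothetical person: 70 kg, 170 cm, 40-year-old woman
resting = resting_kcal_per_day(70, 170, 40, is_male=False)

# Assumed activity: 30 minutes of brisk walking at about 4 METs
walk = activity_kcal(4.0, 70, 0.5)

print(f"Resting estimate: {resting:.0f} kcal/day")
print(f"30-minute brisk walk: {walk:.0f} kcal")
print(f"Walk as a share of resting use: {walk / resting:.0%}")
```

Under these assumptions, the walk comes to about a tenth of the day's resting energy use, which is the sense in which exercise costs are small next to the metabolic base rate. Raising `weight_kg` raises both numbers, in line with the point about larger bodies.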

And if you do exercise because you want to run out the budget so the body has to “spend” from fat stores?

Good luck, because while it may work in the very short term, the body will quickly adapt, like an accountant seeing your reckless spending and cutting back somewhere else. That’s why in all kinds of exercise except high-intensity interval training, a period of exercise will be followed by a metabolic slump, the body’s “austerity measures”, to balance the books.

You may be wondering: why is it different for HIIT? It’s because it changes things up frequently enough that the body doesn’t get a chance to adapt. To labor the financial metaphor, it involves lying to your accountant, so that the compensation is not made. Congratulations: you’re committing calorie fraud (but it’s good for the body, so hey).

That doesn’t mean other kinds of exercise are useless (or worse, necessarily counterproductive), though! We must simply acknowledge that other forms of exercise are great for various aspects of physical health (strengthening the body, mobilizing blood and lymph, preventing disease, enjoying mental health benefits, etc.) that don’t really affect fat levels much. Those are decided more in the kitchen than the gym, and even in the category of diet, it’s more about what and how and when you eat, rather than how much.

For more information on metabolic balance in the context of exercise, enjoy:

Click Here If The Embedded Video Doesn’t Load Automatically!

Want to learn more?

You might also like to read:

- Are You A Calorie-Burning Machine?

- Burn! How To Boost Your Metabolism

- How To Do HIIT (Without Wrecking Your Body)

- Lose Weight, But Healthily

- Build Muscle (Healthily!)

- How To Gain Weight (Healthily!)

Take care!


-

How do science journalists decide whether a psychology study is worth covering?


Complex research papers and data flood academic journals daily, and science journalists play a pivotal role in disseminating that information to the public. This can be a daunting task, requiring a keen understanding of the subject matter and the ability to translate dense academic language into narratives that resonate with the general public.

Several resources and tip sheets, including the Know Your Research section here at The Journalist’s Resource, aim to help journalists hone their skills in reporting on academic research.

But what factors do science journalists look for to decide whether a social science research study is trustworthy and newsworthy? That’s the question researchers at the University of California, Davis, and the University of Melbourne in Australia examine in a recent study, “How Do Science Journalists Evaluate Psychology Research?” published in September in Advances in Methods and Practices in Psychological Science.

Their online survey of 181 mostly U.S.-based science journalists looked at how and whether they were influenced by four factors in fictitious research summaries: the sample size (number of participants in the study), sample representativeness (whether the participants in the study were from a convenience sample or a more representative sample), the statistical significance level of the result (just barely statistically significant or well below the significance threshold), and the prestige of a researcher’s university.

The researchers found that sample size was the only factor that had a robust influence on journalists’ ratings of how trustworthy and newsworthy a study finding was.

University prestige had no effect, while the effects of sample representativeness and statistical significance were inconclusive.

But there’s nuance to the findings, the authors note.

“I don’t want people to think that science journalists aren’t paying attention to other things, and are only paying attention to sample size,” says Julia Bottesini, an independent researcher, a recent Ph.D. graduate from the Psychology Department at UC Davis, and the first author of the study.

Overall, the results show that “these journalists are doing a very decent job” vetting research findings, Bottesini says.

Also, the findings from the study are not generalizable to all science journalists or other fields of research, the authors note.

“Instead, our conclusions should be circumscribed to U.S.-based science journalists who are at least somewhat familiar with the statistical and replication challenges facing science,” they write. (Over the past decade a series of projects have found that the results of many studies in psychology and other fields can’t be reproduced, leading to what has been called a ‘replication crisis.’)

“This [study] is just one tiny brick in the wall and I hope other people get excited about this topic and do more research on it,” Bottesini says.

More on the study’s findings

The study’s findings can be useful for researchers who want to better understand how science journalists read their research and what kind of intervention — such as teaching journalists about statistics — can help journalists better understand research papers.

“As an academic, I take away the idea that journalists are a great population to try to study because they’re doing something really important and it’s important to know more about what they’re doing,” says Ellen Peters, director of Center for Science Communication Research at the School of Journalism and Communication at the University of Oregon. Peters, who was not involved in the study, is also a psychologist who studies human judgment and decision-making.

Peters says the study was “overall terrific.” She adds that understanding how journalists do their work “is an incredibly important thing to do because journalists are who reach the majority of the U.S. with science news, so understanding how they’re reading some of our scientific studies and then choosing whether to write about them or not is important.”

The study, conducted between December 2020 and March 2021, is based on an online survey of journalists who said they at least sometimes covered science or other topics related to health, medicine, psychology, social sciences, or well-being. They were offered a $25 Amazon gift card as compensation.

Among the participants, 77% were women, 19% were men, 3% were nonbinary and 1% preferred not to say. About 62% said they had studied physical or natural sciences at the undergraduate level, and 24% at the graduate level. Also, 48% reported having a journalism degree. The study did not include the journalists’ news reporting experience level.

Participants were recruited through the professional network of Christie Aschwanden, an independent journalist and consultant on the study, which could be a source of bias, the authors note.

“Although the size of the sample we obtained (N = 181) suggests we were able to collect a range of perspectives, we suspect this sample is biased by an ‘Aschwanden effect’: that science journalists in the same professional network as C. Aschwanden will be more familiar with issues related to the replication crisis in psychology and subsequent methodological reform, a topic C. Aschwanden has covered extensively in her work,” they write.

Participants were randomly presented with eight of 22 one-paragraph fictitious social and personality psychology research summaries with fictitious authors. The summaries are posted on Open Science Framework, a free and open-source project management tool for researchers by the Center for Open Science, with a mission to increase openness, integrity and reproducibility of research.

For instance, one of the vignettes reads:

“Scientists at Harvard University announced today the results of a study exploring whether introspection can improve cooperation. 550 undergraduates at the university were randomly assigned to either do a breathing exercise or reflect on a series of questions designed to promote introspective thoughts for 5 minutes. Participants then engaged in a cooperative decision-making game, where cooperation resulted in better outcomes. People who spent time on introspection performed significantly better at these cooperative games (t (548) = 3.21, p = 0.001). ‘Introspection seems to promote better cooperation between people,’ says Dr. Quinn, the lead author on the paper.”
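For readers who want to see how the statistics in that vignette fit together, here is a minimal sketch that recovers an approximate two-sided p-value from the reported t statistic. It assumes the normal approximation to the t distribution, which is reasonable with 548 degrees of freedom but is not necessarily how the study authors computed their figure; the function name is hypothetical.

```python
import math


def two_sided_p_from_t(t_stat):
    """Two-sided p-value via the normal approximation (suitable for large df only)."""
    # erfc(x / sqrt(2)) equals 2 * (1 - Phi(x)) for the standard normal CDF Phi
    return math.erfc(abs(t_stat) / math.sqrt(2))


p_value = two_sided_p_from_t(3.21)
print(f"Approximate two-sided p-value: {p_value:.4f}")
```

The result is a little above 0.001, which is consistent with the rounded “p = 0.001” in the vignette and well below the conventional 0.05 threshold.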

In addition to answering multiple-choice survey questions, participants were given the opportunity to answer open-ended questions, such as “What characteristics do you [typically] consider when evaluating the trustworthiness of a scientific finding?”

Bottesini says those responses illuminated how science journalists analyze a research study. Participants often mentioned the prestige of the journal in which it was published or whether the study had been peer-reviewed. Many also seemed to value experimental research designs over observational studies.

Considering statistical significance

When it came to considering p-values, “some answers suggested that journalists do take statistical significance into account, but only very few included explanations that suggested they made any distinction between higher or lower p values; instead, most mentions of p values suggest journalists focused on whether the key result was statistically significant,” the authors write.

Also, many participants mentioned that it was very important to talk to outside experts or researchers in the same field to get a better understanding of the finding and whether it could be trusted, the authors write.

“Journalists also expressed that it was important to understand who funded the study and whether the researchers or funders had any conflicts of interest,” they write.

Participants also “indicated that making claims that were calibrated to the evidence was also important and expressed misgivings about studies for which the conclusions do not follow from the evidence,” the authors write.

In response to the open-ended question, “What characteristics do you [typically] consider when evaluating the trustworthiness of a scientific finding?” some journalists wrote they checked whether the study was overstating conclusions or claims. Below are some of their written responses:

- “Is the researcher adamant that this study of 40 college kids is representative? If so, that’s a red flag.”

- “Whether authors make sweeping generalizations based on the study or take a more measured approach to sharing and promoting it.”

- “Another major point for me is how ‘certain’ the scientists appear to be when commenting on their findings. If a researcher makes claims which I consider to be over-the-top about the validity or impact of their findings, I often won’t cover.”

- “I also look at the difference between what an experiment actually shows versus the conclusion researchers draw from it — if there’s a big gap, that’s a huge red flag.”

Peters says the study’s findings show that “not only are journalists smart, but they have also gone out of their way to get educated about things that should matter.”

What other research shows about science journalists

A 2023 study, published in the International Journal of Communication, based on an online survey of 82 U.S. science journalists, aims to understand what they know and think about open-access research, including peer-reviewed journals and articles that don’t have a paywall, and preprints. Data was collected between October 2021 and February 2022. Preprints are scientific studies that have yet to be peer-reviewed and are shared on open repositories such as medRxiv and bioRxiv. The study finds that its respondents “are aware of OA and related issues and make conscious decisions around which OA scholarly articles they use as sources.”

A 2021 study, published in the Journal of Science Communication, looks at the impact of the COVID-19 pandemic on the work of science journalists. Based on an online survey of 633 science journalists from 77 countries, it finds that the pandemic somewhat brought scientists and science journalists closer together. “For most respondents, scientists were more available and more talkative,” the authors write. The pandemic has also provided an opportunity to explain the scientific process to the public, and remind them that “science is not a finished enterprise,” the authors write.

More than a decade ago, a 2008 study, published in PLOS Medicine, and based on an analysis of 500 health news stories, found that “journalists usually fail to discuss costs, the quality of the evidence, the existence of alternative options, and the absolute magnitude of potential benefits and harms,” when reporting on research studies. Giving time to journalists to research and understand the studies, giving them space for publication and broadcasting of the stories, and training them in understanding academic research are some of the solutions to fill the gaps, writes Gary Schwitzer, the study author.

Advice for journalists

We asked Bottesini, Peters, Aschwanden and Tamar Wilner, a postdoctoral fellow at the University of Texas, who was not involved in the study, to share advice for journalists who cover research studies. Wilner is conducting a study on how journalism research informs the practice of journalism. Here are their tips:

1. Examine the study before reporting it.

Does the study claim match the evidence? “One thing that makes me trust the paper more is if their interpretation of the findings is very calibrated to the kind of evidence that they have,” says Bottesini. In other words, if the study makes a claim in its results that’s far-fetched, the authors should present a lot of evidence to back that claim.

Not all surprising results are newsworthy. If you come across a surprising finding from a single study, Peters advises you to step back and remember Carl Sagan’s quote: “Extraordinary claims require extraordinary evidence.”

How transparent are the authors about their data? For instance, are the authors posting information such as their data and the computer codes they use to analyze the data on platforms such as Open Science Framework, AsPredicted, or The Dataverse Project? Some researchers ‘preregister’ their studies, which means they share how they’re planning to analyze the data before they see them. “Transparency doesn’t automatically mean that a study is trustworthy,” but it gives others the chance to double-check the findings, Bottesini says.

Look at the study design. Is it an experimental study or an observational study? Observational studies can show correlations but not causation.

“Observational studies can be very important for suggesting hypotheses and pointing us towards relationships and associations,” Aschwanden says.

Experimental studies can provide stronger evidence toward a cause, but journalists must still be cautious when reporting the results, she advises. “If we end up implying causality, then once it’s published and people see it, it can really take hold,” she says.

Know the difference between preprints and peer-reviewed, published studies. Peer-reviewed papers tend to be of higher quality than those that are not peer-reviewed. Read our tip sheet on the difference between preprints and journal articles.

Beware of predatory journals. Predatory journals are journals that “claim to be legitimate scholarly journals, but misrepresent their publishing practices,” according to a 2020 journal article, published in the journal Toxicologic Pathology, “Predatory Journals: What They Are and How to Avoid Them.”

2. Zoom in on data.

Read the methods section of the study. The methods section of the study usually appears after the introduction and background section. “To me, the methods section is almost the most important part of any scientific paper,” says Aschwanden. “It’s amazing to me how often you read the design and the methods section, and anyone can see that it’s a flawed design. So just giving things a gut-level check can be really important.”

What’s the sample size? Not all good studies have large numbers of participants but pay attention to the claims a study makes with a small sample size. “If you have a small sample, you calibrate your claims to the things you can tell about those people and don’t make big claims based on a little bit of evidence,” says Bottesini.

But also remember that factors such as sample size and p-value are not “as clear cut as some journalists might assume,” says Wilner.
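As a rough illustration of why sample size matters, the sketch below compares the approximate 95% margin of error for a proportion at two sample sizes mentioned in this article: the “40 college kids” from one journalist’s response, and the 550 undergraduates in the example vignette. It assumes a simple random sample and the worst-case proportion of 0.5; the function name is hypothetical, and as Wilner cautions, a real study is rarely this clear-cut.

```python
import math


def margin_of_error(n, proportion=0.5, z=1.96):
    """Approximate 95% margin of error for a proportion from a simple random sample."""
    return z * math.sqrt(proportion * (1 - proportion) / n)


for n in (40, 550):
    print(f"n = {n}: about +/- {margin_of_error(n):.1%}")
```

With 40 participants, the uncertainty is around 15 percentage points; with 550, it is around 4. Note that this only describes sampling error: a large convenience sample can still be unrepresentative, which the formula does not capture.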

How representative of a population is the study sample? “If the study has a non-representative sample of, say, undergraduate students, and they’re making claims about the general population, that’s kind of a red flag,” says Bottesini. Aschwanden points to the acronym WEIRD, which stands for “Western, Educated, Industrialized, Rich, and Democratic,” and is used to highlight a lack of diversity in a sample. Studies based on such samples may not be generalizable to the entire population, she says.

Look at the p-value. Statistical significance is both confusing and controversial, but it’s important to consider. Read our tip sheet, “5 Things Journalists Need to Know About Statistical Significance,” to better understand it.

3. Talk to scientists not involved in the study.

If you’re not sure about the quality of a study, ask for help. “Talk to someone who is an expert in study design or statistics to make sure that [the study authors] use the appropriate statistics and that methods they use are appropriate because it’s amazing to me how often they’re not,” says Aschwanden.

Get an opinion from an outside expert. It’s always a good idea to present the study to other researchers in the field, who have no conflicts of interest and are not involved in the research you’re covering and get their opinion. “Don’t take scientists at their word. Look into it. Ask other scientists, preferably the ones who don’t have a conflict of interest with the research,” says Bottesini.

4. Remember that a single study is simply one piece of a growing body of evidence.

“I have a general rule that a single study doesn’t tell us very much; it just gives us proof of concept,” says Peters. “It gives us interesting ideas. It should be retested. We need an accumulation of evidence.”

Aschwanden says as a practice, she tries to avoid reporting stories about individual studies, with some exceptions such as very large, randomized controlled studies that have been underway for a long time and have a large number of participants. “I don’t want to say you never want to write a single-study story, but it always needs to be placed in the context of the rest of the evidence that we have available,” she says.

Wilner advises journalists to spend some time looking at the scope of research on the study’s specific topic and learn how it has been written about and studied up to that point.

“We would want science journalists to be reporting balance of evidence, and not focusing unduly on the findings that are just in front of them in a most recent study,” Wilner says. “And that’s a very difficult thing to as journalists to do because they’re being asked to make their article very newsy, so it’s a difficult balancing act, but we can try and push journalists to do more of that.”

5. Remind readers that science is always changing.

“Science is always two steps forward, one step back,” says Peters. Give the public a notion of uncertainty, she advises. “This is what we know today. It may change tomorrow, but this is the best science that we know of today.”

Aschwanden echoes the sentiment. “All scientific results are provisional, and we need to keep that in mind,” she says. “It doesn’t mean that we can’t know anything, but it’s very important that we don’t overstate things.”

Authors of a study published in PNAS in January analyzed more than 14,000 psychology papers and found that replication success rates differ widely by psychology subfields. That study also found that papers that could not be replicated received more initial press coverage than those that could.

The authors note that the media “plays a significant role in creating the public’s image of science and democratizing knowledge, but it is often incentivized to report on counterintuitive and eye-catching results.”

Ideally, the news media would have a positive relationship with replication success rates in psychology, the authors of the PNAS study write. “Contrary to this ideal, however, we found a negative association between media coverage of a paper and the paper’s likelihood of replication success,” they write. “Therefore, deciding a paper’s merit based on its media coverage is unwise. It would be valuable for the media to remind the audience that new and novel scientific results are only food for thought before future replication confirms their robustness.”

Additional reading

Uncovering the Research Behaviors of Reporters: A Conceptual Framework for Information Literacy in Journalism
Katerine E. Boss, et al. Journalism & Mass Communication Educator, October 2022.

The Problem with Psychological Research in the Media
Steven Stosny. Psychology Today, September 2022.

Critically Evaluating Claims
Megha Satyanarayana, The Open Notebook, January 2022.

How Should Journalists Report a Scientific Study?
Charles Binkley and Subramaniam Vincent. Markkula Center for Applied Ethics at Santa Clara University, September 2020.

What Journalists Get Wrong About Social Science: Full Responses
Brian Resnick. Vox, January 2016.

From The Journalist’s Resource

8 Ways Journalists Can Access Academic Research for Free

5 Things Journalists Need to Know About Statistical Significance

5 Common Research Designs: A Quick Primer for Journalists

5 Tips for Using PubPeer to Investigate Scientific Research Errors and Misconduct

What’s Standard Deviation? 4 Things Journalists Need to Know

This article first appeared on The Journalist’s Resource and is republished here under a Creative Commons license.


-

When Age Is A Flexible Number


Aging, Counterclockwise!

In the late 1970s, Dr. Ellen Langer hypothesized that physical markers of aging could be affected by psychosomatic means.

Note: psychosomatic does not mean “it’s all in your head”.

Psychosomatic means “your body does what your brain tells it to do, for better or for worse”.

She set about testing that, in what has been referred to since as…

The Counterclockwise Study

A small (n=16) sample of men in their late 70s and early 80s were recruited in what they were told was a study about reminiscing.

Back in the 1970s, it was still standard practice in the field of psychology to outright lie to participants (who in those days were called “subjects”), so this slight obfuscation was a much smaller ethical aberration than in some famous studies of the same era and earlier (cough cough Zimbardo cough Milgram cough).

Anyway, the participants were treated to a week in a 1950s-themed retreat, specifically 1959, a date twenty years prior to the experiment’s date in 1979. The environment was decorated and furnished authentically to the date, down to the food and the available magazines and TV/radio shows; period-typical clothing was also provided, and so forth.

- The control group were told to spend the time reminiscing about 1959

- The experimental group were told to pretend (and maintain the pretense, for the duration) that it really was 1959

The results? On many measures of aging, the experimental group participants became quantifiably younger:

❝The experimental group showed greater improvement in joint flexibility, finger length (their arthritis diminished and they were able to straighten their fingers more), and manual dexterity.

On intelligence tests, 63 percent of the experimental group improved their scores, compared with only 44 percent of the control group. There were also improvements in height, weight, gait, and posture.

Finally, we asked people unaware of the study’s purpose to compare photos taken of the participants at the end of the week with those submitted at the beginning of the study. These objective observers judged that all of the experimental participants looked noticeably younger at the end of the study.❞

Remember, this was after one week.

Her famous study was completed in 1979, and/but not published until eleven years later in 1990, with the innocuous title:

Higher stages of human development: Perspectives on adult growth

You can read about it much more accessibly, and in much more detail, in her book:

Counterclockwise: A Proven Way to Think Yourself Younger and Healthier – by Dr. Ellen Langer

We haven’t reviewed that particular book yet, so here’s Linda Graham’s review, that noted:

❝Langer cites other research that has made similar findings.

In one study, for instance, 650 people were surveyed about their attitudes on aging. Twenty years later, those with a positive attitude with regard to aging had lived seven years longer on average than those with a negative attitude to aging.

(By comparison, researchers estimate that we extend our lives by four years if we lower our blood pressure and reduce our cholesterol.)

In another study, participants read a list of negative words about aging; within 15 minutes, they were walking more slowly than they had before.❞

Read the review in full:

Aging in Reverse: A Review of Counterclockwise

The Counterclockwise study has been repeated since, and/but we are still waiting for the latest (exciting, much larger sample, 90 participants this time) study to be published. The research proposal describes the method in great detail, and you can read that with one click over on PubMed:

It was approved, and has now been completed (as of 2020), but the results have not been published yet; you can see the timeline of how that’s progressing over on ClinicalTrials.gov:

Clinical Trials | Ageing as a Mindset: A Counterclockwise Experiment to Rejuvenate Older Adults

Hopefully it’ll take less time than the eleven years it took for the original study, but in the meantime, there seems to be nothing to lose in doing a little “Citizen Science” for ourselves.

Maybe a week in a 20-years-ago-themed resort (writer’s note: wow, that would only be 2004; that doesn’t feel right; it should surely be at least the 90s!) isn’t a viable option for you, but we’re willing to bet it’s possible to “microdose” on this method. Given that the original study lasted only a week, even just a themed date-night on a regular recurring basis seems like a great option to explore (if you’re not partnered, then indulge yourself however you see fit, in accord with the same premise; a date-night can be with yourself too!).

Just remember the most important take-away though:

Don’t accidentally put yourself in your own control group!

In other words, it’s critically important that for the duration of the exercise, you act and even think as though it is the appropriate date.

If you instead spend your time thinking “wow, I miss the [decade that does it for you]”, you will dodge the benefits, and potentially even make yourself feel (and thus, potentially, if the inverse hypothesis holds true, become) older.

This latter is not just our hypothesis, by the way; there is an established potential for a nocebo effect.

For example, the following study looked at how instructions given in clinical tests can be worded in a way that makes people feel differently about their age, and so affects the results of the mental and/or physical tests then administered:

❝Our results seem to suggest how manipulations by instructions appeared to be more largely used and capable of producing more clear performance variations on cognitive, memory, and physical tasks.

Age-related stereotypes showed potentially stronger effects when they are negative, implicit, and temporally closer to the test of performance.❞

(and yes, that’s the same Dr. Francesco Pagnini whose name you saw atop the other study we cited above, with the 90 participants recreating the Counterclockwise study)

Want to know more about [the hard science of] psychosomatic health?

Check out Dr. Langer’s other book, which we reviewed recently:

The Mindful Body: Thinking Our Way to Chronic Health – by Dr. Ellen Langer

Enjoy!


Fast Diet, Fast Exercise, Fast Improvements

Diet & Exercise, Optimized

This is Dr. Michael Mosley. He originally trained in medicine with the intention of becoming a psychiatrist, but he grew disillusioned with psychiatry as it was practised, and ended up pivoting completely into being a health educator, in which field he won the British Medical Association’s Medical Journalist of the Year Award.

He also died under tragic circumstances very recently (he and his wife were vacationing in Greece, he went missing while out for a short walk on the 5th of June, appears to have got lost, and his body was found 100 yards from a restaurant on the 9th). All strength and comfort to his family; we offer our small tribute here today in his honor.

The “weekend warrior” of fasting

Dr. Mosley was an enjoyer (and proponent) of intermittent fasting, which we’ve written about before:

Fasting Without Crashing? We Sort The Science From The Hype

However, while most attention is generally given to the 16:8 method of intermittent fasting (fast for 16 hours, eat during an 8-hour window, repeat), Dr. Mosley preferred the 5:2 method (which generally means: eat at will for 5 days, then eat a reduced-calorie diet for the other 2 days).

Specifically, he advocated capping intake at 800 kcal on each of the two “weekend” days (which don’t have to be the actual weekend).

He also tweaked the “eat at will for 5 days” part, to “eat as much as you like of a low-carb Mediterranean diet for 5 days”:

❝The “New 5:2” approach involves restricting calories to 800 on fasting days, then eating a healthy lower carb, Mediterranean-style diet for the rest of the week.

The beauty of intermittent fasting means that as your insulin sensitivity returns, you will feel fuller for longer on smaller portions. This is why, on non-fasting days, you do not have to count calories, just eat sensible portions. By maintaining a Mediterranean-style diet, you will consume all of the healthy fats, protein, fibre and fresh plant-based food that your body needs.❞

Read more: The Fast 800 | The New 5:2

And about that tweaked Mediterranean Diet? You might also want to check out:

Four Ways To Upgrade The Mediterranean Diet

Knowledge is power

Dr. Mosley encouraged the use of genotyping tests for personal health, not just to know about risk factors, but also to know about things such as, for example, whether you have the gene that makes you unable to gain significant improvements in aerobic fitness by following endurance training programs:

The Real Benefit Of Genetic Testing

On which note, he himself was not a fan of exercise, but recognised its importance, and instead sought to minimize the amount of exercise he needed to do, by practising High Intensity Interval Training. We reviewed a book of his (teamed up with a sports scientist) not long back; here it is:

Fast Exercise: The Simple Secret of High Intensity Training – by Dr. Michael Mosley & Peta Bee

You can also read our own article on the topic, here:

How To Do HIIT (Without Wrecking Your Body)

Just One Thing…

As well as his many educational TV shows, Dr. Mosley was also known for his radio show, “Just One Thing”, and a little while ago we reviewed his book, effectively a compilation of these:

Just One Thing: How Simple Changes Can Transform Your Life – by Dr. Michael Mosley

Enjoy!


Black Beans vs Pinto Beans – Which is Healthier?

Our Verdict

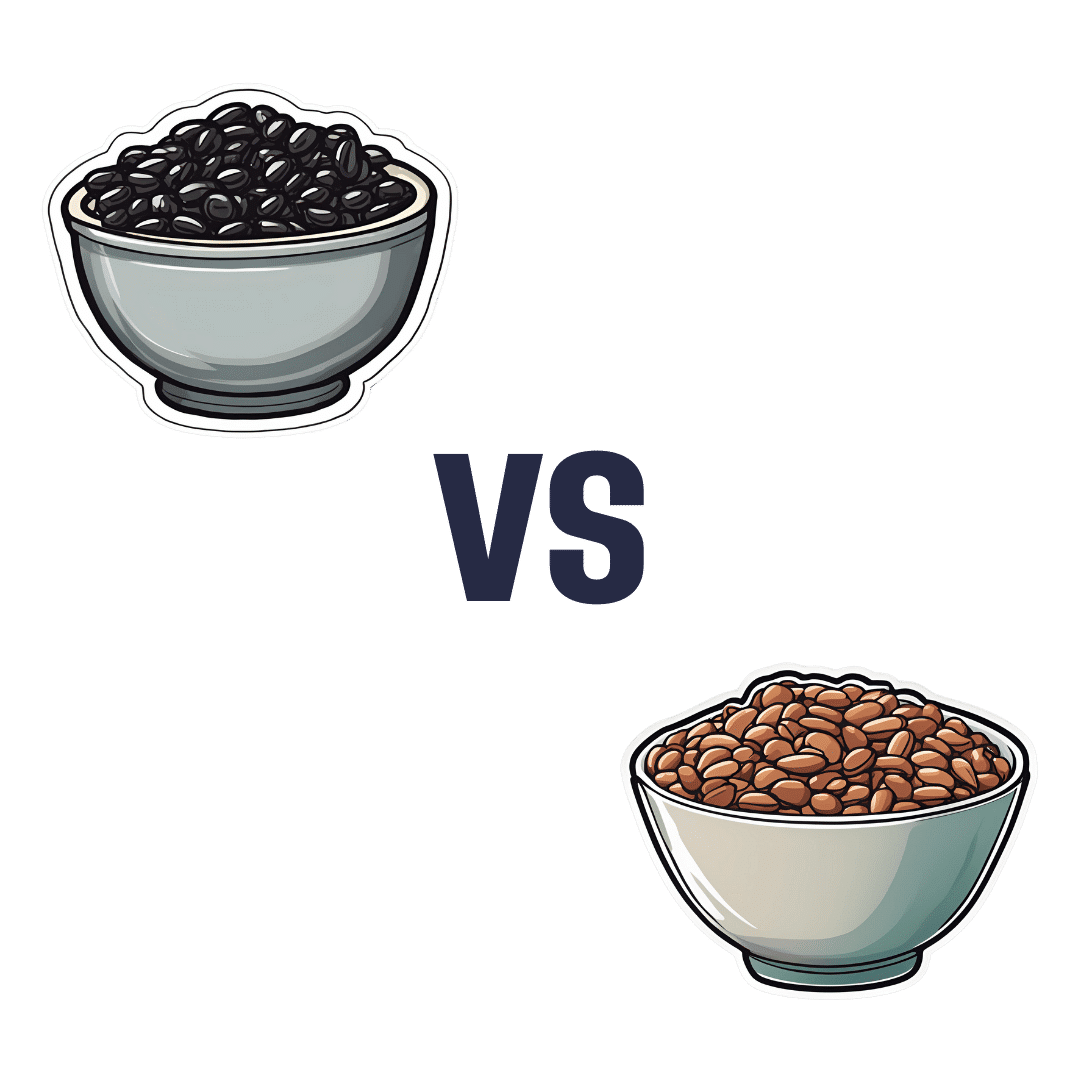

When comparing black beans to pinto beans, we picked the pinto beans.

Why?

Both of these beans have won all their previous comparisons, so it’s no surprise that this one was very close. Despite their different appearance, taste, and texture, their nutritional profiles are almost identical:

In terms of macros, pinto beans have a tiny bit more protein, carbs, and fiber. So, a nominal win for pinto beans, but again, the difference is very slight.

When it comes to vitamins, black beans have more of vitamins A, B1, B3, and B5, while pinto beans have more of vitamins B2, B6, B9, C, E, K, and choline. Superficially, this is again a nominal win for pinto beans, but in most cases the differences are so slight as to be potentially the product of decimal place rounding.

In the category of minerals, black beans have more calcium, copper, iron, and phosphorus, while pinto beans have more magnesium, manganese, selenium, and zinc. That’s a 4:4 tie, but the only one with a meaningful margin of difference is selenium (of which pinto beans have 4x more), so we’re calling this one a very modest win for pinto beans.

All in all, adding these up makes for a “if we really are pressed to choose” win for pinto beans, but honestly, enjoy either in accordance with your preference (this writer prefers black beans!), or better yet, both.

Want to learn more?

You might like to read:

What’s Your Plant Diversity Score?

Take care!
