Is chocolate milk a good recovery drink after a workout? A dietitian reviews the evidence

10almonds is reader-supported. We may, at no cost to you, receive a portion of sales if you purchase a product through a link in this article.

Whether you enjoy chocolate milk regularly, as a weekend treat, or as an occasional dose of childhood nostalgia, it probably wouldn’t be the first option you think of for post-workout recovery.

Unless you’re on TikTok, perhaps. According to many people on the social media platform, chocolate milk is not only delicious, but it offers benefits comparable to sports drinks after a workout.

So is there any evidence to support this? Let’s take a look.

Rehydrating after a workout is important

Water accounts for somewhere between 50% and 60% of our body weight. Water has many important functions in the body, including helping to keep our body at the right temperature through sweating.

We lose water naturally from our bodies when we sweat, as well as through our breathing and when we go to the toilet. So it’s important to stay hydrated to replenish the water we lose.

When we don’t, we become dehydrated, which can put a strain on our bodies. Signs and symptoms of dehydration can range from thirst and dizziness to low blood pressure and confusion.

Athletes, because of their higher levels of exertion, lose more water through sweating and from respiration (when their breathing rate gets faster). If they’re training or competing in hot or humid environments they will sweat even more.

Dehydration impairs athletes’ performance and, as for all of us, can affect their health.

So finding ways to ensure athletes rehydrate quickly during and after they train or compete is important. Fortunately, sports scientists and dietitians have done research looking at the composition of different fluids to understand which ones rehydrate athletes most effectively.

The beverage hydration index

The best hydrating drinks are those the body retains the most of once they’ve been consumed. By doing studies where they give people different drinks in standardised conditions, scientists have been able to determine how various options stack up.

To this end, they’ve developed something called the beverage hydration index, which measures to what degree different fluids hydrate a person compared to still water.

According to this index, beverages with similar fluid retention to still water include sparkling water, sports drinks, cola, diet cola, tea, coffee, and beer below 4% alcohol. That said, alcohol is probably best avoided when recovering from exercise.

Beverages with superior fluid retention to still water include milk (both full-fat and skim), soy milk, orange juice and oral rehydration solutions.

This body of research indicates that when it comes to rehydration after exercise, unflavoured milk (full fat, skim or soy) is better than sports drinks.

But what about chocolate milk?

A small study looked at the effects of chocolate milk compared to plain milk on rehydration and exercise performance in futsal players (futsal is similar to soccer but played on a court indoors). The researchers found no difference in rehydration between the two. There’s no other published research to my knowledge looking at how chocolate milk compares to regular milk for rehydration during or after exercise.

But rehydration isn’t the only thing athletes look for in sports drinks. In the same study, drinking chocolate milk after play (referred to as the recovery period) increased the time it took for the futsal players to become exhausted in further exercise (a shuttle run test) four hours later.

This was also shown in a review of several clinical trials. The analysis found that, compared to different placebos (such as water) or other drinks containing fat, protein and carbohydrates, chocolate milk lengthened the time to exhaustion during exercise.

What’s in chocolate milk?

Milk contains protein, carbohydrates and electrolytes, each of which can affect hydration, performance, or both.

Protein is important for building muscle, which is beneficial for performance. The electrolytes in milk (including sodium and potassium) help to replace electrolytes lost through sweating, so can also be good for performance, and aid hydration.

Compared to regular milk, chocolate milk contains added sugar. This provides extra carbohydrates, which are likewise beneficial for performance. Carbohydrates provide an immediate source of energy for athletes’ working muscles, where they’re stored as glycogen. This might contribute to the edge chocolate milk appears to have over plain milk in terms of athletic endurance.

Coffee-flavoured milk has an additional advantage. It contains caffeine, which can improve athletic performance by reducing the perceived effort that goes into exercise.

One study showed that a frappe-type drink prepared with filtered coffee, skim milk and sugar led to better muscle glycogen levels after exercise compared to plain milk with an equivalent amount of sugar added.

So what’s the verdict?

Evidence shows chocolate milk can rehydrate better than water or sports drinks after exercise. But there isn’t evidence to suggest it can rehydrate better than plain milk. Chocolate milk does appear to improve athletic endurance compared to plain milk though.

Ultimately, the best drink for athletes to consume to rehydrate is the one they’re most likely to drink.

While many TikTok trends are not based on evidence, it seems chocolate milk could actually be a good option for recovery from exercise. And it will be cheaper than specialised sports nutrition products. You can buy different brands from the supermarket or make your own at home with a drinking chocolate powder.

This doesn’t mean everyone should look to chocolate milk when they’re feeling thirsty. Chocolate milk does have more calories than plain milk and many other drinks because of the added sugar. For most of us, chocolate milk may be best enjoyed as an occasional treat.

Evangeline Mantzioris, Program Director of Nutrition and Food Sciences, Accredited Practising Dietitian, University of South Australia

This article is republished from The Conversation under a Creative Commons license. Read the original article.

Don’t Forget…

Did you arrive here from our newsletter? Don’t forget to return to the email to continue learning!


Learn to Age Gracefully

Join the 98k+ American women taking control of their health & aging with our 100% free (and fun!) daily emails:


Cooling Bulgarian Tarator


The “Bulgarian” qualifier is important here because the name “tarator” is used to refer to several different dishes from nearby-ish countries, and they aren’t the same. Today’s dish (a very healthy and deliciously cooling cucumber soup) isn’t well-known outside of Bulgaria, but it should be, and with your help we can share it around the world. It’s super-easy and takes only about 10 minutes to prepare:

You will need

- 1 large cucumber, cut into small (¼” x ¼”) cubes or small (1″ x ⅛”) batons (the size is important; any smaller and we lose texture; any larger and we lose the balance of the soup, and also make it very different to eat with a spoon)

- 2 cups plain unsweetened yogurt (your preference what kind; live-cultured of some kind is best, and yes, vegan is fine too)

- 1½ cups water, chilled but not icy (fridge-temperature is great)

- ½ cup chopped walnuts (substitutions are not advised; omit if allergic)

- ½ bulb garlic, minced

- 3 tbsp fresh dill, chopped

- 2 tbsp extra virgin olive oil

- 1 tsp black pepper, coarse ground

- ½ tsp MSG* or 1 tsp low-sodium salt

Method

(we suggest you read everything at least once before doing anything)

1) Mix the cucumber, garlic, 2 tbsp of the dill, oil, MSG-or-salt and pepper in a big bowl

2) Add the yogurt and mix it in too

3) Add the cold water slowly and stir thoroughly; it may take a minute to achieve smooth consistency of the liquid—it should be creamy but thin, and definitely shouldn’t stand up by itself

4) Top with the chopped nuts, and the other tbsp of dill as a garnish

5) Serve immediately, or chill in the fridge until ready to serve. It’s perfect as a breakfast or a light lunch, by the way.

Enjoy!

Want to learn more?

For those interested in some of the science of what we have going on today:

- How To Really Look After Your Joints ← this is about how cucumber has phytochemicals that outperform glucosamine and chondroitin by 200%, at 1/135th of the dose

- Making Friends With Your Gut (You Can Thank Us Later)

- Is Dairy Scary? ← short answer in terms of human health is “not if it’s fermented”

- Why You Should Diversify Your Nuts!

- The Many Health Benefits Of Garlic

- Is “Extra Virgin” Worth It?

- Black Pepper’s Impressive Anti-Cancer Arsenal (And More)

- Monosodium Glutamate: Sinless Flavor-Enhancer Or Terrible Health Risk? ← *for those who are worried about the health aspects of MSG; it is healthier and safer than table salt

Take care!


I’ve been diagnosed with cancer. How do I tell my children?


With around one in 50 adults diagnosed with cancer each year, many people are faced with the difficult task of sharing the news of their diagnosis with their loved ones. Parents with cancer may be most worried about telling their children.

It’s best to give children factual and age-appropriate information, so children don’t create their own explanations or blame themselves. Over time, supportive family relationships and open communication help children adjust to their parent’s diagnosis and treatment.

It’s natural to feel you don’t have the skills or knowledge to talk with your children about cancer. But preparing for the conversation can improve your confidence.

Preparing for the conversation

Choose a suitable time, and a place where your children feel comfortable. Turn off distractions such as screens and phones.

For teenagers, who can find face-to-face conversations confronting, think about talking while you are going for a walk.

Consider if you will tell all children at once or separately. Will you be the only adult present, or will having another adult close to your child be helpful? Another adult might give your children a person they can talk to later, especially to answer questions they might be worried about asking you.

Finally, plan what to do after the conversation, like doing an activity with them that they enjoy. Older children and teenagers might want some time alone to digest the news, but you can suggest things you know they like to do to relax.

Also consider what you might need to support yourself.

Preparing the words

Parents might be worried about the best words or language to use to make sure the explanations are at a level their child understands. Make a plan for what you will say and take notes to stay on track.

The toughest part is likely to be telling your children that you have cancer. It can help to practise saying those words aloud.

Ask family and friends for their feedback on what you want to say. Make use of guides by the Cancer Council, which provide age-appropriate wording for explaining medical terms like “cancer”, “chemotherapy” and “tumour”.

Having the conversation

Being open, honest and factual is important. Consider the balance between being too vague, and providing too much information. The amount and type of information you give will be based on their age and previous experiences with illness.

Remember, if things don’t go as planned, you can always try again later.

Start by telling your children the news in a few short sentences, describing what you know about the diagnosis in language suitable for their age. Generally, this information will include the name of the cancer, the area of the body affected and what will be involved in treatment.

Let them know what to expect in the coming weeks and months. Balance hope with reality. For example:

The doctors will do everything they can to help me get well. But it is going to be a long road, and the treatments will make me quite sick.

Check what your child knows about cancer. Young children may not know much about cancer, while primary school-aged children are starting to understand that it is a serious illness. Young children may worry about becoming unwell themselves, or other loved ones becoming sick.

Older children and teenagers may have experiences with cancer through other family members, friends at school or social media.

This process allows you to correct any misconceptions and provides opportunities for them to ask questions. Regardless of their level of knowledge, it is important to reassure them that the cancer is not their fault.

Ask them if there is anything they want to know or say. Talk to them about what will stay the same as well as what may change. For example:

You can still do gymnastics, but sometimes Kate’s mum will have to pick you up if I am having treatment.

If you can’t answer their questions, be OK with saying “I’m not sure”, or “I will try to find out”.

Finally, tell children you love them and offer them comfort.

How might they respond?

Be prepared for a range of different responses. Some might be distressed and cry, others might be angry, and some might not seem upset at all. This might be due to shock, or a sign they need time to process the news. It also might mean they are trying to be brave because they don’t want to upset you.

Children’s reactions will change over time as they come to terms with the news and process the information. They might seem like they are happy and coping well, then be teary and clingy, or angry and irritable.

Older children and teenagers may ask if they can tell their friends and family about what is happening. It may be useful to come together as a family to discuss how to inform friends and family.

What’s next?

Consider the conversation the first of many ongoing discussions. Let children know they can talk to you and ask questions.

Resources might also help; for example, The Cancer Council’s app for children and teenagers and Redkite’s library of free books for families affected by cancer.

If you or other adults involved in the children’s lives are concerned about how they are coping, speak to your GP or treating specialist about options for psychological support.

Cassy Dittman, Senior Lecturer/Head of Course (Undergraduate Psychology), Research Fellow, Manna Institute, CQUniversity Australia; Govind Krishnamoorthy, Senior Lecturer, School of Psychology and Wellbeing, Post Doctoral Fellow, Manna Institute, University of Southern Queensland, and Marg Rogers, Senior Lecturer, Early Childhood Education; Post Doctoral Fellow, Manna Institute, University of New England

This article is republished from The Conversation under a Creative Commons license. Read the original article.


What’s The Difference Between Minoxidil For Men vs For Women?


It’s Q&A Day at 10almonds!

Have a question or a request? We love to hear from you!

In cases where we’ve already covered something, we might link to what we wrote before, but will always be happy to revisit any of our topics again in the future too—there’s always more to say!

As ever: if the question/request can be answered briefly, we’ll do it here in our Q&A Thursday edition. If not, we’ll make a main feature of it shortly afterwards!

So, no question/request too big or small 😎

❝I’m confused, does minoxidil work the same for women and for men? The label on the minoxidil I was looking at says it is only for men❞

Great question!

Simple answer: yes, it works (or not, as the case may be for some people, more on that later) exactly the same for men and women.

You may be wondering: what, then, is the difference between minoxidil for men and minoxidil for women?

And the answer is: the packaging/marketing. That’s literally it.

It’s like with razors: there are razors marketed to men and razors marketed to women, and both come with advertising/marketing promising to enhance your masculine/feminine appearance (as applicable), but at the end of the day, in both cases they’re just sharp steel blades that cut through hairs as closely as possible to the skin. The sharp steel neither knows nor cares about your gender.

When it comes to minoxidil, in both cases the active ingredient is indeed minoxidil, usually at 2% or 5% strength (though other options exist, and all these get marketed to men and women), and in both cases it works in the same ways, by:

- dilating the blood vessels that feed the hair follicles and thus allowing them to perform better

- kicking the follicles into anagen (growth phase) and keeping them there for longer

Note: this is why we mentioned that it won’t work for all people, and it’s because (regardless of sex/gender), it cannot do those things for your hair follicles if you do not have hair follicles to treat. In the case of someone who has had hair loss for a long time, sometimes there will not be enough living follicles remaining to do anything useful with. As a general rule of thumb, provided you have some hairs there (even if they are little downy baby hairs), they can usually be coaxed back to full life.

In both cases, it’s for treating “pattern hair loss”, the pattern being “male pattern” or “female pattern”, respectively, but in both cases it’s androgenetic alopecia (AGA), caused by the corresponding genetic factors and hormone-mediated gene expression. The physical pattern is therefore usually a little different for men and women; that’s because of the “hormone-mediated gene expression”, or to put it into lay terms, “the hormones tell the body which genes to turn on and off”.

Fun fact: it’s the same resultant phenotype as for PCOS, though usually occurring at different stages in life; PCOS earlier and AGA later—sometimes people (including people with both ovaries and hair) can get one without the other, though, as there may be other considerations going on besides the genetic and hormonal.

Limitation: if the hair loss is for reasons other than androgenetic alopecia, it’s unlikely to work. In fact, it is usually flat-out stated that it won’t work, but since one of the common listed side effects of minoxidil is “hair growth in other places”, it seems fair to say that the scalp is not really the only place it can cause hair to grow.

Want to know more?

You can read about the science of various pharmaceutical options (including minoxidil) here:

Hair-Loss Remedies, By Science ← this also goes more into the pros and cons of minoxidil than we have today, so if you’re considering minoxidil, you might want to read this first, to make the most informed decision.

And if you want to be a bit less pharmaceutical about it:

Take care!


Walnut, Apricot, & Sage Nut Roast


It’s important to have at least one good nut roast recipe in your repertoire. It’s a great way to make a good dish out of odds and ends you have in the house, and done well, it’s not only filling and nutritious, but a tasty treat too. As everyone knows, a badly made one can be unfortunate, which makes this the perfect way to show off your skills!

You will need

- 1 cup walnuts

- ½ cup almonds

- ¼ cup whole mixed seeds (chia, pumpkin, & poppy are great)

- ¼ cup ground flax (also called flax meal)

- 1 medium onion, finely chopped

- 1 large carrot, grated

- 4 oz dried apricots, chopped

- 3 oz mushrooms, chopped

- 1 oz dried goji berries

- ½ bulb garlic, crushed

- 2 tbsp fresh sage, chopped

- 1 tbsp nutritional yeast

- 2 tsp dried rosemary

- 2 tsp dried thyme

- 2 tsp black pepper, coarse ground

- 1 tsp yeast extract (even if you don’t like it; trust us; it will work) dissolved in ¼ cup hot water

- ½ tsp MSG or 1 tsp low-sodium salt

- Extra virgin olive oil

Method

(we suggest you read everything at least once before doing anything)

1) Preheat the oven to 350℉ / 180℃, and line a 2 lb loaf tin with baking paper.

2) Heat some oil in a skillet over a moderate heat, and fry the onion for a few minutes until translucent. Add the garlic, carrot, and mushrooms, cooking for another 5 minutes, stirring well. Set aside to cool a little once done.

3) Process the nuts in a food processor, pulsing until they are well-chopped but not so much that they turn into flour.

4) Combine the nuts, vegetables, and all the other ingredients in a big bowl, and mix thoroughly. If it doesn’t have enough structural integrity to be thick and sticky and somewhat standing up by itself if you shape it, add more ground flax. If it is too dry, add a little water but be sparing.

5) Spoon the mixture into the loaf tin, press down well (or else it will break upon removal), cover with foil and bake for 30 minutes. Remove the foil, and bake for a further 15 minutes, until firm and golden. When done, allow it to rest in the tin for a further 15 minutes, before turning it out.

6) Serve, as part of a roast dinner (roast potatoes, vegetables, gravy, etc).

Enjoy!

Want to learn more?

For those interested in some of the science of what we have going on today:

- Why You Should Diversify Your Nuts!

- Chia Seeds vs Pumpkin Seeds – Which is Healthier?

- Apricots vs Peaches – Which is Healthier?

- Goji Berries: Which Benefits Do They Really Have?

- Ergothioneine: “The Longevity Vitamin” (That’s Not A Vitamin)

Take care!


Over-50s Physio: What My 5 Oldest Patients (Average Age 92) Do Right


Oftentimes, people of particularly advanced years are asked the secret to their longevity, and the answers aren’t always helpful, because they don’t actually know and ascribe it to some random thing. Will Harlow, the over-50s specialist physio, talks about the top 5 science-based things his 5 oldest patients do that enhance the healthy longevity they are enjoying:

The Top 5’s Top 5

Here’s what they’re doing right:

Daily physical activity: all five patients maintain a consistent habit of daily exercise, which includes activities like exercise classes, home workouts, playing golf, or taking daily walks. They prioritize movement even when it’s difficult, rarely skipping a day unless something serious happens. A major motivator is the fear of losing mobility, as they have seen spouses, friends, or family members stop exercising and never start again.

Stay curious: a shared trait among the patients was their curiosity and eagerness to learn. They enjoy meeting new people, exploring new experiences, and taking on new challenges. Two of them attended the University of the Third Age to learn new skills, while another started playing bridge as a new hobby. The remaining two have recently made new friends. They all maintain a playful attitude, a good sense of humor, and aren’t afraid to fail or laugh at themselves.

Prioritize sleep (but not too much): the patients each average seven hours of sleep per night, aligning with research suggesting that 7–9 hours of sleep is ideal for health. They maintain consistent sleep and wake-up times, which contributes to their well-being. While they allow themselves short naps when needed, they avoid long afternoon naps to avoid disrupting their sleep patterns.

Spend time in nature: spending time outdoors is a priority for all five individuals. Whether through walking, gardening, or simply sitting on a park bench, they make it a habit to connect with nature. This aligns with studies showing that time spent in natural environments, especially near water, significantly reduces stress. When water isn’t accessible, green spaces still provide a beneficial boost to mental health.

Stick to a routine: the patients all value simple daily routines, such as enjoying an evening cup of tea, taking a daily walk, or committing to small gardening tasks. These routines offer mental and physical grounding, providing stability even when life becomes difficult. They emphasized the importance of keeping routines simple and manageable, so that they could stick to them regardless of life’s challenges.

For more on each of these, enjoy:


Want to learn more?

You might also like to read:

Top 8 Habits Of The Top 1% Healthiest Over-50s ← another approach to the same question, this time with a larger sample size, and/but many younger (than 90s) respondents.

Take care!


Studies of Parkinson’s disease have long overlooked Pacific populations – our work shows why that must change


A form of Parkinson’s disease caused by mutations in a gene known as PINK1 has long been labelled rare. But our research shows it’s anything but – at least for some populations.

Our meta-analysis revealed that people in specific Polynesian communities have a much higher rate of PINK1-linked Parkinson’s than expected. This finding reshapes not only our understanding of who is most at risk, but also how soon symptoms may appear and what that might mean for treatment and testing.

Parkinson’s disease is often thought of as a single condition. In reality, it is better understood as a group of syndromes caused by different factors – genetic, environmental or a combination of both.

These varying causes lead to differences in disease patterns, progression and subsequent diagnosis. Recognising this distinction is crucial as it paves the way for targeted interventions and may even help prevent the disease altogether.

Why we focus on PINK1-linked Parkinson’s

We became interested in this gene after a 2021 study highlighted five people of Samoan and Tongan descent living in New Zealand who shared the same PINK1 mutation.

Previously, this mutation had been spotted only in a few more distant places: Malaysia, Guam and the Philippines. The fact it appeared in people from Samoan and Tongan backgrounds suggested a historical connection dating back to early Polynesian migrations.

One person in 1,300 West Polynesians carries this mutation. This is a frequency well above what scientists usually classify as rare (below one in 2,200). This discovery means we may be overlooking entire communities in Parkinson’s research if we continue to assume PINK1-linked cases are uncommon.

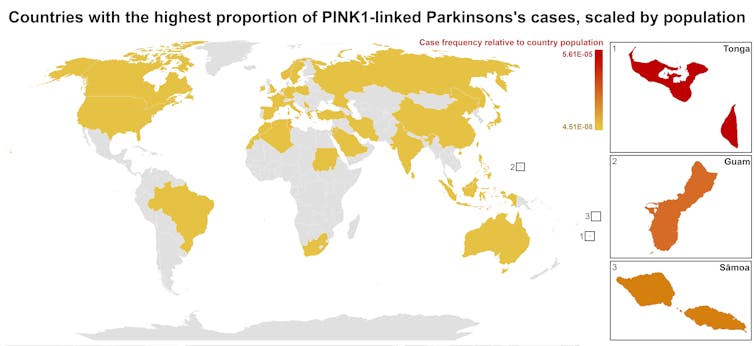

This world map shows people in some Polynesian communities have a much higher rate of PINK1-linked Parkinson’s than the global population. (Eden Yin, CC BY-SA)

Traditional understanding says PINK1-linked Parkinson’s is both rare and typically strikes younger people, mostly in their 30s or 40s, if they inherit two faulty copies of the gene. In other words, it’s considered a recessive condition, needing two matching puzzle pieces before the disease can unfold.

Our work challenges this view. We show that even one defective copy of the PINK1 gene can cause Parkinson’s, with an average onset age of 43, much earlier than the typical onset after 65. That’s a significant departure from the standard belief that only people with two defective gene copies are at risk.

Why this matters for people with the disease

It’s not just genetics that challenge long-held views. Historically, PINK1-linked Parkinson’s was thought to lack some of the classic features of the disease, such as toxic clumps of alpha-synuclein protein.

In typical Parkinson’s, alpha-synuclein builds up in the brain, forming sticky clumps known as Lewy bodies. Our results, contrary to prior beliefs, show that alpha-synuclein pathology is present in 87.5% of PINK1 cases. This finding opens up a promising new avenue for future treatment development.

The biggest concern is early onset. PINK1-linked Parkinson’s can begin as early as 11 years old, although a more common starting point is around the mid-30s. This early onset means living longer with the disease, which can profoundly affect education, work opportunities and family life.

Current treatments (such as levodopa, a precursor of dopamine) help manage symptoms, but they’re not designed to address the root cause. If we know someone has a PINK1 mutation, scientists and clinicians can explore therapies for specific genetic pathways, potentially delivering relief beyond symptom management.

Sex differences add a layer of complexity

In Parkinson’s, generally, men are at higher risk and tend to develop symptoms earlier. However, our findings suggest the opposite pattern for PINK1-linked cases. Particularly, women with two defective copies of the gene experience onset earlier than men.

This highlights the need to consider sex-related factors in Parkinson’s research. Overlooking them risks missing key elements of the disease.

Genetic testing could be a game-changer for PINK1-linked Parkinson’s. Because it often appears earlier, doctors may not recognise it immediately, especially if they are more familiar with the common, later-onset form of Parkinson’s.

Early genetic testing could lead to a faster, more accurate diagnosis, allowing treatment to begin when interventions are most effective. It would help families understand how the disease is inherited, enabling relatives to get tested.

In some cases, where appropriate and culturally acceptable, embryo screening may be considered to prevent the passing of the faulty gene.

Knowing you have a PINK1 mutation could also make finding the right treatment more efficient. Instead of a lengthy trial-and-error process with different medications, doctors could use emerging therapies designed to target the underlying PINK1 mutation rather than relying on general Parkinson’s treatments meant for the broader population.

Addressing research gaps

These findings underscore how crucial it is to include diverse populations in health research.

Many communities, such as those in Samoa, Tonga and other Pacific nations, have had little to no involvement in global Parkinson’s genetics studies. This has created gaps in knowledge and real-world consequences for people who may not receive timely or accurate diagnoses.

Researchers, funding bodies and policymakers must prioritise projects beyond the usual focus on European or industrialised countries to ensure research findings and treatments are relevant to all affected populations.

To better diagnose and treat Parkinson’s, we need a more inclusive approach. Recognising that PINK1-linked Parkinson’s is not as rare as previously thought – and that genetics, sex differences and cultural factors all play a role – allows us to improve care for everyone.

By expanding genetic testing, refining treatments and ensuring research reflects the full spectrum of Parkinson’s, we can move closer to more precise diagnoses, targeted therapies and better support systems for all.

Victor Dieriks, Research Fellow in Health Sciences, University of Auckland, Waipapa Taumata Rau and Eden Paige Yin, PhD candidate in Health Sciences, University of Auckland, Waipapa Taumata Rau

This article is republished from The Conversation under a Creative Commons license. Read the original article.
