Walden Farms Caesar Dressing vs. Primal Kitchen Caesar Dressing – Which is Healthier?

10almonds is reader-supported. We may, at no cost to you, receive a portion of sales if you purchase a product through a link in this article.

Our Verdict

When comparing Walden Farms Caesar Dressing to Primal Kitchen Caesar Dressing, we picked the Primal Kitchen.

Why?

As you can see from the front label, the Walden Farms product has 0 net carbs, 0 calories, and 0 fat. In fact, its ingredients list begins:

Water, white distilled vinegar, erythritol, corn fiber, salt, microcrystalline cellulose, xanthan gum, titanium dioxide (color)

…before it gets to something interesting (garlic purée), by which point the amount must be minuscule.

The Primal Kitchen product, meanwhile, has 140 calories per serving and 15g fat (of which, 1.5g is saturated). However! The ingredients list this time begins:

Avocado oil, water, organic coconut aminos (organic coconut sap, sea salt), organic apple cider vinegar, organic distilled vinegar, mushroom extract, organic gum acacia, organic guar gum

…before it too gets to garlic, which this time, by the way, is organic roasted garlic.

In case you’re wondering about the salt content in both, they add up to 190mg for the Walden Farms product, and 240mg for the Primal Kitchen product. We don’t think that the extra 50mg (out of a daily allowance of 2300–5000mg, depending on whom you ask) is worthy of note.

In short, the Walden Farms product is made of mostly additives of various kinds, whereas the Primal Kitchen product is made of mostly healthful ingredients.

So, the calories and fat are nothing to fear.

For this reason, we chose the product with more healthful ingredients—but we acknowledge that if you are specifically trying to keep your calories down, then the Walden Farms product may be a valid choice.

Read more:

• Can Saturated Fats Be Healthy?

• Caloric Restriction with Optimal Nutrition

Don’t Forget…

Did you arrive here from our newsletter? Don’t forget to return to the email to continue learning!

Recommended

Learn to Age Gracefully

Join the 98k+ American women taking control of their health & aging with our 100% free (and fun!) daily emails:

-

Gentler Hair Health Options


Hair, Gently

We have previously talked about the medicinal options for combatting the thinning hair that comes with age, especially for men but also for a lot of women. You can read about those medicinal options here:

Hair-Loss Remedies, By Science

We also did a whole supplement spotlight research review for saw palmetto! You can read about how that might help you keep your hair present and correct, here:

One Man’s Saw Palmetto Is Another Woman’s Serenoa Repens

Today we’re going to talk about options that are less “heavy guns”, but still very useful.

Supplementation

First, the obvious. Taking vitamins and minerals, especially biotin, can help a lot. This writer takes 10,000µg (that’s micrograms, not milligrams!) biotin gummies, similar to this example product on Amazon (except mine also has other vitamins and minerals in, but the exact product doesn’t seem to be available on Amazon).

When thinking “what vitamins and minerals help hair?”, honestly, it’s most of them. So, focus on the ones that count for the most (usually: biotin and zinc), and then cover your bases for the rest with good diet and additional supplementation if you wish.

Caffeine (topical)

It may feel silly, giving one’s hair a stimulant, but topical caffeine application really does work to stimulate hair growth. And not “just a little help”, either:

❝Specifically, 0.2% topical caffeine-based solutions are typically safe with very minimal adverse effects for long-term treatment of AGA, and they are not inferior to topical 5% minoxidil therapy❞

(AGA = Androgenic Alopecia)

Argan oil

As with coconut oil, argan oil is great on hair. It won’t do a thing to improve hair growth or decrease hair shedding, but it will help your hair stay moisturized and thus reduce breakage. That may not be relevant for everyone, but for those of us with hair long enough to brush, it’s important.

Bonus: get an argan oil based hair serum that also contains keratin (the protein used to make hair), as this helps strengthen the hair too.

Here’s an example product on Amazon

Silk pillowcases

Or a silk hair bonnet to sleep in! They both do the same thing, which is to prevent damage to the hair during sleep by reducing the friction it may experience when moving or turning against the pillow.

- Pros of the bonnet: if you have lots of hair and a partner in bed with you, your hair need not be in their face, and you also won’t get it caught under you or them.

- Pros of the pillowcase: you don’t have to wear a bonnet

Both are also used widely by people without hair loss issues, but with easily damaged and/or tangled hair. Black people, especially those with 3C or tighter curls, often benefit from this. Other people whose hair is curly and/or gray also stand to gain a lot.

Here are Amazon example products of a silk pillowcase (it’s expensive, but worth it) and a silk bonnet, respectively

Want to read more?

You might like this article:

From straight to curly, thick to thin: here’s how hormones and chemotherapy can change your hair

Take care!


-

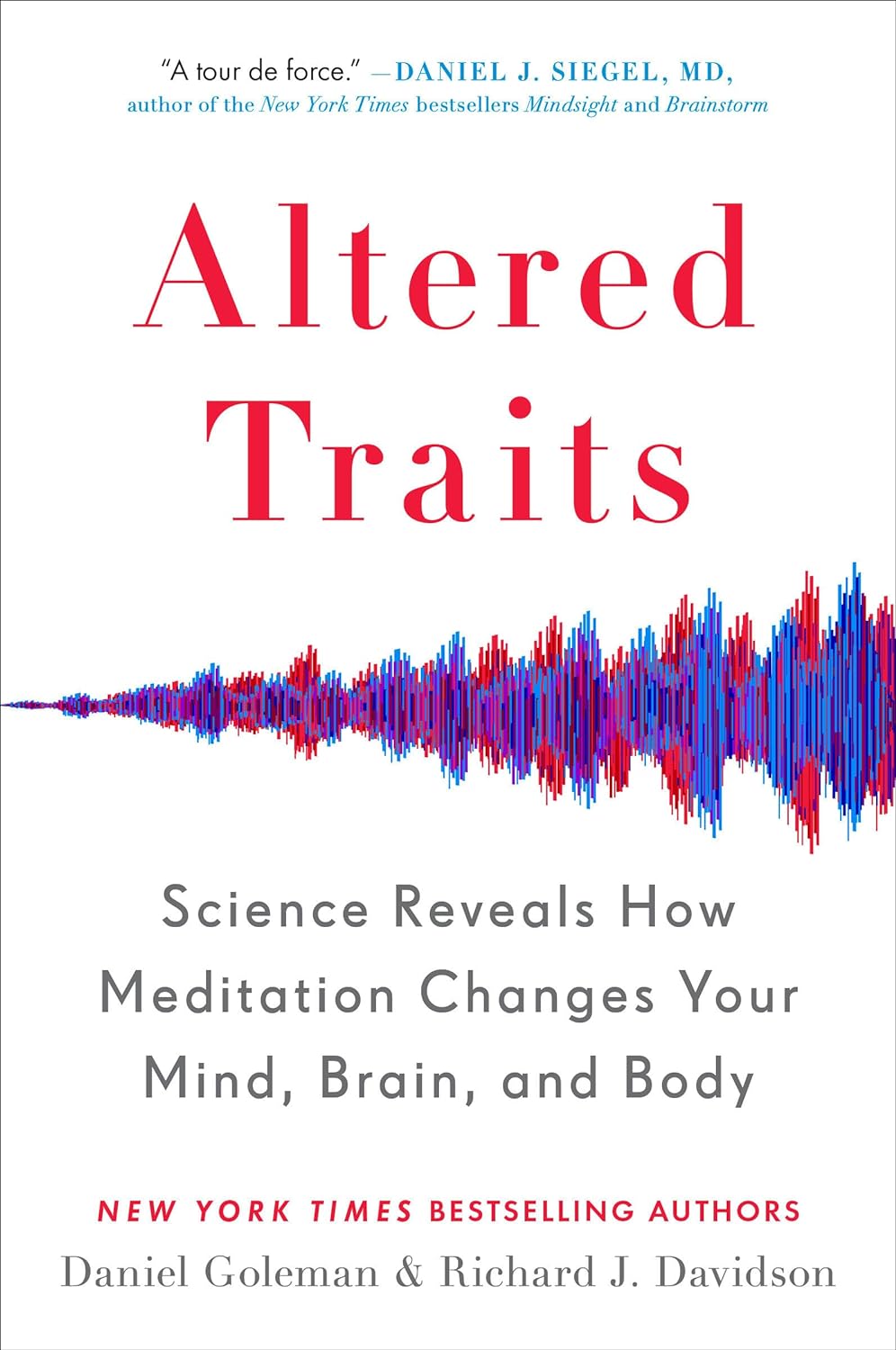

Altered Traits – by Dr. Daniel Goleman & Dr. Richard Davidson


We know that meditation helps people to relax, but what more than that? This book explores the available science.

We say “explore the available science”, but it’d be remiss of us not to note that the authors have also expanded the available science, conducting research in their own lab.

From stress tests and EEGs to attention tests and fMRIs, this book looks at the hard science of what different kinds of meditation do to the brain. Not just in terms of brain state, either, but gradual cumulative anatomical changes, too. Powerful stuff!

The style is very pop-science in presentation, easily comprehensible to all. Be aware though that this is an “if this, then that” book of science, not a how-to manual. If you want to learn to meditate, this isn’t the book for that.

Bottom line: if you’d like to understand more about how different kinds of meditation affect the brain differently, this is the book for you.


-

Breathe; Don’t Vent (At Least In The Moment)


Zen And The Art Of Breaking Things

We’ve talked before about identifying emotions and the importance of being able to express them:

Answering The Most Difficult Question: How Are You?

However, there can be a difference between “expressing how we feel” and “being possessed by how we feel and bulldozing everything in our path”

…which is, of course, primarily a problem in the case of anger—and by extension, emotions that are often contemporaneous with anger, such as jealousy, shame, fear, etc.

How much feeling is too much?

While this is in large part a subjective matter, clinically speaking the key question is generally: is it adversely affecting daily life to the point of being a problem?

For example, if you have to spend half an hour every day actively managing a certain emotion, that’s probably indicative of something unusual, but “unusual” is not inherently bad. If you’re managing it safely and in a way that doesn’t negatively affect the rest of your life, then that is generally considered fine, unless you feel otherwise about it.

A good example of this is complicated grief and/or prolonged grief.

But what about when it comes to anger? How much is ok?

When it comes to those around you, any amount of anger can seem like too much. Anger often makes us short-tempered even with people who are not the object of our anger, and it rarely brings out the best in us.

We can express our feelings in non-aggressive ways, for example:

and

Seriously Useful Communication Skills!

Sometimes, there’s another way though…

Breathe; don’t vent

That’s a great headline, but we can’t take the credit for it, because it came from:

Breathe, don’t vent: turning down the heat is key to managing anger

…in which it was found that, by all available metrics, the popular wisdom of “getting it off your chest” doesn’t necessarily stand up to scrutiny, at least in the short term:

❝The work was inspired in part by the rising popularity of rage rooms that promote smashing things (such as glass, plates and electronics) to work through angry feelings.

“I wanted to debunk the whole theory of expressing anger as a way of coping with it,” she said. “We wanted to show that reducing arousal, and actually the physiological aspect of it, is really important.❞

And indeed, she and her team did find that various arousal-increasing activities (such as hitting a punchbag, breaking things, doing vigorous exercise) did not help as much as arousal-decreasing activities, such as mindfulness-based relaxation techniques.

If you’d like to read the full paper, then so would we, but we couldn’t get full access to this one yet. However, the abstract includes representative statistics, so that’s worth a once-over:

Caveat!

Did you notice the small gap between their results and their conclusion?

In a lab or similar short-term observational setting, their recommendation is clearly correct.

However, if the source of your anger is something chronic and persistent, it could well be that calming down without addressing the actual cause is just “kicking the can down the road”, and will still have to actually be dealt with eventually.

So, while “here be science”, it’s not a mandate for necessarily suffering in silence. It’s more about being mindful about how we go about tackling our anger.

As for a primer on mindfulness, feel free to check out:

No-Frills, Evidence-Based Mindfulness

Take care!


-

How Useful Is Peppermint, Really?


Peppermint For Digestion & Against Nausea

Peppermint is often enjoyed to aid digestion, and sometimes as a remedy for nausea, but what does the science say about these uses?

Peppermint and digestion

In short: it works! (but beware)

Most studies of peppermint and digestion conducted with humans have focused on IBS, but its efficacy seems quite broad:

❝Peppermint oil is a natural product which affects physiology throughout the gastrointestinal tract, has been used successfully for several clinical disorders, and appears to have a good safety profile.❞

However, and this is important: if your digestive problem is GERD, then you may want to skip it:

❝The univariate logistic regression analysis showed the following risk factors: eating 1–2 meals per day (OR = 3.50, 95% CI: 1.75–6.98), everyday consumption of peppermint tea (OR = 2.00, 95% CI: 1.14–3.50), and eating one, big meal in the evening instead of dinner and supper (OR = 1.80, 95% CI: 1.05–3.11).

The multivariate analysis confirmed that frequent peppermint tea consumption was a risk factor (OR = 2.00, 95% CI: 1.08–3.70).❞

~ Dr. Jarosz & Dr. Taraszewska

Source: Risk factors for gastroesophageal reflux disease: the role of diet

Peppermint and nausea

Peppermint is also sometimes recommended as a nausea remedy. Does it work?

The answer is: maybe

The thing with nausea is it is a symptom with a lot of possible causes, so effectiveness of remedies may vary. But for example:

- Aromatherapy for treatment of postoperative nausea and vomiting ← no better than placebo

- The Effect of Combined Inhalation Aromatherapy with Lemon and Peppermint on Nausea and Vomiting of Pregnancy: A Double-Blind, Randomized Clinical Trial ← initially no better than placebo, then performed better on subsequent days

- The Effects of Peppermint Oil on Nausea, Vomiting and Retching in Cancer Patients Undergoing Chemotherapy: An Open Label Quasi-Randomized Controlled Pilot Study ← significant benefit immediately

Summary

Peppermint is useful against a wide variety of gastrointestinal disorders, including IBS, but very definitely excluding GERD (in the case of GERD, it may make things worse).

Peppermint may help with nausea, depending on the cause.

Where can I get some?

Peppermint tea, and peppermint oil, you can probably find in your local supermarket (as well as fresh mint leaves, perhaps).

For the “heavy guns” that is peppermint essential oil, here’s an example product on Amazon for your convenience

Enjoy!


-

Ikigai – by Héctor García and Francesc Miralles


Ikigai is the Japanese term for what in English we often call “raison d’être”… in French, because English is like that.

But in other words: ikigai is one’s purpose in life, one’s reason for living.

The authors of this work spend some chapters extolling the virtues of finding one’s ikigai, and the health benefits that doing so can convey. It is, quite clearly, an important and relevant factor.

The rest of the book goes beyond that, though, and takes a holistic look at why (and how) healthy longevity is enjoyed by:

- Japanese people in general,

- Okinawans in particular,

- Residents of Okinawa’s “blue zone” village with the highest percentage of supercentenarians, most of all.

Covering considerations from ikigai to diet to small daily habits to attitudes to life, we’re essentially looking at a blueprint for healthy longevity.

For a book whose title and cover suggest philosophy-heavy content, there’s a lot of science in here too, by the way! From microbiology to psychiatry to nutrition science to cancer research, this book covers all bases.

In short: this book gives a lot of good science-based suggestions for adjustments we can make to our lives, without moving to an Okinawan village!


-

The Optimal Morning Routine, Per Neuroscience


Dr. Andrew Huberman, neuroscientist and professor of neurobiology, has insights:

The foundations of a good day

Here are some key things to consider:

- The role of light: get sunlight exposure within an hour of waking to anchor your body’s cortisol pulse, set your circadian rhythm, and boost mood-regulating dopamine. Light exposure on the skin also boosts hormone levels like testosterone and estrogen, contributing to energy, motivation, and overall wellbeing.

- The role of caffeine: delay caffeine intake for 60–90 minutes after waking to allow adenosine to clear naturally, preventing afternoon energy crashes. Otherwise, caffeine will block the adenosine for 4–8 hours, causing the wave of adenosine-induced sleepiness to resurge later.

- The role of exercise: morning exercise helps clear adenosine, raise core body temperature, and improve wakefulness.

- The role of cold: cold showers or ice baths trigger adrenaline and dopamine surges, enhancing mood and drive for hours.

For more on each of these, enjoy:

Click Here If The Embedded Video Doesn’t Load Automatically!

Want to learn more?

You might also like to read:

Morning Routines That Just Flow

Take care!
