Which Magnesium? (And: When?)

10almonds is reader-supported. We may, at no cost to you, receive a portion of sales if you purchase a product through a link in this article.

It’s Q&A Day at 10almonds!

Have a question or a request? We love to hear from you!

In cases where we’ve already covered something, we might link to what we wrote before, but will always be happy to revisit any of our topics again in the future too—there’s always more to say!

As ever: if the question/request can be answered briefly, we’ll do it here in our Q&A Thursday edition. If not, we’ll make a main feature of it shortly afterwards!

So, no question/request too big or small!

❝Good morning! I have been waiting for this day to ask: the magnesium in my calcium supplement is neither of the two versions you mentioned in a recent email newsletter. Is this a good type of magnesium and is it efficiently bioavailable in this composition? I also take magnesium that says it is elemental (oxide, gluconate, and lactate). Are these absorbable and useful in these sources? I am not interested in taking things if they aren’t helping me or making me healthier. Thank you for your wonderful, informative newsletter. It’s so nice to get non-biased information❞

Thank you for the kind words! We certainly do our best.

For reference: the attached image showed a supplement containing “Magnesium (as Magnesium Oxide & AlgaeCal® l.superpositum)”

Also for reference: the two versions we compared head-to-head were these very good options:

Magnesium Glycinate vs Magnesium Citrate – Which is Healthier?

Let’s first borrow from the above, where we mentioned: magnesium oxide is probably the most widely-sold magnesium supplement because it’s cheapest to make. It also has woeful bioavailability, to the point that there seems to be negligible benefit to taking it. So we don’t recommend that.

As for magnesium gluconate and magnesium lactate:

- Magnesium lactate has very good bioavailability and in cases where people have problems with other types (e.g. gastrointestinal side effects), this will probably not trigger those.

- Magnesium gluconate has excellent bioavailability, probably coming second only to magnesium glycinate.

The “AlgaeCal® l.superpositum” supplement is a little opaque (and we did notice they didn’t specify what percentage of the magnesium is magnesium oxide, and what percentage is from the algae, meaning it could be a 99:1 ratio split, just so that they can claim it’s in there), but we can say Lithothamnion superpositum is indeed an alga, and magnesium from green things is usually good.

Except…

It’s generally best not to take magnesium and calcium together (as that supplement has you do). While they do work synergistically once absorbed, they compete for absorption first, so it’s best to take them separately. Because of magnesium’s sleep-improving qualities, many people take calcium in the morning and magnesium in the evening.

Some previous articles you might enjoy meanwhile:

- Pinpointing The Usefulness Of Acupuncture

- Science-Based Alternative Pain Relief

- Peripheral Neuropathy: How To Avoid It, Manage It, Treat It

- What Does Lion’s Mane Actually Do, Anyway?

Take care!

Don’t Forget…

Did you arrive here from our newsletter? Don’t forget to return to the email to continue learning!

Recommended

Learn to Age Gracefully

Join the 98k+ American women taking control of their health & aging with our 100% free (and fun!) daily emails:


The Lupus Solution – by Dr. Tiffany Caplan & Dr. Brent Caplan


Lupus is not fun, and this book sets out to make it easier.

Starting off by explaining the basics of autoimmunity and how lupus works, the authors go on to address the triggers of lupus and how to avoid them. If you’ve been suffering from lupus for a while, you probably know this part already, but it’s as well to give it a look over in case you missed something.

The real value of the book, though, comes in the 8 chapters of the section “Tools & Therapies”. These are mostly lifestyle adjustments, though some pharmaceutical approaches that can also help are explained too. And no, it’s not just “reduce inflammation” (but yes, also that); rather, a whole array of things are examined that often aren’t thought of as related to lupus, but in fact can have a big impact.

The style is to-the-point and informational, and formatted for ease of reading. It doesn’t convey more hard science than necessary, but it does have a fair bibliography at the back.

It’s a short book, weighing in at 182 pages. If you want something more comprehensive, check out our review of The Lupus Encyclopedia, which is 848 pages of information-dense text and diagrams.

Bottom line: if you have lupus and would like fewer symptoms, this book can help you with that quite a bit without getting as technical as the aforementioned encyclopedia.

Click here to check out The Lupus Solution, and live more comfortably!


Tasty Hot-Or-Cold Soup


Full of fiber as well as vitamins and minerals, this versatile “serve it hot or cold” soup is great whatever the weather—give it a try!

You will need

- 1 quart low-sodium vegetable stock—ideally you made this yourself from vegetable offcuts you kept in the freezer until you had enough to boil in a big pan, but failing that, a large supermarket will generally be able to sell you low-sodium stock cubes.

- 2 medium potatoes, peeled and diced

- 2 leeks, chopped

- 2 stalks celery, chopped

- 1 large onion, diced

- 1 large carrot, diced, or equivalent small carrots, sliced

- 1 zucchini, diced

- 1 red bell pepper, diced

- 1 tsp rosemary

- 1 tsp thyme

- ¼ bulb garlic, minced

- 1 small piece (equivalent of a teaspoon) ginger, minced

- 1 tsp red chili flakes

- 1 tsp black pepper, coarse ground

- ½ tsp turmeric

- Extra virgin olive oil, for frying

- Optional: ½ tsp MSG or 1 tsp low-sodium salt

About the MSG/salt: there should be enough sodium already from the stock and potatoes, but in case there’s not (since not all stock and potatoes are made equal), you might want to keep this on standby.

Method

(we suggest you read everything at least once before doing anything)

1) Heat some oil in a sauté pan, and add the diced onion, frying until it begins to soften.

2) Add the ginger, potato, carrot, and leek, and stir for about 5 minutes. The hard vegetables won’t be fully cooked yet; that’s fine.

3) Add the zucchini, red pepper, celery, and garlic, and stir for another 2–3 minutes.

4) Add the remaining ingredients; seasonings first, then vegetable stock, and let it simmer for about 15 minutes.

5) Check the potatoes are fully softened, and if they are, it’s ready to serve if you want it hot. Alternatively, let it cool, chill it in the fridge, and enjoy it cold.

Enjoy!

Want to learn more?

For those interested in some of the science of what we have going on today:

- Eat More (Of This) For Lower Blood Pressure

- Our Top 5 Spices: How Much Is Enough For Benefits? ← 5/5 in our recipe today!

- Monosodium Glutamate: Sinless Flavor-Enhancer Or Terrible Health Risk?

Take care!


Anti-Aging Risotto With Mushrooms, White Beans, & Kale


This risotto is made with millet, which, as well as being gluten-free, is high in resistant starch that’s great for both our gut and our blood sugars. Add the longevity-inducing ergothioneine in the shiitake and portobello mushrooms, as well as the well-balanced mix of macro- and micronutrients, carotenoids such as lutein (important against neurodegeneration), not to mention more beneficial phytochemicals in the seasonings, and we have a very anti-aging dish!

You will need

- 3 cups low-sodium vegetable stock

- 3 cups chopped fresh kale, stems removed (put the removed stems in the freezer with the vegetable offcuts you keep for making low-sodium vegetable stock)

- 2 cups thinly sliced baby portobello mushrooms

- 1 cup thinly sliced shiitake mushroom caps

- 1 cup millet, as yet uncooked

- 1 can white beans, drained and rinsed (or 1 cup white beans, cooked, drained, and rinsed)

- ½ cup finely chopped red onion

- ½ bulb garlic, finely chopped

- ¼ cup nutritional yeast

- 1 tbsp balsamic vinegar

- 2 tsp ground black pepper

- 1 tsp white miso paste

- ½ tsp MSG or 1 tsp low-sodium salt

- Extra virgin olive oil

Method

(we suggest you read everything at least once before doing anything)

1) Heat a little oil in a sauté or other pan suitable for both frying and volume-cooking. Fry the onion for about 5 minutes until soft, and then add the garlic, and cook for a further 1 minute, and then turn the heat down low.

2) Add about ¼ cup of the vegetable stock, and stir in the miso paste and MSG/salt.

3) Add the millet, followed by the rest of the vegetable stock. Cover and allow to simmer for 30 minutes, until all the liquid is absorbed and the millet is tender.

4) Meanwhile, heat a little oil to a medium heat in a skillet, and cook the mushrooms (both kinds) until lightly browned and softened, which should only take a few minutes. Add the vinegar and gently toss to coat the mushrooms, before setting aside.

5) Remove the millet from the heat when it is done, and gently stir in the mushrooms, nutritional yeast, white beans, and kale. Cover, and let stand for 10 minutes (this will be sufficient to steam the kale in situ).

6) Uncover and fluff the risotto with a fork, sprinkling in the black pepper as you do so.

7) Serve. For a bonus for your tastebuds and blood sugars, drizzle with aged balsamic vinegar.

Enjoy!

Want to learn more?

For those interested in some of the science of what we have going on today:

- The Magic Of Mushrooms: The “Longevity Vitamin” (That’s Not A Vitamin)

- Brain Food? The Eyes Have It!

- The Many Health Benefits Of Garlic

- Black Pepper’s Impressive Anti-Cancer Arsenal (And More)

- 10 Ways To Balance Blood Sugars

Take care!


Related Posts


Why are my muscles sore after exercise? Hint: it’s nothing to do with lactic acid


As many of us hit the gym or go for a run to recover from the silly season, you might notice a bit of extra muscle soreness.

This is especially true if it has been a while between workouts.

A common misunderstanding is that such soreness is due to lactic acid build-up in the muscles.

Research, however, shows lactic acid has nothing to do with it. The truth is far more interesting, but also a bit more complex.

It’s not lactic acid

We’ve known for decades that lactic acid has nothing to do with muscle soreness after exercise.

In fact, as one of us (Robert Andrew Robergs) has long argued, cells produce lactate, not lactic acid. This process actually opposes, rather than causes, the build-up of acid in the muscles and bloodstream.

Unfortunately, historical inertia means people still use the term “lactic acid” in relation to exercise.

Lactate doesn’t cause major problems for the muscles you use when you exercise. You’d probably be worse off without it due to other benefits to your working muscles.

Lactate isn’t the reason you’re sore a few days after upping your weights or exercising after a long break.

So, if it’s not lactic acid and it’s not lactate, what is causing all that muscle soreness?

Muscle pain during and after exercise

When you exercise, a lot of chemical reactions occur in your muscle cells. All these chemical reactions accumulate products and by-products which cause water to enter into the cells.

That causes the pressure inside and between muscle cells to increase.

This pressure, combined with the movement of molecules from the muscle cells, can stimulate nerve endings and cause discomfort during exercise.

The pain and discomfort you sometimes feel hours to days after an unfamiliar type or amount of exercise has a different list of causes.

If you exercise beyond your usual level or routine, you can cause microscopic damage to your muscles and their connections to tendons.

Such damage causes the release of ions and other molecules from the muscles, causing localised swelling and stimulation of nerve endings.

This is sometimes known as “delayed onset muscle soreness” or DOMS.

While the damage occurs during the exercise, the resulting response to the injury builds over the next one to two days (longer if the damage is severe). This can sometimes cause pain and difficulty with normal movement.

The upshot

Research is clear: the discomfort from delayed onset muscle soreness has nothing to do with lactate or lactic acid.

The good news, though, is that your muscles adapt rapidly to the activity that would initially cause delayed onset muscle soreness.

So, assuming you don’t wait too long (more than roughly two weeks) before being active again, the next time you do the same activity there will be much less damage and discomfort.

If you have an exercise goal (such as doing a particular hike or completing a half-marathon), ensure it is realistic and that you can work up to it by training over several months.

Such training will gradually build the muscle adaptations necessary to prevent delayed onset muscle soreness. And being less wrecked by exercise makes it more enjoyable and easier to stick to a routine or habit.

Finally, remove “lactic acid” from your exercise vocabulary. Its supposed role in muscle soreness is a myth that’s hung around far too long already.

Robert Andrew Robergs, Associate Professor – Exercise Physiology, Queensland University of Technology and Samuel L. Torrens, PhD Candidate, Queensland University of Technology

This article is republished from The Conversation under a Creative Commons license. Read the original article.


Pasteurization: What It Does And Doesn’t Do


Pasteurization’s Effect On Risks & Nutrients

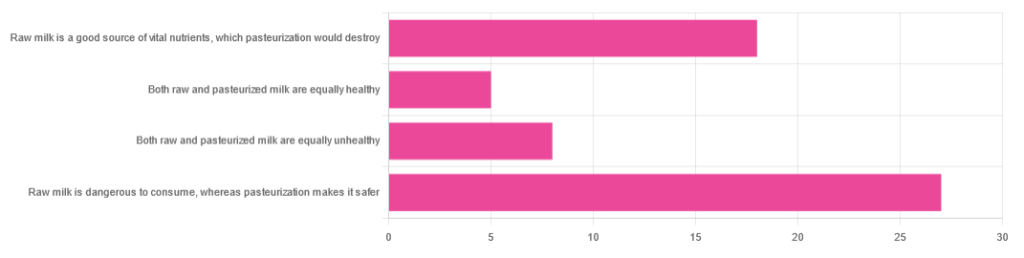

In Wednesday’s newsletter, we asked you for your health-related opinions of raw (cow’s) milk, and got the above-depicted, below-described, set of responses:

- About 47% said “raw milk is dangerous to consume, whereas pasteurization makes it safer”

- About 31% said “raw milk is a good source of vital nutrients which pasteurization would destroy”

- About 14% said “both raw milk and pasteurized milk are equally unhealthy”

- About 9% said “both raw milk and pasteurized milk are equally healthy”

Quite polarizing! So, what does the science say?

Raw milk is dangerous to consume, whereas pasteurization makes it safer: True or False?

True! Coincidentally, the 47% who voted for this are mirrored by the 47% of the general US population in a similar poll, deciding between the options of whether raw milk is less safe to drink (47%), just as safe to drink (15%), safer to drink (9%), or not sure (30%):

Public Fails to Appreciate Risk of Consuming Raw Milk, Survey Finds

As for what those risks are, by the way, unpasteurized dairy products are estimated to cause 840x more illness and 45x more hospitalizations than pasteurized products.

This is because unpasteurized milk can (and often does) contain E. coli, Listeria, Salmonella, Cryptosporidium, and other such unpleasantries, which pasteurization kills.

Source for both of the above claims:

(we know the title sounds vague, but all this information is easily visible in the abstract, specifically, the first two paragraphs)

Raw milk is a good source of vital nutrients which pasteurization would destroy: True or False?

False! Whether it’s a “good” source can be debated depending on other factors (e.g., if we considered milk’s inflammatory qualities against its positive nutritional content), but it’s undeniably a rich source. However, pasteurization doesn’t destroy or damage those nutrients.

Incidentally, in the same survey we linked up top, 16% of the general US public believed that pasteurization destroys nutrients, while 41% were not sure (and 43% knew that it doesn’t).

Note: for our confidence here, we are skipping over studies published by, for example, dairy farming lobbies and so forth. Those do agree, by the way, but nevertheless we like sources to be as unbiased as possible. The FDA, which is not completely unbiased, has produced a good list of references for this, about half of which we would consider biased, and half unbiased; the clue is generally in the journal names. For example, Food Chemistry and the Journal of Food Science and Journal of Nutrition are probably less biased than the International Dairy Association and the Journal of Dairy Science:

FDA | Raw Milk Misconceptions and the Danger of Raw Milk Consumption

This page covers a lot of other myths too, more than we have room to “bust” here, but it’s very interesting reading and we recommend checking it out!

Notably, we also weren’t able to find any refutation by counterexample on PubMed, with the very slight exception that some studies sometimes found that in the case of milks that were of low quality, pasteurization can reduce the vitamin E content while increasing the vitamin A content. For most milks however, no significant change was found, and in all cases we looked at, B-vitamins were comparable and vitamin D, popularly touted as a benefit of cow’s milk, is actually added later in any case. And, importantly, because this is a common argument, no change in lipid profiles appears to be findable either.

In science, when something has been well-studied and there aren’t clear refutations by counterexample, and the weight of evidence is clearly very much tipped into one camp, that usually means that camp has it right.

Milk generally is good/bad for the health: True or False?

True or False, depending on what we want to look at. It’s definitely not good for inflammation, but on the whole it seems to be cancer-neutral and only increases heart disease risk very slightly:

- Keep Inflammation At Bay ← short version is milk is bad, fermented milk products are fine in moderation

- Is Dairy Scary? ← short version is that milk is neither good nor terrible; fermented dairy products however are health-positive in numerous ways when consumed in moderation

You may be wondering…

…how this goes for the safety of dairy products when it comes to the bird flu currently affecting dairy cows, so:

Take care!


Nicotine Benefits (That We Don’t Recommend)!



❝Does nicotine have any benefits at all? I know it’s incredibly addictive but if you exclude the addiction, does it do anything?❞

Good news: yes, nicotine is a stimulant and can be considered a performance enhancer, for example:

❝Compared with the placebo group, the nicotine group exhibited enhanced motor reaction times, grooved pegboard test (GPT) results on cognitive function, and baseball-hitting performance, and small effect sizes were noted (d = 0.47, 0.46 and 0.41, respectively).❞

Read in full: Acute Effects of Nicotine on Physiological Responses and Sport Performance in Healthy Baseball Players

However, another study found that its use as a cognitive enhancer was only of benefit when there was already a cognitive impairment:

❝Studies of the effects of nicotinic systems and/or nicotinic receptor stimulation in pathological disease states such as Alzheimer’s disease, Parkinson’s disease, attention deficit/hyperactivity disorder and schizophrenia show the potential for therapeutic utility of nicotinic drugs.

In contrast to studies in pathological states, studies of nicotine in normal-non-smokers tend to show deleterious effects.

This contradiction can be resolved by consideration of cognitive and biological baseline dependency differences between study populations in terms of the relationship of optimal cognitive performance to nicotinic receptor activity.

Although normal individuals are unlikely to show cognitive benefits after nicotinic stimulation except under extreme task conditions, individuals with a variety of disease states can benefit from nicotinic drugs❞

Read in full: Effects of nicotinic stimulation on cognitive performance

Bad news: its addictive qualities wipe out those benefits due to tolerance and thus normalization in short order. So you may get those benefits briefly, but then you’re addicted and also lose the benefits, as well as ruining your health, making it a lose/lose/lose situation quite quickly.

As an aside, while nicotine is poisonous per se, in the quantities taken by most users, the nicotine itself is not usually what kills. It’s mostly the other stuff that comes with it (smoking is by far and away the worst of all; vaping is relatively less bad, but that’s not a strong statement in this case) that causes problems.

See also: Vaping: A Lot Of Hot Air?

However, this is still not an argument for, say, getting nicotine gum and thinking “no harmful effects”. You’ll get a brief performance boost, yes, but then it runs out, leaving you addicted and in a position whereby if you stop, your performance will be lower than before you started (since you got used to it, and it became your new normal), before eventually recovering.

In summary

We recommend against using nicotine in the first place, and for those who are addicted, we recommend quitting immediately if not contraindicated (check with your doctor if unsure; there are some situations where it is inadvisable to take away something your body is dependent on, until you correct some other thing first).

For more on quitting in general, see:

Addiction Myths That Are Hard To Quit

Take care!
