What does it mean to be transgender?

10almonds is reader-supported. We may, at no cost to you, receive a portion of sales if you purchase a product through a link in this article.

Transgender media coverage has surged in recent years for a wide range of reasons. While there are more transgender television characters than ever before, hundreds of bills are targeting transgender people’s access to medical care, sports teams, gender-specific public spaces, and other institutions.

Despite the increase in conversation about the transgender community, public confusion around transgender identity remains.

Read on to learn more about what it means to be transgender and understand challenges transgender people may face.

What does it mean to be transgender?

Transgender—or “trans”—is an umbrella term for people whose gender identity or gender expression does not conform to their sex assigned at birth. People can discover they are trans at any age.

Gender identity refers to a person’s inner sense of being a woman, a man, neither, both, or something else entirely. Trans people who don’t feel like women or men might describe themselves as nonbinary, agender, genderqueer, or two-spirit, among other terms.

Gender expression describes the way a person communicates their gender through their appearance—such as their clothing or hairstyle—and behavior.

A person whose gender expression doesn’t conform to the expectations of their assigned sex may not identify as trans. The only way to know for sure if someone is trans is if they tell you.

Cisgender—or “cis”—describes people whose gender identities match the sex they were assigned at birth.

How long have transgender people existed?

Being trans isn’t new. Although the word “transgender” only dates back to the 1960s, people whose identities defy traditional gender expectations have existed across cultures throughout recorded history.

How many people are transgender?

A 2022 Williams Institute study estimates that 1.6 million people over the age of 13 identify as transgender in the United States.

Is being transgender a mental health condition?

No. Conveying and communicating about your gender in a way that feels authentic to you is a normal and necessary part of self-expression.

Social and legal stigma, bullying, discrimination, harassment, negative media messages, and barriers to gender-affirming medical care can cause psychological distress for trans people. This is especially true for trans people of color, who face significantly higher rates of violence, poverty, housing instability, and incarceration—but trans identity itself is not a mental health condition.

What is gender dysphoria?

Gender dysphoria describes a feeling of unease that some trans people experience when their perceived gender doesn’t match their gender identity, or their internal sense of gender. A 2021 study of trans adults pursuing gender-affirming medical care found that most participants started experiencing gender dysphoria by the time they were 7.

When trans people don’t receive the support they need to manage gender dysphoria, they may experience depression, anxiety, social isolation, suicidal ideation, substance use disorder, eating disorders, and self-injury.

How do trans people manage gender dysphoria?

Every trans person’s experience with gender dysphoria is unique. Some trans people may alleviate dysphoria by wearing gender-affirming clothing or by asking others to refer to them by a new name and use pronouns that accurately reflect their gender identity. The 2022 U.S. Trans Survey found that nearly all trans participants who lived as a different gender than the sex they were assigned at birth reported that they were more satisfied with their lives.

Some trans people may also manage dysphoria by pursuing medical transition, which may involve taking hormones and getting gender-affirming surgery.

Access to gender-affirming medical care has been shown to reduce the risk of depression and suicide among trans youth and adults.

To learn more about the trans community, visit resources from the National Center for Transgender Equality, the Trevor Project, PFLAG, and Planned Parenthood.

If you or anyone you know is considering suicide or self-harm or is anxious, depressed, upset, or needs to talk, call the Suicide & Crisis Lifeline at 988 or text the Crisis Text Line at 741-741. For international resources, here is a good place to begin.

This article first appeared on Public Good News and is republished here under a Creative Commons license.

Don’t Forget…

Did you arrive here from our newsletter? Don’t forget to return to the email to continue learning!


Learn to Age Gracefully

Join the 98k+ American women taking control of their health & aging with our 100% free (and fun!) daily emails:


Coughing/Wheezing After Dinner?


The After-Dinner Activities You Don’t Want

A quick note first: our usual medical/legal disclaimer applies here, and we are not here to diagnose you or treat you; we are not doctors, let alone your doctors. Do see yours if you have any reason to believe there may be cause for concern.

Coughing and/or wheezing after eating is more common the younger or older someone is. Lest that seem contradictory: it’s a U-shaped curve, with the dip in the middle.

It can happen at any age and for any of a number of reasons, but there are patterns to the distribution:

Mostly affects younger people:

Allergies, asthma

Young people are less likely to have a body that’s fully adapted to all foods yet, and asthma can be triggered by certain foods (for example sulfites, a common preservative additive):

Adverse reactions to the sulphite additives

Foods/drinks that commonly contain sulfites include soft drinks, wines and beers, and dried fruit

As for the allergies side of things, you probably know the usual list of allergens to watch out for, e.g.: dairy, fish, crustaceans, eggs, soy, wheat, nuts.

However, that’s far from an exhaustive list, so it’s good to see an allergist if you suspect it may be an allergic reaction.

Affects young and old people equally:

Again, there’s a dip in the middle where this doesn’t tend to affect younger adults so much, but for young and old people:

Dysphagia (difficulty swallowing)

For children, this can be a case of not having fully got used to eating yet if very small, and when growing, can be a case of “this body is constantly changing and that makes things difficult”.

For older people, this can come from a variety of causes, but common culprits include neurological disorders (including stroke and/or dementia), or a change in saliva quality and quantity, a side-effect of many medications:

Hyposalivation in Elderly Patients

(particularly useful in the article above is the table of drugs that are associated with this problem, and the various ways they may affect it)

Managing this may be different depending on what is causing your dysphagia (as it could be anything from antidepressants to cancer), so this is definitely one to see your doctor about. For some pointers, though:

NHS Inform | Dysphagia (swallowing problems)

Affects older people more:

Gastroesophageal reflux disease (GERD)

This is a kind of acid reflux, but chronic, and often with a slightly different set of symptoms.

GERD has no known cure once established, but its symptoms can be managed (or avoided in the first place) by:

- Healthy eating (Mediterranean diet is, as usual, great)

- Weight loss (if and only if obese)

- Avoiding trigger foods

- Eating smaller meals

- Practicing mindful eating

- Staying upright for 3–4 hours after eating

And of course, don’t smoke, and ideally don’t drink alcohol.

You can read more about this (and the different ways it can go from there), here:

NICE | Gastro-oesophageal reflux disease

Note: the page above refers to it as “GORD”, because of the British English spelling “oesophagus” rather than “esophagus”. It’s the exact same organ and condition, just a different spelling.

Take care!


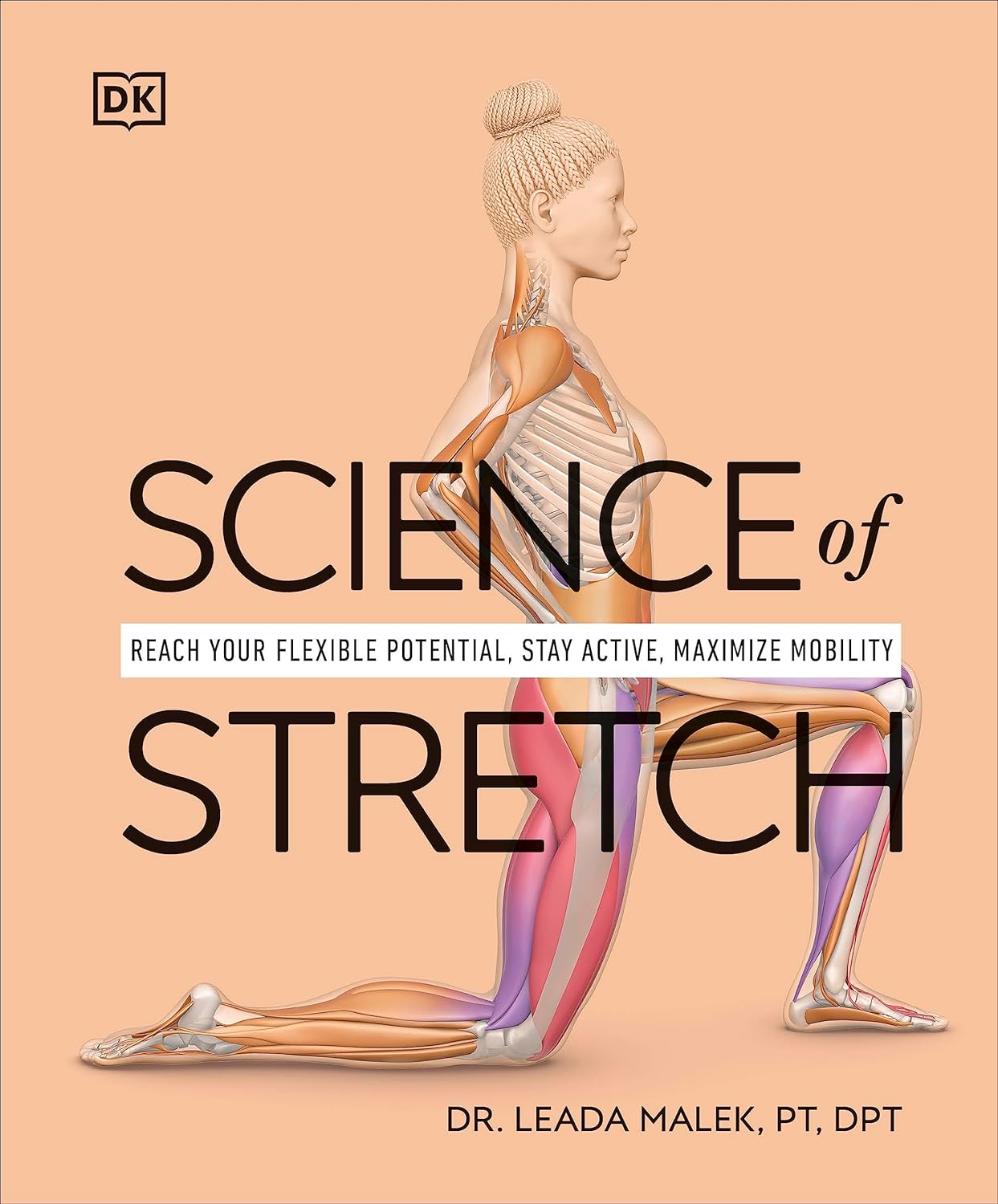

Science of Stretch – by Dr. Leada Malek


This book is part of a “Science of…” series, of which we’ve reviewed some others before (Yoga | HIIT | Pilates), and needless to say, we like them.

You may be wondering: is this just that thing where a brand releases the same content under multiple names to get more sales? No, it’s not (long-time 10almonds readers will know: if it were, we’d say so!).

While flexibility and mobility are indeed key benefits of yoga and Pilates, those books looked into the science of what was going on in yoga asanas and Pilates exercises, stretchy or otherwise, so their treatment of stretching was not nearly so deep as in this book.

In this one, Dr. Malek takes us on a wonderful tour of (relevant) human anatomy and physiology, far deeper than most pop-science books go into when it comes to stretching, so that the reader can really understand every aspect of what’s going on in there.

This is important, because it means busting a lot of myths (instead of busting tendons and ligaments and things), understanding why certain things work and (critically!) why certain things don’t, how certain stretching practices will sabotage our progress, things like that.

It’s also beautifully clearly illustrated! The cover art is a fair representation of the illustrations inside.

Bottom line: if you want to get serious about stretching, this is a top-tier book and you won’t regret it.

Click here to check out Science of Stretch, and learn what you can do and how!


Super-Nutritious Shchi


Today we have a recipe we’ve mentioned before, but now we have standalone recipe pages for recipes, so here we go. The dish of the day is shchi—which is Russian cabbage soup, which sounds terrible, and looks as bad as it sounds. But it tastes delicious, is an incredible comfort food, and is famous (in Russia, at least) for being something one can eat for many days in a row without getting sick of it.

It’s also got an amazing nutritional profile, with vitamins A, B, C, and D, as well as lots of calcium, magnesium, and iron (amongst other minerals), and a healthy blend of carbohydrates, proteins, and fats, plus an array of anti-inflammatory phytochemicals, and of course, water.

You will need

- 1 large white cabbage, shredded

- 1 cup red lentils

- ½ lb tomatoes, cut into eighths (as in: halve them, halve the halves, and halve the quarters)

- ½ lb mushrooms, sliced (or halved, if they are baby button mushrooms)

- 1 large onion, chopped finely

- 1 tbsp rosemary, chopped finely

- 1 tbsp thyme, chopped finely

- 1 tbsp black pepper, coarse ground

- 1 tsp cumin, ground

- 1 tsp yeast extract

- 1 tsp MSG, or 2 tsp low-sodium salt

- A little parsley for garnishing

- A little fat for cooking; this one’s a tricky and personal decision. Butter is traditional, but would make this recipe impossible to cook without going over the recommended limit for saturated fat. Avocado oil is healthy, relatively neutral in taste, and has a high smoke point for caramelizing the onions. Extra virgin olive oil is also a healthy choice, but not as neutral in flavor and does have a lower smoke point. Coconut oil has far too strong a taste and a low smoke point. Seed oils are very heart-unhealthy. All in all, avocado oil is a respectable choice from all angles except tradition.

Note: with regard to the seasonings, the above is a basic starting guide; feel free to add more per your preference—however, we do not recommend adding more cumin (it’ll overpower it) or more salt (there’s enough sodium in here already).

Method

(we suggest you read everything at least once before doing anything)

1) Cook the lentils until soft (a rice cooker is great for this, but a saucepan is fine); be generous with the water; we are making a soup, after all. Set them aside without draining.

2) Sauté the cabbage, and put it in a big stock pot or similar large pan (not yet on the heat).

3) Fry the mushrooms, and add them to the big pot (still not yet on the heat).

4) Use a stick blender to blend the lentils in the water you cooked them in, and then add to the big pot too.

5) Turn the heat on low, and if necessary, add more water to make it into a rich soup.

6) Add the seasonings (rosemary, thyme, cumin, black pepper, yeast extract, MSG-or-salt) and stir well. Keep the temperature on low; you can just let it simmer now because the next step is going to take a while:

7) Caramelize the onion (keep an eye on the big pot, stirring occasionally) and set it aside.

8) Fry the tomatoes quickly (we want them cooked, but just barely) and add them to the big pot.

9) Serve! The caramelized onion is a garnish, so put a little on top of each bowl of shchi. Add a little parsley too.

Enjoy!

Want to learn more?

For those interested in some of the science of what we have going on today:

- Level-Up Your Fiber Intake! (Without Difficulty Or Discomfort)

- The Magic Of Mushrooms: “The Longevity Vitamin” (That’s Not A Vitamin)

- Easily Digestible Vegetarian Protein Sources

- The Bare-Bones Truth About Osteoporosis

- Some Surprising Truths About Hunger And Satiety

Take care!


What You Don’t Know Can Kill You


Knowledge Is Power!

This is Dr. Simran Malhotra. She’s triple board-certified (in lifestyle medicine, internal medicine, and palliative care), and is also a health and wellness coach.

What does she want us to know?

Three things:

Wellness starts with your mindset

Dr. Malhotra shifted her priorities a lot during the initial and perhaps most chaotic phase of the COVID pandemic:

❝My husband, a critical care physician, was consumed in the trenches of caring for COVID patients in the ICU. I found myself knee-deep in virtual meetings with families whose loved ones were dying of severe COVID-related illnesses. Between the two of us, we saw more trauma, suffering, and death, than we could have imagined.

The COVID-19 pandemic opened my eyes to how quickly life can change our plans and reinforced the importance of being mindful of each day. Harnessing the power to make informed decisions is important, but perhaps even more important is focusing on what is in our control and taking action, even if it is the tiniest step in the direction we want to go!❞

~ Dr. Simran Malhotra

We can only make informed decisions if we have good information. That’s one of the reasons we try to share as much information as we can each day at 10almonds! But a lot will always depend on personalized information.

There are one-off (and sometimes potentially life-saving) things like health genomics:

The Real Benefit Of Genetic Testing

…but also smaller things that are informative on an ongoing basis, such as keeping track of your weight, your blood pressure, your hormones, and other metrics. You can even get fancy:

Track Your Blood Sugars For Better Personalized Health

Lifestyle is medicine

It’s often said that “food is medicine”. But also, movement is medicine. Sleep is medicine. In short, your lifestyle is the most powerful medicine that has ever existed.

Lifestyle encompasses very many things, but fortunately, there’s an “80:20 rule” in play that simplifies it a lot because if you take care of the top few things, the rest will tend to look after themselves:

These Top Few Things Make The Biggest Difference To Overall Health

Gratitude is better than fear

If we receive an unfavorable diagnosis (and let’s face it, most diagnoses are unfavorable), it might not seem like something to be grateful for.

But it is, insofar as it allows us to then take action! The information itself is what gives us our best chance of staying safe. And if that’s not possible, e.g. in the worst-case scenario of a terminal diagnosis (which Dr. Malhotra sees a lot, given that one of her three board certifications is in palliative care), it at least gives us the information that allows us to make the best use of whatever time remains to us.

See also: Managing Your Mortality

Which is very important!

…and/but possibly not the cheeriest note on which to end, so when you’ve read that, let’s finish today’s main feature on a happier kind of gratitude:

How To Get Your Brain On A More Positive Track (Without Toxic Positivity)

Want to hear more from Dr. Malhotra?

Showing how serious she is about how our genes do not determine our destiny and that knowledge is power, here she talks about her “previvor’s journey”, as she puts it, with regard to why she decided to have preventative cancer surgery in light of discovering her BRCA1 genetic mutation:

Click Here If The Embedded Video Doesn’t Load Automatically

Take care!


One More Reason To Prioritize Sleep To Fight Cognitive Decline


We’ve talked sometimes at 10almonds about how important sleep is for many aspects of health, including for brain health, and including in later life.

There’s a common myth that older people require less sleep; the reality is that sleeping less and not dying of it does not equate to needing less.

See also: Sleep: Yes, You Really Do Still Need It!

And: How Sleep-Deprived Are You, Really?

Quantity is not everything though; quality absolutely matters too. We’ve written about that here:

The 6 Dimensions Of Sleep (And Why They Matter) ← duration is just one dimension out of the six

We’ve even gone into some more obscure, but still very important things, such as: How Your Sleep Position Changes Dementia Risk

We’ve also talked about the role of sleep in memory (and forgetting): How Your Brain Chooses What To Remember

With that in mind…

Some more recent science

This study was about spatial memory, but what’s important (in our opinion) is that it’s about solidifying recent learning.

Researchers measured brain activity in rats for up to 20 hours of sleep following spatial learning tasks. Initially, the neuronal patterns observed during sleep mirrored those from the learning phase. However, as sleep progressed, these patterns transformed to resemble the activity seen when the rats later recalled the locations of food rewards. Interestingly, this reorganization happened during non-REM sleep, which means it wasn’t just a case of “the rats were dreaming about their day” (which is a well-established way in which memories do get encoded), but rather, the newly-learned experiences were being actively encoded in the rest of sleep.

This is critical, because in age-related cognitive decline, it’s very common for very long-term memory (VLTM) to remain intact, while long-term memory (LTM) and short-term memory (STM) crumble. For example, someone may remember many details of their life from 20 years ago, but forget where they currently live, or what happened in the conversation two minutes ago.

In other words, the biggest problem is not the storage of memories, but rather the encoding of them in the first place.

Which sleep facilitates!

And it’s also important to note that part about it being the rest of sleep, because when the brain is sleep-deprived, it’ll tend to prioritize REM sleep, which is important, but that means cutting back on other phases of sleep, and from this study, we can see that memory & learning will be amongst the things adversely affected by such cuts.

Here’s the paper, for those interested:

Sleep stages antagonistically modulate reactivation drift

And for those who prefer lighter reading, here’s a pop-science article about the same study, which explains it in more words than we can here:

But wait, there’s more!

Sleep resets neurons for new memories the next day, study finds

So, once again… It is absolutely critical to prioritize good sleep.

Want to know more?

Check out:

Calculate (And Enjoy) The Perfect Night’s Sleep

Take care!


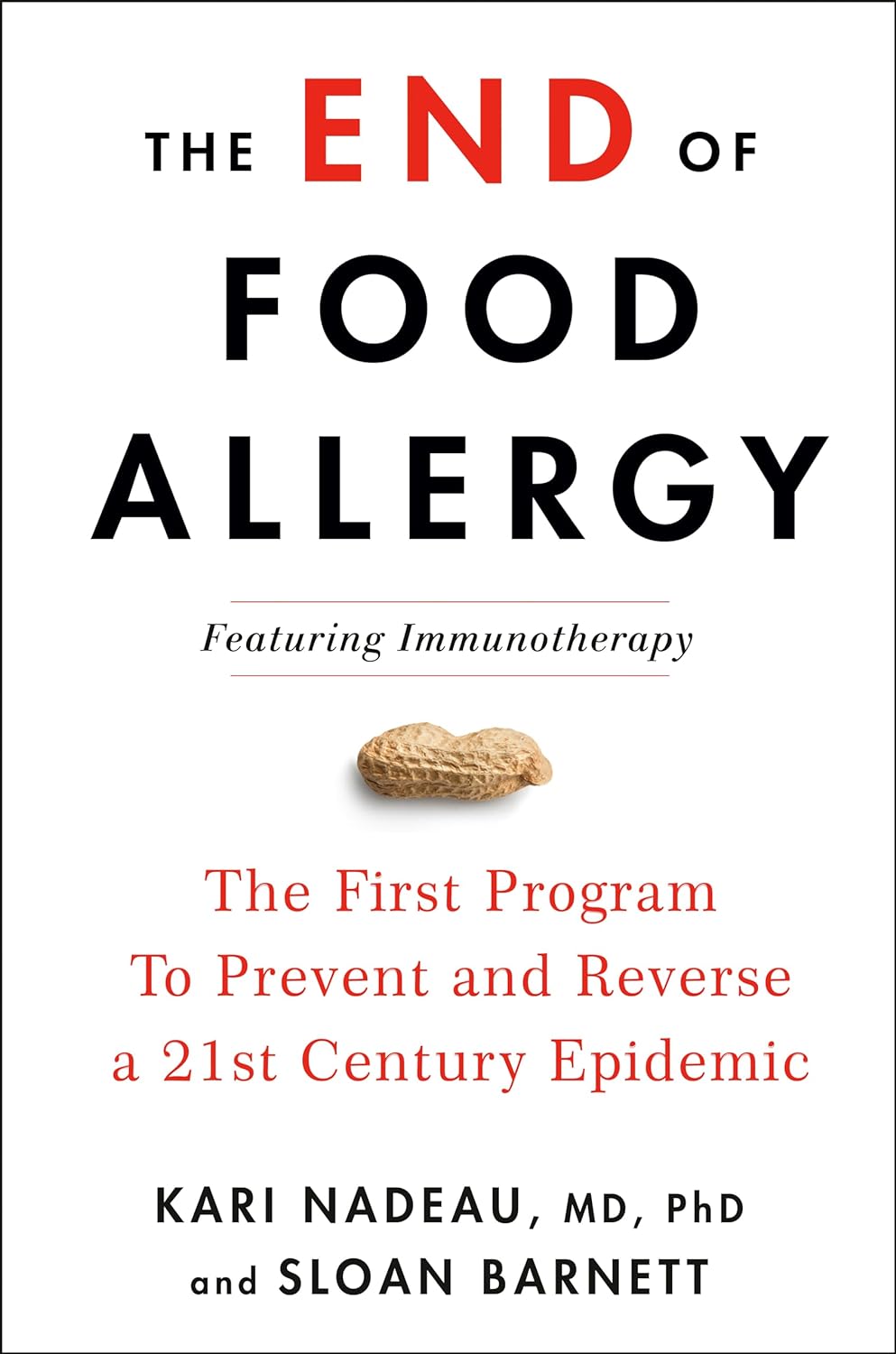

The End of Food Allergy – by Dr. Kari Nadeau & Sloan Barnett


We don’t usually mention author credentials beyond their occupation/title. However, in this case it bears acknowledging at least the first line of the author bio:

❝Kari Nadeau, MD, PhD, is the director of the Sean N. Parker Center for Allergy and Asthma Research at Stanford University and is one of the world’s leading experts on food allergy❞

We mention this, because there’s a lot of quack medicine out there [in general, but especially] when it comes to things such as food allergies. So let’s be clear up front that Dr. Nadeau is actually a world-class professional at the top of her field.

This book is, by the way, about true allergies—not intolerances or sensitivities. It does touch on those latter two, but it’s not the main meat of the book.

In particular, most of the research cited is around peanut allergies, though the usual other common allergens are all discussed too.

The authors’ writing style is that of a science educator (Dr. Nadeau’s co-author, Sloan Barnett, is a lawyer and health journalist). We get a clear explanation of the science from real-world to clinic and back again, and are left with a strong understanding, not just a conclusion.

The titular “End of Food Allergy” is a bold implicit claim; does the book deliver? Yes, actually.

The book lays out guidelines for safely avoiding food allergies developing in infants, and yes, really, how to reverse them in adults. But…

Big caveat:

The solution for reversing severe food allergies (e.g. “someone nearby touched a peanut three hours ago and now I’m in anaphylactic shock”), drug-assisted oral immunotherapy, takes 6–24 months of weekly several-hour-long clinic visits, relies on having a nearby clinic offering the service, and absolutely 100% cannot be done at home (on pain of probable death).

Bottom line: it’s by no means a magic bullet, but yes, it does deliver.

Click here to check out The End of Food Allergy to learn more!
