Spirulina vs Nori – Which is Healthier?

10almonds is reader-supported. We may, at no cost to you, receive a portion of sales if you purchase a product through a link in this article.

Our Verdict

When comparing spirulina to nori, we picked the nori.

Why?

In the battle of the seaweeds, if spirulina is a superfood (and it is), then nori is a super-duper food. So today is one of those “a very nutritious food making another very nutritious food look bad by standing next to it” days. With that in mind…

In terms of macros, they’re close to identical. They’re both mostly water with protein, carbs, and fiber. Technically nori is higher in carbs, but the difference is only about 2.5g/100g.

In the category of vitamins, spirulina has more vitamin B1, while nori has a lot more of vitamins A, B2, B3, B5, B6, B9, C, E, K, and choline.

When it comes to minerals, it’s a little closer but still a clear win for nori; spirulina has more copper, iron, and magnesium, while nori has more calcium, manganese, phosphorus, potassium, and zinc.

Want to try some nori? Here’s an example product on Amazon 😎

Want to learn more?

You might like to read:

21% Stronger Bones in a Year at 62? Yes, It’s Possible (No Calcium Supplements Needed!) ← nori was an important part of the diet enjoyed here

Take care!

Don’t Forget…

Did you arrive here from our newsletter? Don’t forget to return to the email to continue learning!

Recommended

Learn to Age Gracefully

Join the 98k+ American women taking control of their health & aging with our 100% free (and fun!) daily emails:

-

Managing Jealousy

Jealousy is often thought of as a young people’s affliction, but it can affect us at any age—whether we are the one being jealous, or perhaps a partner.

And, the “green-eyed monster” can really ruin a lot of things: relationships, friendships, general happiness, even physical health (via stress, anxiety, and bad sleep), and more.

The thing is, jealousy looks like one thing, but is actually mostly another.

Jealousy is a Scooby-Doo villain

That is to say: we can unmask it and see what much less threatening thing is underneath. Which is usually nothing more nor less than: insecurities

- Insecurity about losing one’s partner

- Insecurity about not being good enough

- Insecurity about looking bad socially

…etc. The latter, by the way, is usually the case when one’s partner is socially considered to be giving cause for jealousy, but the primary concern is not actually relational loss or any kind of infidelity, but rather, looking like one cannot keep one’s partner’s full attention romantically/sexually. This drives a lot of people to act on jealousy for the sake of appearances, in situations where they might otherwise, if they didn’t feel like they’d be adversely judged for it, be considerably more chill.

Thus, while monogamy certainly has its fine merits, there can also be a kind of “toxic monogamy” at hand, where a relationship becomes unhealthy because one partner is just trying to live up to social expectations of keeping the other partner in check.

This, by the way, is something that people in polyamorous and/or open relationships typically handle quite neatly, even if a lot of the following still applies. But today, we’re making the statistically safe assumption of a monogamous relationship, and talking about that!

How to deal with the social aspect

If you sit down with your partner and work out in advance the acceptable parameters of your relationship, you’ll be ahead of most people already. For example…

- What counts as cheating? Is it all and any sex acts with all and any people? If not, where’s the line?

- What about kissing? What about touching other body parts? If there are boundaries that are important to you, talk about them. Nothing is “too obvious” because it’s astonishing how many times it will happen that later someone says (in good faith or not), “but I thought…”

- What about being seen in various states of undress? Or seeing other people in various states of undress?

- Is meaningless flirting between friends ok, and if so, how do we draw the line with regard to what is meaningless? And how are we defining flirting, for that matter? Talk about it and ensure you are both on the same page.

- If a third party is possibly making moves on one of us under the guise of “just being friendly”, where and how do we draw the line between friendliness and romantic/sexual advances? What’s the difference between a lunch date with a friend and a romantic meal out for two, and how can we define the difference in a way that doesn’t rely on subjective “well I didn’t think it was romantic”?

If all this seems like a lot of work, please bear in mind, it’s a lot more fun to cover this cheerfully as a fun couple exercise in advance, than it is to argue about it after the fact!

See also: Boundary-Setting Beyond “No”

How to deal with the more intrinsic insecurities

For example, when jealousy is a sign of a partner fearing not being good enough, not measuring up, or perhaps even losing their partner.

The key here might not shock you: communication

Specifically, reassurance. But critically, the correct reassurance!

A partner who is jealous will often seek the wrong reassurance, for example wanting to read their partner’s messages on their phone, or things like that. And while that is a natural desire when experiencing jealousy, it’s not actually helpful. Because while incriminating messages could confirm infidelity, it’s impossible to prove a negative, and if nothing incriminating is found, the jealous partner can just go on fearing the worst regardless. After all, their partner could have a burner phone somewhere, or a hidden app for cheating, or something else like that. So, no reassurance can ever be given/gained by such requests (which can also become unpleasantly controlling, which hopefully nobody wants).

A quick note on “if you have nothing to hide, you have nothing to fear”: rhetorically that works, but practically it doesn’t.

Writer’s example: when my late partner and I formalized our relationship, we discussed boundaries, and I expressed “so far as I am concerned, I have no secrets from you, except secrets that are not mine to share. For example, if someone has confided in me and asked that I not share it, I won’t. Aside from that, you have access-all-areas in my life; me being yours has its privileges” and this policy itself would already pre-empt any desire to read my messages.

Now indeed, I had nothing to hide. I am by character devoted to a fault. But my friends may well sometimes have things they don’t want me to share, which made that a necessary boundary to highlight (which my partner, an absolute angel by the way and not prone to unhealthy manifestations of jealousy in any case, understood completely).

So, it is best if the partner of a jealous person can explain the above principles as necessary, and offer the correct reassurance instead. Which could be any number of things, but for example:

- I am yours, and nobody else has a chance

- I fully intend to stay with you for life

- You are the best partner I have ever had

- Being with you makes my life so much better

…etc. Note that none of these are “you don’t have to worry about so-and-so”, or “I am not cheating on you”, etc, because it’s about your and your partner’s relationship. If they ask for reassurances with regard to other people or activities, by all means state them as appropriate, but try to keep the focus on you two.

And if your partner (or you, if it’s you who’s jealous) can express the insecurity in the format…

“I’m afraid of _____ because _____”

…then the “because” will allow for much more specific reassurance. We all have insecurities, we all have reasons we might fear not being good enough for our partner, or losing their affection, and the best thing we can do is choose to trust our partners at least enough to discuss those fears openly with each other.

See also: Save Time With Better Communication ← this can avoid a lot of time-consuming arguments

What about if the insecurity is based in something demonstrably correct?

By this we mean, something like a prior history of cheating, or other reasons for trust issues. In such a case, the jealous partner may well have a reason for their jealousy that isn’t based on a personal insecurity.

In our previous article about boundaries, we talked about relationships (romantic or otherwise) having a “price of entry”. In this case, you each have a “price of entry”:

- The “price of entry” to being with the person who has previously cheated (or similar), is being able to accept that.

- And for the person who cheated (or similar), very likely their partner will have the “price of entry” of “don’t do that again, and also meanwhile accept in good grace that I might be jittery about it”.

And, if the betrayal of trust was something that happened between the current partners in the current relationship, most likely that was also traumatic for the person whose trust was betrayed. Many people in that situation find that trust can indeed be rebuilt, but slowly, and the pain itself may also need treatment (such as therapy and/or couples therapy specifically).

See also: Relationships: When To Stick It Out & When To Call It Quits ← this covers both sides

And finally, to finish on a happy note:

Only One Kind Of Relationship Promotes Longevity This Much!

Take care!

-

Five Advance Warnings of Multiple Sclerosis

First things first, a quick check-in with regard to how much you know about multiple sclerosis (MS):

- Do you know what causes it?

- Do you know how it happens?

- Do you know how it can be fixed?

If your answer to the above questions is “no”, then take solace in the fact that modern science doesn’t know either.

What we do know is that it’s an autoimmune condition, and that it results in the degradation of myelin, the “insulator” of nerves, in the central nervous system.

- How exactly this is brought about remains unclear, though there are several leading hypotheses including autoimmune attack of myelin itself, or disruption to the production of myelin.

- Treatments look to reduce/mitigate inflammation, and/or treat other symptoms (which are many and various) on an as-needed basis.

If you’re wondering about the prognosis after diagnosis, the scientific consensus on that is also “we don’t know”:

Read: Personalized medicine in multiple sclerosis: hope or reality?

This paper, like every other one we considered putting in that spot, concludes with basically begging for research to be done to identify biomarkers in a useful fashion that could help classify many distinct forms of MS, rather than the current “you have MS, but who knows what that will mean for you personally because it’s so varied” approach.

The Five Advance Warning Signs

Something we do know! First, we’ll quote directly the researchers’ conclusion:

❝We identified 5 health conditions associated with subsequent MS diagnosis, which may be considered not only prodromal but also early-stage symptoms.

However, these health conditions overlap with prodrome of two other autoimmune diseases, hence they lack specificity to MS.❞

So, these things are a warning, five alarm bells, but not necessarily diagnostic criteria.

Without further ado, the five things are:

- depression

- sexual disorders

- constipation

- cystitis

- urinary tract infections

❝This association was sufficiently robust at the statistical level for us to state that these are early clinical warning signs, probably related to damage to the nervous system, in patients who will later be diagnosed with multiple sclerosis.

The overrepresentation of these symptoms persisted and even increased over the five years after diagnosis.❞

Read the paper for yourself:

Hot off the press! Published only yesterday!

Want to know more about MS?

Here’s a very comprehensive guide:

National clinical guideline for diagnosis and management of multiple sclerosis

Take care!

-

Their First Baby Came With Medical Debt. These Illinois Parents Won’t Have Another.

JACKSONVILLE, Ill. — Heather Crivilare was a month from her due date when she was rushed to an operating room for an emergency cesarean section.

The first-time mother, a high school teacher in rural Illinois, had developed high blood pressure, a sometimes life-threatening condition in pregnancy that prompted doctors to hospitalize her. Then Crivilare’s blood pressure spiked, and the baby’s heart rate dropped. “It was terrifying,” Crivilare said.

She gave birth to a healthy daughter. What followed, though, was another ordeal: thousands of dollars in medical debt that sent Crivilare and her husband scrambling for nearly a year to keep collectors at bay.

The Crivilares would eventually get on nine payment plans as they juggled close to $5,000 in bills.

“It really felt like a full-time job some days,” Crivilare recalled. “Getting the baby down to sleep and then getting on the phone. I’d set up one payment plan, and then a new bill would come that afternoon. And I’d have to set up another one.”

Crivilare’s pregnancy may have been more dramatic than most. But for millions of new parents, medical debt is now as much a hallmark of having children as long nights and dirty diapers.

About 12% of the 100 million U.S. adults with health care debt attribute at least some of it to pregnancy or childbirth, according to a KFF poll.

These people are more likely to report they’ve had to take on extra work, change their living situation, or make other sacrifices.

Overall, women between 18 and 35 who have had a baby in the past year and a half are twice as likely to have medical debt as women of the same age who haven’t given birth recently, other KFF research conducted for this project found.

“You feel bad for the patient because you know that they want the best for their pregnancy,” said Eilean Attwood, a Rhode Island OB-GYN who said she routinely sees pregnant women anxious about going into debt.

“So often, they may be coming to the office or the hospital with preexisting debt from school, from other financial pressures of starting adult life,” Attwood said. “They are having to make real choices, and what those real choices may entail can include the choice to not get certain services or medications or what may be needed for the care of themselves or their fetus.”

Best-Laid Plans

Crivilare and her husband, Andrew, also a teacher, anticipated some of the costs.

The young couple settled in Jacksonville, in part because the farming community less than two hours north of St. Louis was the kind of place two public school teachers could afford a house. They saved aggressively. They bought life insurance.

And before Crivilare got pregnant in 2021, they enrolled in the most robust health insurance plan they could, paying higher premiums to minimize their deductible and out-of-pocket costs.

Then, two months before their baby was due, Crivilare learned she had developed preeclampsia. Her pregnancy would no longer be routine. Crivilare was put on blood pressure medication, and doctors at the local hospital recommended bed rest at a larger medical center in Springfield, about 35 miles away.

“I remember thinking when they insisted that I ride an ambulance from Jacksonville to Springfield … ‘I’m never going to financially recover from this,’” she said. “‘But I want my baby to be OK.’”

For weeks, Crivilare remained in the hospital alone as covid protocols limited visitors. Meanwhile, doctors steadily upped her medications while monitoring the fetus. It was, she said, “the scariest month of my life.”

Fear turned to relief after her daughter, Rita, was born. The baby was small and had to spend nearly two weeks in the neonatal intensive care unit. But there were no complications. “We were incredibly lucky,” Crivilare said.

When she and Rita finally came home, a stack of medical bills awaited. One was already past due.

Crivilare rushed to set up payment plans with the hospitals in Jacksonville and Springfield, as well as the anesthesiologist, the surgeon, and the labs. Some providers demanded hundreds of dollars a month. Some settled for monthly payments of $20 or $25. Some pushed Crivilare to apply for new credit cards to pay the bills.

“It was a blur of just being on the phone constantly with all the different people collecting money,” she recalled. “That was a nightmare.”

Big Bills, Big Consequences

The Crivilares’ bills weren’t unusual. Parents with private health coverage now face on average more than $3,000 in medical bills related to a pregnancy and childbirth that aren’t covered by insurance, researchers at the University of Michigan found.

Out-of-pocket costs are even higher for families with a newborn who needs to stay in a neonatal ICU, averaging $5,000. And for 1 in 11 of these families, medical bills related to pregnancy and childbirth exceed $10,000, the researchers found.

“This forces very difficult trade-offs for families,” said Michelle Moniz, a University of Michigan OB-GYN who worked on the study. “Even though they have insurance, they still have these very high bills.”

Nationwide polls suggest millions of these families end up in debt, with sometimes devastating consequences.

About three-quarters of U.S. adults with debt related to pregnancy or childbirth have cut spending on food, clothing, or other essentials, KFF polling found.

About half have put off buying a home or delayed their own or their children’s education.

These burdens have spurred calls to limit what families must pay out-of-pocket for medical care related to pregnancy and childbirth.

In Massachusetts, state Sen. Cindy Friedman has proposed legislation to exempt all these bills from copays, deductibles, and other cost sharing. This would parallel federal rules that require health plans to cover recommended preventive services like annual physicals without cost sharing for patients. “We want … healthy children, and that starts with healthy mothers,” Friedman said. Massachusetts health insurers have warned the proposal will raise costs, but an independent state analysis estimated the bill would add only $1.24 to monthly insurance premiums.

Tough Lessons

For her part, Crivilare said she wishes new parents could catch their breath before paying down medical debt.

“No one is in the right frame of mind to deal with that when they have a new baby,” she said, noting that college graduates get such a break. “When I graduated with my college degree, it was like: ‘Hey, new adult, it’s going to take you six months to kind of figure out your life, so we’ll give you this six-month grace period before your student loans kick in and you can get a job.’”

Rita is now 2. The family scraped by on their payment plans, retiring the medical debt within a year, with help from Crivilare’s side job selling resources for teachers online.

But they are now back in debt, after Rita’s recurrent ear infections required surgery last year, leaving the family with thousands of dollars in new medical bills.

Crivilare said the stress has made her think twice about seeing a doctor, even for Rita. And, she added, she and her husband have decided their family is complete.

“It’s not for us to have another child,” she said. “I just hope that we can put some of these big bills behind us and give [Rita] the life that we want to give her.”

About This Project

“Diagnosis: Debt” is a reporting partnership between KFF Health News and NPR exploring the scale, impact, and causes of medical debt in America.

The series draws on original polling by KFF, court records, federal data on hospital finances, contracts obtained through public records requests, data on international health systems, and a yearlong investigation into the financial assistance and collection policies of more than 500 hospitals across the country.

Additional research was conducted by the Urban Institute, which analyzed credit bureau and other demographic data on poverty, race, and health status for KFF Health News to explore where medical debt is concentrated in the U.S. and what factors are associated with high debt levels.

The JPMorgan Chase Institute analyzed records from a sampling of Chase credit card holders to look at how customers’ balances may be affected by major medical expenses. And the CED Project, a Denver nonprofit, worked with KFF Health News on a survey of its clients to explore links between medical debt and housing instability.

KFF Health News journalists worked with KFF public opinion researchers to design and analyze the “KFF Health Care Debt Survey.” The survey was conducted Feb. 25 through March 20, 2022, online and via telephone, in English and Spanish, among a nationally representative sample of 2,375 U.S. adults, including 1,292 adults with current health care debt and 382 adults who had health care debt in the past five years. The margin of sampling error is plus or minus 3 percentage points for the full sample and 3 percentage points for those with current debt. For results based on subgroups, the margin of sampling error may be higher.

Reporters from KFF Health News and NPR also conducted hundreds of interviews with patients across the country; spoke with physicians, health industry leaders, consumer advocates, debt lawyers, and researchers; and reviewed scores of studies and surveys about medical debt.

KFF Health News is a national newsroom that produces in-depth journalism about health issues and is one of the core operating programs at KFF—an independent source of health policy research, polling, and journalism. Learn more about KFF.

Subscribe to KFF Health News’ free Morning Briefing.

Related Posts

-

The Best Exercise to Stop Your Legs From Giving Out

Dr. Doug Weiss, seniors-specialist physio, has an exercise that stops your knees from being tricked into collapsing (which is very common) by a misfiring (also common) reflex.

Step up…

Set up to step up thus:

- Use a sturdy support like a countertop or chair.

- Have an aerobic step or similar firm surface to step onto.

When you’re ready:

- Stand facing away from the step.

- Place one hand on the support for stability.

- Step backwards up onto the step with your right leg, then your left leg, so both feet are on the step.

- Step forward to come back down.

Once you’re confident of the series of movements, do it without the support, and do it for a few minutes each day. Don’t worry about how easy it becomes; this is not, first and foremost, a strength-training exercise; you don’t have to start adding weights or anything (although of course you can if you want).

How it works: there’s a part of you called the Golgi tendon organ, and it can trigger a Golgi tendon reflex, which is one of the body’s equivalents of a steam valve. Instead of letting off steam to avoid a boiler explosion, though, it collapses a joint to save it from overload. If not exercised regularly, it can get overly sensitive and mistake your mere bodyweight for an overload. So the joint collapses, as though to save you from snapping a tendon, when there was no such danger. Exercising in the way described retrains the Golgi tendon reflex to be triggered only by an actual overload, not the mere act of stepping.

Writer’s note: this one’s interesting to me as I have a) a strong lower body b) hypermobile joints that thus occasionally just fold like laundry regardless. Could it be that this will fix that? I guess I’ll find out 🙂

Meanwhile, for more on all of the above plus a visual demonstration, enjoy:

Click Here If The Embedded Video Doesn’t Load Automatically!

Want to learn more?

You might also like:

What Nobody Teaches You About Strengthening Your Knees

Take care!

-

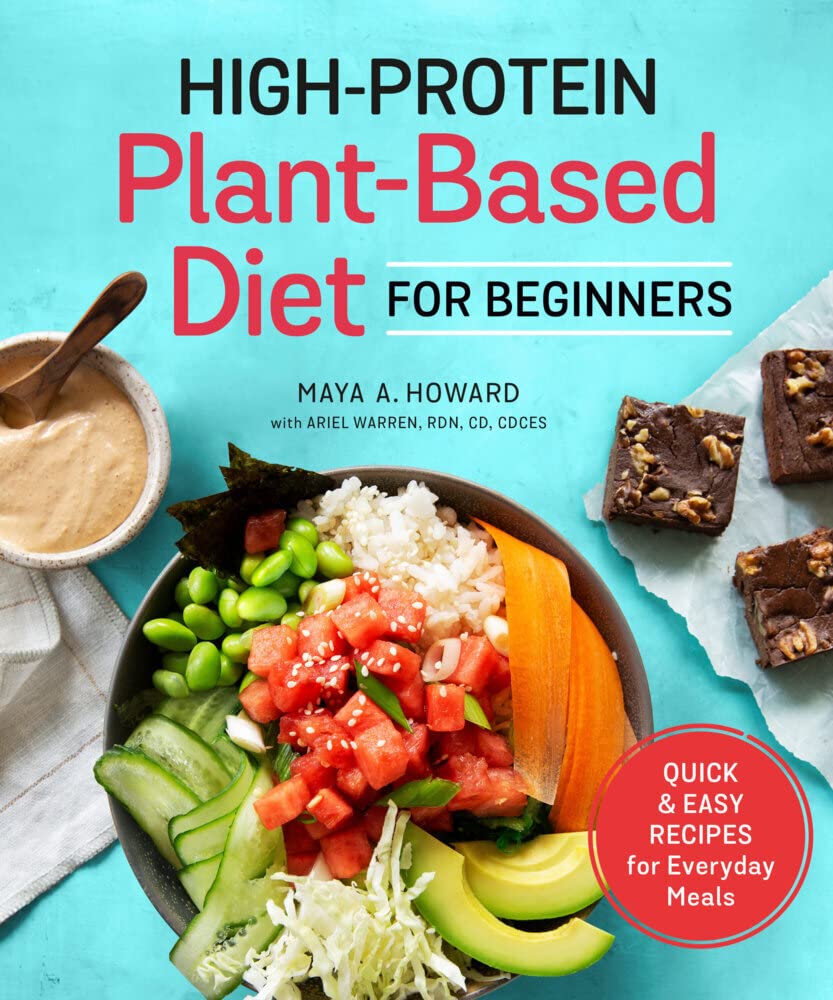

High-Protein Plant-Based Diet for Beginners – by Maya Howard with Ariel Warren

Seasoned vegans (well-seasoned vegans?) will know that getting enough protein from a plant-based diet is really not the challenge that many think it is, but for those just embarking on cutting out the meat, it’s not useful to say “it’s easy!”; it’s useful to show how.

That’s what this book does. And not just by saying “these foods” and leaving people to wonder if they need to eat a pound of tofu each day to get their protein in. Instead, recipes. Enough for a 4-week meal plan, and the idea is that after a month of eating that way, it won’t be nearly so mysterious.

The recipes are very easy to execute, while still having plenty of flavor (which is what happens when one uses a lot of flavorsome main ingredients and then seasons them well too). The ingredients are not obscure, and you should be able to find everything easily in any medium-sized supermarket.

As for the well-roundedness of the diet, we’ll mention that the “with Ariel Warren” in the by-line means that while the book was principally authored by Maya Howard (who is, at time of writing, a nutritionist-in-training), she had input throughout from Ariel Warren (a Registered Dietitian Nutritionist) to ensure she didn’t go off-piste anywhere, so it gets the professional stamp of approval.

Bottom line: if you’d like to cook plant-based while still prioritizing protein and you’re not sure how to make that exciting and fun instead of a chore, then this book will show you how to please your taste buds and improve your body composition at the same time.

Click here to check out High-Protein Plant-Based Diet for Beginners, and dig in!

-

Can We Do Fat Redistribution?

The famous answer: no

The truthful answer: yes, and we are doing it all the time whether we want to or not, so we might as well know what things affect our fat distribution in various body parts.

There’s a kernel of truth in the “no”, though, and where that comes from is that we cannot exclusively put fat on in a certain area only, and nor can we do “spot reduction”, i.e., intentionally lose fat from only one place.

How, then, do we do fat redistribution?

Your body is a living organism, not a statue

It’s easy to think “I’ve been carrying this fat in this place for 20 years”, but during that time the fat has been replaced several times and moved often; in fact, even the cells containing the fat have been replaced. Fat can seem like a substance that’s alien to your body, because it doesn’t respond like muscles, isn’t controllable like muscles, doesn’t have the same sensibility as muscles, etc. But every bit of fat stored in your body is stored inside a fat cell; it’s not one big unit of fat, but lots of tiny ones.

In reality, any given bit of fat on your body has probably been there for 18–24 months at most:

Fat turnover in obese slower than average

…and there are assorted factors that can modify the rate at which our body deals with fat storage:

Human white adipose tissue: A highly dynamic metabolic organ

So, how do I get rid of this tummy?

There are plenty of stories of people who try to lose weight from one part of their body, and lose it from somewhere else instead. Say, a person wants to lose weight from her hips, and with careful diet and exercise, she loses weight—by dropping a couple of bra cup sizes while keeping the hips.

So, we must figure out: why is fat stored in certain places? And the main driving factors are:

- hormones

- metabolic health

- stress

Hormones affect fat distribution insofar as estrogen and progesterone will favor the hips, thighs, butt, and breasts, while testosterone will favor a more central (but still subcutaneous, not visceral) distribution. Additionally, estrogen and progesterone will favor a higher body fat percentage, while testosterone will favor a lower one.

This is particularly relevant later in life, when the hormone(s) you’ve been relying on to keep your shape are suddenly declining, meaning your shape does too. This goes for everyone regardless of sex.

See:

- What You Should Have Been Told About The Menopause Beforehand

- The BAT-pause! ← this is about the conversion of white adipose tissue to brown adipose tissue, and how estrogen helps this happen

- Topping Up Testosterone?

Metabolic health affects fat distribution insofar as poor metabolic health will result in more fat being stored in the viscera, rather than in the usual subcutaneous places. This is a serious health risk.

See: Visceral Belly Fat & How To Lose It

Stress affects fat distribution insofar as chronically elevated cortisol levels see more fat sent to the stomach, face, and neck. This fat redistribution isn’t dangerous itself, but it can be indicative of the chronic stress, which does pose more of a general threat to health.

See: Lower Your Cortisol! (Here’s Why & How)

What this means in practical terms

Assuming that you would like the fat distribution that says “this is a healthy woman” or “this is a healthy man”, respectively, then you might want to:

- Check your sex hormone levels and get them adjusted if appropriate

- Improve your overall metabolic health, without necessarily trying to lose weight: take care of your blood sugars, for example, and they will take care of you in terms of fat storage.

- Manage your stress (which includes any stress you are experiencing about your body not being how you’d like it to be).

If you are doing these things, and you don’t have any major untreated medical abnormalities that affect these things, then your fat will go to the places generally considered healthiest.

Can we speed it up?

Yes, we can! Firstly, we can speed up our overall metabolism:

Let’s Burn! Metabolic Tweaks And Hacks

Secondly, we can encourage our body to “move” fat by intentionally “yo-yoing”, something usually considered bad in dieting when people just want to lose weight and instead are going up and down, but: if you lose weight healthily, it comes off everywhere evenly, and if you gain weight healthily, it goes mostly to the places where it should be.

So, a sequence of lose-gain-lose-gain might look like “lose a bit from everywhere, put it back in the good place, lose a bit more from everywhere, put it back in the good place”, etc.

So, you might want to gently cycle these a few months apart, for example:

How To Lose Fat (Healthily!) | How To Gain Fat (Healthily!)

You can also cheat a little, if it suits your purpose! By this we mean: if you’d like a little extra where you already have a little fat, then you can put muscle on underneath it, it will pad it up, and (because of the layer of actual fat on top) nobody will know the difference unless you flex it with their hand on it.

Let’s put it this way: people doing squats for a bubble-butt aren’t doing it to put on fat; they’re putting muscle on under the fat they have.

So, check out: How To Gain Muscle (Healthily!)

And finally, for all your body-sculpting needs, we present these excellent books:

Women’s Strength Training Anatomy Workouts – by Frédéric Delavier

Strength Training Anatomy (For Men) – by Frédéric Delavier

Enjoy!
