Castor Oil: All-Purpose Life-Changer, Or Snake Oil?

10almonds is reader-supported. We may, at no cost to you, receive a portion of sales if you purchase a product through a link in this article.

As “trending” health products go, castor oil is enjoying a lot of popularity presently, lauded as a life-changing miracle-worker, and social media is abuzz with advice to put it everywhere from your eyes to your vagina.

But:

- what things does science actually say it’s good for,

- what things lack evidence, and

- what things go into the category of “wow definitely do not do that”?

We don’t have the space to go into all of its proposed uses (there are simply far too many), but we’ll examine some common ones:

To heal/improve the skin barrier

Like most oils, it’s functional as a moisturizer. In particular, its high (90%!) ricinoleic acid content does indeed make it good at that, and ricinoleic acid furthermore has properties that can help reduce skin inflammation and promote wound healing:

Bioactive polymeric formulations for wound healing ← there isn’t a conveniently quotable summary we can just grab here, but you can see the data and results, from which we can conclude:

- formulations with ricinoleic acid (such as with castor oil) performed very well for topical anti-inflammatory purposes

- they avoided the unwanted side effects associated with some other contenders

- they consistently beat other preparations in the category of wound-healing

To support hair growth and scalp health

There is no evidence that it helps. We’d love to provide a citation for this, but it’s simply not there. There’s also no evidence that it doesn’t help. For whatever reason, despite its popularity, peer-reviewed science has simply not been done for this, or if it has, it isn’t publicly accessible anywhere.

It’s possible that, if a person’s hair loss is specifically the result of elevated prostaglandin D2 (PGD2) levels, ricinoleic acid could inhibit the PGD2 and so reverse the hair loss, but even this is hypothetical so far, as the science is currently only at the step before that:

However, due to some interesting chemistry, the combination of castor oil and warm water can result in acute (and irreversible) hair felting; in other words, the strands of hair suddenly glue together into a single mass, which then has to be cut off:

“Castor Oil” – The Culprit of Acute Hair Felting

👆 this is a case study, which is generally considered a low standard of evidence (compared to high-quality Randomized Controlled Trials as the highest standard of evidence), but let’s just say, this writer (hi, it’s me) isn’t risking her butt-length hair on the off-chance, and doesn’t advise you to, either. There are other hair-oils out there; argan oil is great, coconut oil is totally fine too.

As a laxative

This time, there’s a lot of evidence, and it’s even approved for this purpose by the FDA, but it can be a bit too good, insofar as taking too much can result in diarrhea and uncomfortable cramping (the cramps are a feature not a bug; the mechanism of action is stimulatory, i.e. it gets the intestines squeezing, but again, it can result in doing that too much for comfort):

Castor Oil: FDA-Approved Indications

To soothe dry eyes

While putting oil in your eyes may seem dubious, this is another one where it actually works:

❝Castor oil is deemed safe and tolerable, with strong anti-microbial, anti-inflammatory, anti-nociceptive, analgesic, antioxidant, wound healing and vasoconstrictive properties.

These can supplement deficient physiological tear film lipids, enabling enhanced lipid spreading characteristics and reducing aqueous tear evaporation.

Studies reveal that castor oil applied topically to the ocular surface has a prolonged residence time, facilitating increased tear film lipid layer thickness, stability, improved ocular surface staining and symptoms.❞

Source: Therapeutic potential of castor oil in managing blepharitis, meibomian gland dysfunction and dry eye

Against candidiasis (thrush)

We couldn’t find science for (or against) castor oil’s use against vaginal candidiasis, but here’s a study that investigated its use against oral candidiasis:

…in which castor oil was the only preparation that didn’t work against the yeast.

Summary

We left a lot unsaid today (so many proposed uses, it feels like a shame to skip them), but in few words: it’s good for skin (including wound healing) and eyes; but we’d give it a miss for hair, candidiasis, and digestive disorders.

Want to try some?

We don’t sell it, but here for your convenience is an example product on Amazon 😎

Take care!

Don’t Forget…

Did you arrive here from our newsletter? Don’t forget to return to the email to continue learning!


Learn to Age Gracefully

Join the 98k+ American women taking control of their health & aging with our 100% free (and fun!) daily emails:

-

Measles, Memory, & Mouths

Three important items from this week’s health news:

It’s not about obesity

This news is based on a rodent study, so we don’t know for sure if it’s applicable to humans yet, but there’s no reason to expect that it won’t be.

The crux of the matter is that, while it has long been assumed that obesity is the main driver of diet-related cognitive decline, it turns out that rats fed a high-fat diet (for either three days or three months) did much worse in memory tests.

This was observed in older rats, but not in younger ones—the researchers hypothesized that the younger rats benefited from their ability to activate compensatory anti-inflammatory responses, which the older rats could not.

Notably, three days of a high-fat diet wasn’t long enough to cause any metabolic problems or obesity, but markers of neuroinflammation skyrocketed immediately, and memory test scores declined just as quickly:

Read in full: High-fat diet could cause memory problems in older adults after just a few days

Related: Can Saturated Fats Be Healthy?

Vax, Lies, & Mortality Rates

Measles is making a comeback in the US.

100 cases were reported in Gaines County, TX, recently, with 1 death there so far (an unvaccinated child). And of course, it’s spreading; neighboring Lea County, NM, now has an outbreak of 30 confirmed cases, and 1 death there so far (an unvaccinated adult).

This comes alongside the rise of the anti-vax movement, which brings a lot of misleading rhetoric (and some claims that are simply factually incorrect), as well as an increase in “measles parties”, where children are deliberately exposed to measles in order to “get it out of the way” and acquire immunity. That technically does work if everyone survives, but the downside is that your child may die:

Read in full: New Mexico reports 30 measles cases a day after second US death in decade

Related: 4 Ways Vaccine Skeptics Mislead You on Measles and More

What your gums say about your hormones

Times of hormonal change (including menopause) can show in one’s gums, yet awareness of this is low:

❝Recent research shows that 84% of women over 50 did not know that menopause could affect their oral health; 70% of menopausal women reported at least one new oral health symptom (like dry mouth or sensitive gums), yet only 2% had discussed these issues with their dentist.❞

Because gum disease can progress painlessly for a long while, it’s very important to stay on top of any changes, and look for the cause (enlisting the help of your doctor and/or dentist), lest you find yourself very far into periodontal disease when it could have been stopped and reversed much more easily before getting that bad.

Different life stages’ hormonal changes have different effects; the article we’ll link below also lists puberty, menstrual variations, and pregnancy, but for brevity we’ll just quote what it says about menopause:

❝Menopause: the hormonal changes of menopause—primarily the drop in estrogen—can lead to oral health issues. Many menopausal women experience dry mouth, which increases the risk of cavities and gum disease, since saliva helps protect teeth. Gums may also recede or become more sensitive, and some women feel burning sensations in the mouth or changes in taste.❞

As for the rest…

Read in full: Gum health: A key indicator of women’s overall well-being

Related: How To Regrow Receding Gums

Take care!

-

The Insider’s Guide To Making Hospital As Comfortable As Possible

Nobody Likes Surgery, But Here’s How To Make It Much Less Bad

This is Dr. Chris Bonney. He’s an anesthesiologist. If you have a surgery, he wants you to go in feeling calm, and make a quick recovery afterwards, with minimal suffering in between.

Being a patient in a hospital is a bit like being a passenger in an airplane:

- Almost nobody enjoys the thing itself, but we very much want to get to the other side of the experience.

- We have limited freedoms and comforts, and small things can make a big difference between misery and tolerability.

- There are professionals present to look after us, but they are busy and have a lot of other people to tend to too.

So why is it that there are so many resources available full of “tips for travelers” and so few “tips for hospital patients”?

Especially given the relative risks of each, and the likelihood (or even near-certainty) of coming to at least some harm, one would think “tips for patients” would be more in demand!

Tips for surgery patients, from an insider expert

First, he advises us: empower yourself.

Empowering yourself in this context means:

- Relax—doctors really want you to feel better, quickly. They’re on your side.

- Research—knowledge is power, so research the procedure (and its risks!). Dr. Bonney, himself an anesthesiologist, particularly recommends you learn what specific anesthetic will be used (there are many, and they’re all a bit different!), and what effects (and/or after-effects) that may have.

- Reframe—you’re not just a patient; you’re a customer/client. Many people suffer from MDeity syndrome, and view doctors as authority figures, rather than what they are: service providers.

- Request—if something would make you feel better, ask for it. If it’s information, they will be not only obliged but also glad to give it. If it’s something else, they’ll oblige if they can; the worst-case scenario is that it won’t be possible, but you won’t know if you don’t ask.

Next up, help them to help you

There are various ways you can be a useful member of your own care team:

- Go into surgery as healthy as you can. If there’s ever a time to get a little fitter, eat a little healthier, and prioritize good-quality sleep, the run-up to your surgery is that time.

  - This will help to minimize complications and maximize recovery.

- Take with you any meds you’re taking, or at least an up-to-date list of them. Dr. Bonney has many times had patients tell him things like “Well, let me see. I have two little pink ones and a little white one…”, and when asked what they’re for, they tell him “I have no idea, you’d need to ask my doctor”.

  - Help them to help you: have your meds with you, or at least a comprehensive list (including medication name, dosage, frequency, and any special instructions).

- Don’t stop taking your meds unless told to do so. Many people have heard that one should stop taking meds before a surgery, and sometimes that’s true, but often it isn’t. Keep taking them, unless told otherwise.

  - If unsure, ask your surgical team in advance (not your own doctor, who will not be as familiar with what will or won’t interfere with a surgery).

Do any preparatory organization well in advance

Consider the following:

- What do you need to take with you? Medications, clothes, toiletries, phone charger, entertainment, headphones, paperwork, cash for the vending machine?

- Will the surgeons need to shave anywhere, and if so, might you prefer to do some other form of hair removal (e.g. waxing) yourself in advance?

- Is your list of medications ready?

- Who will take you to the hospital and who will bring you back?

- Who will stay with you for the first 24 hours after you’re sent home?

- Is someone available to look after your kids/pets/plants etc?

Be aware of how you do (and don’t) need to fast before surgery

The American Society of Anesthesiologists gives the following fasting guidelines:

- Clear (non-food) liquids, such as water or black coffee/tea: fast for at least 2 hours before surgery

- Food liquids or light snacks: fast for at least 6 hours before surgery

- Fried foods, fatty foods, meat: fast for at least 8 hours before surgery

(see the above link for more details)

Dr. Bonney notes that he has often seen patients suffering terrible thirst, or caffeine-withdrawal headaches, because they unnecessarily abstained for the whole day of the surgery.

Unless told otherwise by your surgical team, you can have black coffee/tea up until two hours before your surgery, and you can and should have water up until two hours before surgery.

Hydration is good for you and you will feel the difference!
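For anyone who likes to plan ahead, the three ASA fasting windows listed above can be turned into “last time I can have this” cutoffs with a few lines of Python. This is a minimal sketch under stated assumptions: the category labels and the example 9:30am surgery time are our own illustrative choices, and it encodes only the three rules above, so any instructions from your own surgical team take precedence.

```python
from datetime import datetime, timedelta

# Minimum fasting windows (in hours) from the ASA guidelines listed above.
# The category labels are this sketch's own wording, not official ASA terms.
FASTING_HOURS = {
    "clear liquids (water, black coffee/tea)": 2,
    "food liquids or light snacks": 6,
    "fried or fatty foods, meat": 8,
}


def last_intake_times(surgery_time: datetime) -> dict[str, datetime]:
    """Return the latest time each category may be consumed before surgery_time."""
    return {
        category: surgery_time - timedelta(hours=hours)
        for category, hours in FASTING_HOURS.items()
    }


if __name__ == "__main__":
    # Hypothetical example: a surgery booked for 9:30am on 14 March 2025
    surgery = datetime(2025, 3, 14, 9, 30)
    for category, cutoff in last_intake_times(surgery).items():
        print(f"{category}: last intake by {cutoff:%H:%M}")
```

Subtracting fixed windows from the surgery time, rather than writing down clock times, means the same function works just as well for an afternoon slot.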

Want to know more?

Dr. Bonney has his own website and blog, where he offers lots of advice, including for specific conditions and specific surgeries, with advice for before/during/after your hospital stay.

He also has a book with many more tips like those we shared today:

Calm For Surgery: Supertips For A Smooth Recovery

Take good care of yourself!

-

Is Chiropractic All It’s Cracked Up To Be?

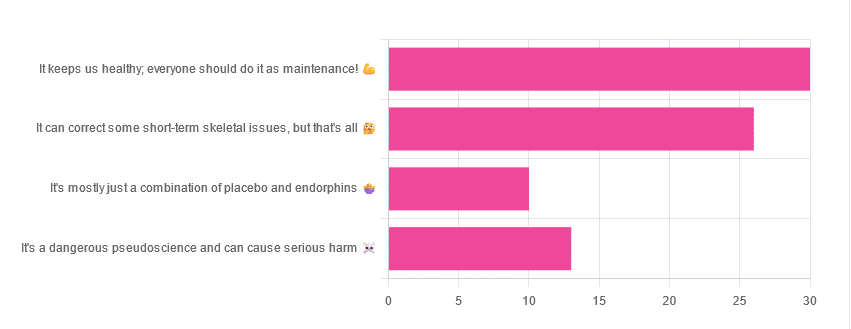

Yesterday, we asked you for your opinions on chiropractic medicine, and got the above-depicted, below-described set of results:

- 38% of respondents said it keeps us healthy, and everyone should do it as maintenance

- 33% of respondents said it can correct some short-term skeletal issues, but that’s all

- 16% of respondents said that it’s a dangerous pseudoscience and can cause serious harm

- 13% of respondents said that it’s mostly just a combination of placebo and endorphins

Respondents also shared personal horror stories of harm done, personal success stories of things cured, and personal “it didn’t seem to do anything for me” stories.

What does the science say?

It’s a dangerous pseudoscience and can cause harm: True or False?

False and True, respectively.

That is to say, chiropractic in its simplest form, making the fewest claims, is not a pseudoscience. If somebody physically moves your bones around, your bones will be physically moved. If your bones were indeed misaligned, and the chiropractor is knowledgeable and competent, this will be for the better.

However, like any form of medicine, it can also cause harm; in chiropractic’s case, because it more often than not involves manipulation of the spine, this can be very serious:

❝Twenty six fatalities were published in the medical literature and many more might have remained unpublished.

The reported pathology usually was a vascular accident involving the dissection of a vertebral artery.

Conclusion: Numerous deaths have occurred after chiropractic manipulations. The risks of this treatment by far outweigh its benefit.❞

Source: Deaths after chiropractic: a review of published cases

From this, we might note two things:

- The abstract doesn’t note the initial sample size; we would rather have seen this information expressed as a percentage. Unfortunately, the full paper is not accessible, nor are many of the papers it cites.

- Having a vertebral artery fatally dissected is nevertheless not an inviting prospect, and is certainly a very reasonable cause for concern.

It’s mostly just a combination of placebo and endorphins: True or False?

True or False, depending on what you went in for:

- If you went in for a regular maintenance clunk-and-click, then yes, you will get your clunk-and-click and feel better for it because you had a ritualized* experience and endorphins were released.

- If you went in for something that was actually wrong with your skeletal alignment, to get it corrected, and this correction was within your chiropractor’s competence, then yes, you will feel better because a genuine fault was corrected.

*This is not implying any mysticism, by the way. Rather, it simply means that the placebo effect is strongest when there is a ritual associated with it. In this case, that means going to the place, sitting in a pleasant waiting room, being called in, removing your shoes and perhaps some other clothes, getting the full attention of a confident and assured person for a while, that sort of thing.

With regard to its use to combat specifically spinal pain (i.e., perhaps the most obvious thing to treat by chiropractic spinal manipulation), evidence is slightly in favor, but remains unclear:

❝Due to the low quality of evidence, the efficacy of chiropractic spinal manipulation compared with a placebo or no treatment remains uncertain.❞

Source: Clinical Effectiveness and Efficacy of Chiropractic Spinal Manipulation for Spine Pain

It can correct some short-term skeletal issues, but that’s all: True or False?

Probably True.

Why “probably”? The effectiveness of chiropractic treatment for things other than short-term skeletal issues has barely been studied. So while we may wish to keep an open mind, we should also note that it can hardly claim to be evidence-based, and it’s had well over a century (chiropractic was founded in the 1890s) to accumulate evidence. In all likelihood, publication bias has meant that studies that were conducted and found inconclusive or negative results were simply not published, but that’s just a hypothesis on our part.

In the case of using chiropractic to treat migraines, a very-related-but-not-skeletal issue, researchers found:

❝Pre-specified feasibility criteria were not met, but deficits were remediable. Preliminary data support a definitive trial of MCC+ for migraine.❞

Translating this: “it didn’t score as well as we hoped, but we can do better. We got some positive results, and would like to do another, bigger, better trial; please fund it”

Source: Multimodal chiropractic care for migraine: A pilot randomized controlled trial

Meanwhile, chiropractors’ claims for very unrelated things have been harshly criticized by the scientific community, for example:

Misinformation, chiropractic, and the COVID-19 pandemic

About that “short-term” aspect, one of our subscribers put it quite succinctly:

❝Often a skeletal correction is required for initial alignment but the surrounding fascia and muscles also need to be treated to mobilize the joint and release deep tissue damage surrounding the area. In combination with other therapies chiropractic support is beneficial.❞

This is, by the way, very consistent with what was said in the very clinically dense book we reviewed yesterday, which has a chapter on the short-term benefits and limitations of chiropractic.

A truism that holds for many musculoskeletal healthcare matters, holds true here too:

❝In a battle between muscle and bone, muscle will always win❞

In other words…

Chiropractic can definitely help put misaligned bones back where they should be. However, once they’re there, if the cause of their misalignment is not treated, they will just re-misalign themselves shortly after you walk out of your session.

This is great for chiropractors, if it keeps you coming back for endless appointments, but it does little for your body beyond giving you a brief respite.

So, by all means go to a chiropractor if you feel so inclined (and you do not fear accidental arterial dissection etc), but please also consider going to a physiotherapist, and potentially other medical professionals depending on what seems to be wrong, to see about addressing the underlying cause.

Take care!

-

Mung Beans vs Black Gram – Which is Healthier?

Our Verdict

When comparing mung beans to black gram, we picked the black gram.

Why?

Both are great, and it was close!

In terms of macros, the main difference is that mung beans have slightly more fiber, while black gram has slightly more protein. So, it comes down to which we prioritize out of those two, and we’re going to call it fiber and thus hand the win in this category to mung beans—but it’s very close in either case.

In the category of vitamins, mung beans have more of vitamins B1, B6, and B9, while black gram has more of vitamins A, B2, B3, and B5. They’re equal on vitamins C, E, K, and choline. So, a marginal victory by the numbers for black gram here.

When it comes to minerals, mung beans have more copper and potassium, while black gram has more calcium, iron, magnesium, manganese, and phosphorus. They’re equal on selenium and zinc. Another win for black gram.

Adding up the sections makes for an overall win for black gram, but by all means enjoy either or both; diversity is good!

Want to learn more?

You might like to read:

What’s Your Plant Diversity Score?

Enjoy!

-

Strength training has a range of benefits for women. Here are 4 ways to get into weights

Picture a gym ten years ago: the weights room was largely a male-dominated space, with women mostly doing cardio exercise. Fast-forward to today and you’re likely to see women of all ages and backgrounds confidently navigating weights equipment.

This is more than just anecdotal. According to data from the Australian Sports Commission, the number of women participating in weightlifting (either competitively or not) grew nearly five-fold between 2016 and 2022.

Women are discovering what research has long shown: strength training offers benefits beyond sculpted muscles.

Health benefits

Osteoporosis, a disease in which the bones become weak and brittle, affects more women than men. Strength training increases bone density, a crucial factor for preventing osteoporosis, especially for women negotiating menopause.

Strength training also improves insulin sensitivity, which means your body gets better at using insulin to manage blood sugar levels, reducing the risk of type 2 diabetes. Regular strength training contributes to better heart health too.

There’s a mental health boost as well. Strength training has been linked to reduced symptoms of depression and anxiety.

Improved confidence and body image

Unlike some forms of exercise where progress can feel elusive, strength training offers clear and tangible measures of success. Each time you add more weight to a bar, you are reminded of your ability to meet your goals and conquer challenges.

This sense of achievement doesn’t just stay in the gym – it can change how women see themselves. A recent study found women who regularly lift weights often feel more empowered to make positive changes in their lives and feel ready to face life’s challenges outside the gym.

Strength training also has the potential to positively impact body image. In a world where women are often judged on appearance, lifting weights can shift the focus to function.

Instead of worrying about the number on the scale or fitting into a certain dress size, women often come to appreciate their bodies for what they can do. “Am I lifting more than I could last month?” and “can I carry all my groceries in a single trip?” may become new measures of physical success.

Lifting weights can also be about challenging outdated ideas of how women “should” be. Qualitative research I conducted with colleagues found that, for many women, strength training becomes a powerful form of rebellion against unrealistic beauty standards. As one participant told us:

I wanted something that would allow me to train that just didn’t have anything to do with how I looked.

Society has long told women to be small, quiet and not take up space. But when a woman steps up to a barbell, she’s pushing back against these outdated rules. One woman in our study said:

We don’t have to […] look a certain way, or […] be scared that we can lift heavier weights than some men. Why should we?

This shift in mindset helps women see themselves differently. Instead of worrying about being objects for others to look at, they begin to see their bodies as capable and strong. Another participant explained:

Powerlifting changed my life. It made me see myself, or my body. My body wasn’t my value, it was the vehicle that I was in to execute whatever it was that I was executing in life.

This newfound confidence often spills over into other areas of life. As one woman said:

I love being a strong woman. It’s like going against the grain, and it empowers me. When I’m physically strong, everything in the world seems lighter.

Feeling inspired? Here’s how to get started

1. Take things slow

Begin with bodyweight exercises like squats, lunges and push-ups to build a foundation of strength. Once you’re comfortable, add external weights, but keep them light at first. Focus on mastering compound movements, such as deadlifts, squats and overhead presses. These exercises engage multiple joints and muscle groups simultaneously, making your workouts more efficient.

2. Prioritise proper form

Always prioritise proper form over lifting heavier weights. Poor technique can lead to injuries, so learning the correct way to perform each exercise is crucial. To help with this, consider working with an exercise professional who can provide personalised guidance and ensure you’re performing exercises correctly, at least initially.

3. Consistency is key

Like any fitness regimen, consistency is key. Two to three sessions a week are plenty for most women to see benefits. And don’t be afraid to occupy space in the weights room – remember you belong there just as much as anyone else.

4. Find a community

Finally, join a community. There’s nothing like being surrounded by a group of strong women to inspire and motivate you. Engaging with a supportive community can make your strength-training journey more enjoyable and rewarding, whether it’s an in-person class or an online forum.

Are there any downsides?

Gym memberships can be expensive, especially for specialist weightlifting gyms. Home equipment is an option, but quality barbells and weightlifting equipment can come with a hefty price tag.

Also, for women juggling work and family responsibilities, finding time to get to the gym two to three times per week can be challenging.

If you’re concerned about getting too “bulky”, it’s very difficult for women to bulk up like male bodybuilders without pharmaceutical assistance.

The main risks come from poor technique or trying to lift too much too soon – issues that can be easily avoided with some guidance.

Erin Kelly, Lecturer and PhD Candidate, Discipline of Sport and Exercise Science, University of Canberra

This article is republished from The Conversation under a Creative Commons license. Read the original article.

-

Why do some people’s hair and nails grow quicker than mine?

Throughout recorded history, our hair and nails have played an important role in signifying who we are and our social status. You could say they separate the caveman from the businessman.

It was no surprise then that many of us found a new level of appreciation for our hairdressers and nail artists during the COVID lockdowns. Even Taylor Swift reported she cut her own hair during lockdown.

So, what would happen if all this hair and nail grooming became too much for us and we decided to give it all up? Would our hair and nails just keep on growing?

The answer is yes. The hair on our head grows, on average, 1 centimetre per month, while our fingernails grow an average of just over 3 millimetres per month.

When left unchecked, our hair and nails can grow to impressive lengths. Aliia Nasyrova, known as the Ukrainian Rapunzel, holds the world record for the longest locks on a living woman, which measure an impressive 257.33 cm.

When it comes to record-breaking fingernails, Diana Armstrong from the United States holds that record, with a combined length of 1,306.58 cm across both hands.
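To put the hair record in perspective, here’s a rough back-of-the-envelope calculation in Python. It assumes hair grows at a constant 1 cm per month, the average quoted above; real growth varies between people and, as explained further down, happens in cycles, so treat the result as an order-of-magnitude estimate only.

```python
# Average scalp hair growth rate quoted above (assumed constant for this estimate)
HAIR_CM_PER_MONTH = 1.0


def years_to_grow(length_cm: float, rate_cm_per_month: float = HAIR_CM_PER_MONTH) -> float:
    """Years needed to reach length_cm, assuming uninterrupted growth at a constant rate."""
    months = length_cm / rate_cm_per_month
    return months / 12


# The record-holding 257.33 cm locks, at the average rate
print(f"{years_to_grow(257.33):.1f} years")
```

At the average rate, that works out to roughly 21 years of uninterrupted growth.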

Most of us, however, get regular haircuts and trim our nails – some with greater frequency than others. So why do some people’s hair and nails grow more quickly?

Remind me, what are they made out of?

Hair and nails are made mostly from keratin. Both grow from matrix cells below the skin and grow through different patterns of cell division.

Nails grow steadily from the matrix cells, which sit under the skin at the base of the nail. These cells divide, pushing the older cells forward. As they grow, the new cells slide along the nail bed – the flat area under the fingernail which looks pink because of its rich blood supply.

A hair also starts growing from the matrix cells, eventually forming the visible part of the hair – the shaft. The hair shaft grows from a root that sits under the skin and is wrapped in a sac known as the hair follicle.

This sac has a nerve supply (which is why it hurts to pull out a hair), oil-producing glands that lubricate the hair and a tiny muscle that makes your hair stand up when it’s cold.

At the follicle’s base is the hair bulb, which contains the all-important hair papilla that supplies blood to the follicle.

Matrix cells near the papilla divide to produce new hair cells, which then harden and form the hair shaft. As the new hair cells are made, the hair is pushed up above the skin and the hair grows.

But the papilla also plays an integral part in regulating hair growth cycles, as it sends signals to the stem cells to move to the base of the follicle and form a hair matrix. Matrix cells then get signals to divide and start a new growth phase.

Unlike nails, our hair grows in cycles

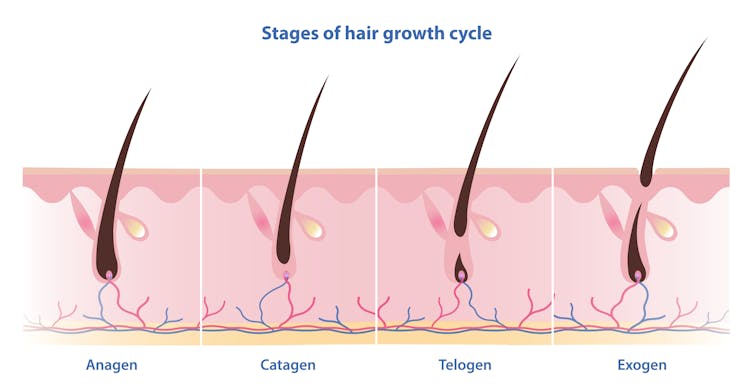

Scientists have identified four phases of hair growth:

- anagen or growth phase, which lasts between two and eight years

- catagen or transition phase, when growth slows down, lasting around two weeks

- telogen or resting phase, when there is no growth at all. This usually lasts two to three months

- exogen or shedding phase, when the hair falls out and is replaced by the new hair growing from the same follicle. This starts the process all over again.

Each follicle goes through this cycle 10–30 times in its lifespan.

If all of our hair follicles grew at the same rate and entered the same phases simultaneously, there would be times when we would all be bald. That doesn’t usually happen: at any given time, only one in ten hairs is in the resting phase.

While we lose about 100–150 hairs daily, the average person has 100,000 hairs on their head, so we barely notice this natural shedding.
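The figures above also roughly fit together: if about one in ten of 100,000 scalp hairs is resting at any moment, and each rests for two to three months before being shed, a simple steady-state estimate gives the number shed per day. This Python sketch assumes 30-day months and that every resting hair is shed once its resting phase ends, which is a simplification of the cycle described above.

```python
def daily_shedding_estimate(total_hairs: int, resting_fraction: float, resting_days: float) -> float:
    """Steady-state estimate of hairs shed per day.

    If a constant number of hairs is resting and each rests for resting_days
    before falling out, then about (resting hairs / resting_days) are shed daily.
    """
    resting_hairs = total_hairs * resting_fraction
    return resting_hairs / resting_days


# Figures from the text: 100,000 hairs, 1 in 10 resting, resting phase of 2-3 months
for resting_days in (60, 90):  # assumes 30-day months
    estimate = daily_shedding_estimate(100_000, 0.10, resting_days)
    print(f"{resting_days}-day resting phase: ~{estimate:.0f} hairs shed per day")
```

That comes out at roughly 110 to 170 hairs a day, in the same ballpark as the 100–150 quoted above.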

So what affects the speed of growth?

Genetics is the most significant factor. While hair growth rates vary between individuals, they tend to be consistent among family members.

Nails are also influenced by genetics, as siblings, especially identical twins, tend to have similar nail growth rates.

But there are also other influences.

Age makes a difference to hair and nail growth, even in healthy people. Younger people generally have faster growth rates, because metabolism and cell division slow down with ageing.

Hormonal changes can have an impact. Pregnancy often accelerates hair and nail growth rates, while menopause and high levels of the stress hormone cortisol can slow growth rates.

Nutrition also changes hair and nail strength and growth rate. While hair and nails are made mostly of keratin, they also contain water, fats and various minerals. As hair and nails keep growing, these minerals need to be replaced.

That’s why a balanced diet that includes sufficient nutrients to support your hair and nails is essential for maintaining their health.

Nutrient deficiencies may contribute to hair loss and nail breakage by disrupting their growth cycle or weakening their structure. Iron and zinc deficiencies, for example, have both been linked to hair loss and brittle nails.

This may explain why thick hair and strong, well-groomed nails have long been associated with perceptions of good health and high status.

However, not all perceptions are true.

No, hair and nails don’t grow after death

A persistent myth that may relate to the legends of vampires is that hair and nails continue to grow after we die.

In reality, they only appear to do so. As the body dehydrates after death, the skin shrinks, making hair and nails seem longer.

Morticians are well aware of this phenomenon and some inject tissue filler into the deceased’s fingertips to minimise this effect.

So, it seems that living or dead, there is no escape from the never-ending task of caring for our hair and nails.

Michelle Moscova, Adjunct Associate Professor, Anatomy, UNSW Sydney

This article is republished from The Conversation under a Creative Commons license. Read the original article.
