Taking A Trip Through The Evidence On Psychedelics

10almonds is reader-supported. We may, at no cost to you, receive a portion of sales if you purchase a product through a link in this article.

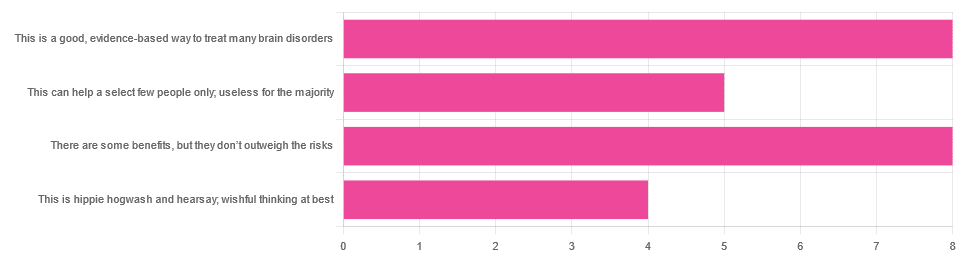

In Tuesday’s newsletter, we asked you for your opinions on the medicinal use of psychedelics, and got the above-depicted, below-described set of responses:

- 32% said “This is a good, evidence-based way to treat many brain disorders”

- 32% said “There are some benefits, but they don’t outweigh the risks”

- 20% said “This can help a select few people only; useless for the majority”

- 16% said “This is hippie hogwash and hearsay; wishful thinking at best”

Quite a spread of answers, so what does the science say?

This is hippie hogwash and hearsay; wishful thinking at best: True or False?

False! We’re tackling this one first, because it’s easiest to answer:

There are some moderately well-established (usually moderate) clinical benefits from some psychedelics for some people.

If that sounds like a very guarded statement, it is. Part of this is because “psychedelics” is an umbrella term; perhaps we should have conducted separate polls for psilocybin, MDMA, ayahuasca, LSD, ibogaine, etc, etc.

In fact: maybe we will do separate main features for some of these, as there is a lot to say about each of them separately.

Nevertheless, looking at the spread of research as it stands for psychedelics as a category, the answers are often similar across the board, even when the benefits/risks may differ from drug to drug.

To speak in broad terms, if we were to make a research summary for each drug, it would look approximately like this:

- there has been research into this, but not nearly enough: “the war on drugs” may well have manifestly been lost (the winner of the war being: drugs; still around and more plentiful than ever), but it did really cramp science for a few decades.

- the studies are often small, with homogeneous samples (often moderately wealthy, white, student-age populations), and with a low standard of evidence (i.e. the methodology often has some holes that leave room for reasonable doubt).

- the benefits recorded are often small and transient.

- in their favor, though, the risks are also generally recorded as being quite low, assuming proper, safe administration*.

*Illustrative example:

Person A takes MDMA in a club, dances their cares away, has had only alcohol to drink, sweats buckets but they don’t care because they love everyone and they see how we’re all one really and it all makes sense to them and then they pass out from heat exhaustion and dehydration and suffer kidney damage (not to mention a head injury when falling) and are hospitalized and could die;

Person B takes MDMA in a lab, is overwhelmed with a sense of joy and the clarity of how their participation in the study is helping humanity; they want to hug the researcher and express their gratitude; the researcher reminds them to drink some water.

Which is not to say that a lab is the only safe manner of administration; there are many possible setups for supervised usage sites. But it does mean that many of the risks are as much environmental as they are inherent to the drug.

Other risks are inherent to the drug itself, such as adverse cardiac events with some drugs (ibogaine, for example, definitely needs medical supervision).

For those who’d like to see numbers and clinical examples of the bullet points we gave above, here you go; this is a great (and very readable) overview:

NIH | Evidence Brief: Psychedelic Medications for Mental Health and Substance Use Disorders

Notwithstanding the word “brief” (intended in the sense of: briefing), this is not especially brief; it is rather an entire book (available for free, right there!), but we do recommend reading it if you have time.

This can help a select few people only; useless for the majority: True or False?

True, technically, insofar as the evidence points to these drugs being useful for such things as depression, anxiety, PTSD, addiction, etc, and the proportion of people who struggle with mental health issues in general is often cited as 1 in 4, or 1 in 5. Of course, many people may just have moderate anxiety, or a transient period of depression, etc; many others, meanwhile, have it worse.

In short: a very large minority of people suffer from mental health issues, and for each issue, there may be one or more psychedelics that could help.

This is a good, evidence-based way to treat many brain disorders: True or False?

True if and only if we’re willing to accept the (so far weak) evidence that we discussed above; false otherwise, while the jury remains out.

One thing in its favor, though, is that while the evidence is weak, it’s not contradictory: the large preponderance of evidence says such therapies probably do work (there aren’t many studies that returned negative results); the evidence is just weak.

When a thousand scientists say “we’re not completely sure, but this looks like it helps; we need to do more research”, then it’s good to believe them on all counts—the positivity and the uncertainty.

This is a very different picture from the one we saw when looking at, say, ear candling or homeopathy (things that the evidence says simply do not work).

We haven’t been linking individual studies so far, because that book we linked above has many, and the number of studies we’d have to list would be:

n = (number of kinds of psychedelic drugs) × (number of conditions to be treated)

e.g. how does psilocybin fare for depression, eating disorders, anxiety, addiction, PTSD, this, that, the other; now how does ayahuasca fare for each of those, and so on for each drug and condition. That comes to at least 25 or 30 studies as a baseline, and we don’t have the room.

But here are a few samples to finish up:

- Psilocybin as a New Approach to Treat Depression and Anxiety in the Context of Life-Threatening Diseases—A Systematic Review and Meta-Analysis of Clinical Trials

- Therapeutic Use of LSD in Psychiatry: A Systematic Review of Randomized-Controlled Clinical Trials

- Efficacy of Psychoactive Drugs for the Treatment of Posttraumatic Stress Disorder: A Systematic Review of MDMA, Ketamine, LSD and Psilocybin

- Changes in self-rumination and self-compassion mediate the effect of psychedelic experiences on decreases in depression, anxiety, and stress

- Psychedelic Treatments for Psychiatric Disorders: A Systematic Review and Thematic Synthesis of Patient Experiences in Qualitative Studies

- Repeated lysergic acid diethylamide (LSD) reverses stress-induced anxiety-like behavior, cortical synaptogenesis deficits and serotonergic neurotransmission decline

In closing…

The general scientific consensus is presently “many of those drugs may ameliorate many of those conditions, but we need a lot more research before we can say for sure”.

On a practical level, an important take-away from this is twofold:

- drugs, even those popularly considered recreational, aren’t ontologically evil, generally do have putative merits, and have been subject to a lot of dramatization/sensationalization, especially by the US government in its famous war on drugs.

- drugs, even those popularly considered beneficial and potentially life-changingly good, are still capable of doing great harm if mismanaged; so if you’re putting aside “don’t do drugs” as propaganda of the past, then please do still hold onto “don’t do drugs alone”: trained professional supervision is a must for safety.

Take care!

Don’t Forget…

Did you arrive here from our newsletter? Don’t forget to return to the email to continue learning!

Recommended

Learn to Age Gracefully

Join the 98k+ American women taking control of their health & aging with our 100% free (and fun!) daily emails:

Beetroot vs Pumpkin – Which is Healthier?

Our Verdict

When comparing beetroot to pumpkin, we picked the beetroot.

Why?

It was close! And an argument could be made for either.

In terms of macros, beetroot has about 3x more protein and about 3x more fiber, as well as about 2x more carbs, making it the “more food per food” option. While both have a low glycemic index, we picked the beetroot here for its better numbers overall.

In the category of vitamins, beetroot has more of vitamins B6 and B9, while pumpkin has more of vitamins A, B2, B3, B5, E, and K. So, a fair win for pumpkin this time.

When it comes to minerals, though, beetroot has more calcium, iron, magnesium, manganese, phosphorus, potassium, selenium, and zinc, while pumpkin has a tiny bit more copper. An easy win for beetroot here.

In short, both are great, and although pumpkin shines in the vitamin category, beetroot wins on overall nutritional density.

Want to learn more?

You might like to read:

No, beetroot isn’t vegetable Viagra. But here’s what it can do

Take care!

Ageless – by Dr. Andrew Steele

So, yet another book with “The new science of…” in the title; does this one deliver new science?

Actually, yes, this time! The author was originally a physicist before deciding that aging was the number one problem that needed solving; he switched tracks to computational biology and pioneered a lot of research, some of the fruits of which can be found in this book, amid a more general history of the (very young!) field of biogerontology.

Downside: most of this is not very practical for the lay reader; most of it is explanations of how things happen on a cellular and/or genetic level, and how we learned that. A lot also pertains to what we can learn from animals that either age very slowly, or are biologically immortal (in other words, they can still be killed, but they don’t age and won’t die of anything age-related), or are immune to cancer—and how we might borrow those genes for gene therapy.

However, there are also chapters on such things as “running repairs”, “reprogramming aging”, and “how to live long enough to live even longer”.

The style is conversational pop science; in the prose, he simply states things without reference, but at the back, there are 40 pages of bibliography, listed in the order in which the claims appear, with each entry prefaced by the statement it supports. It’s an odd way to do citations, but it works comfortably enough.

Bottom line: if you’d like to understand aging on the cellular level, and how we know what we know and what the likely future possibilities are, then this is a great book; it’s also simply very enjoyable to read, assuming you have an interest in the topic (as this reviewer does).

Click here to check out Ageless, and understand the science of getting older without getting old!

Serotonin vs Dopamine (Know The Differences)

Of the various neurotransmitters that people confuse with each other, serotonin and dopamine are the two highest on the list (with oxytocin coming third as people often attribute its effects to serotonin). But, for all they are both “happiness molecules”, serotonin and dopamine are quite different, and are even opposites in some ways:

More than just happiness

Let’s break it down:

Similarities:

- Both are neurotransmitters, neuromodulators, and monoamines.

- Both impact cognition, mood, energy, behavior, memory, and learning.

- Both influence social behavior, though in different ways.

Differences (settle in; there are many):

- Chemical structure:

  - Dopamine: catecholamine (derived from phenylalanine and tyrosine)

  - Serotonin: indoleamine (derived from tryptophan)

- Derivatives:

  - Dopamine → noradrenaline and adrenaline (stress and alertness)

  - Serotonin → melatonin (sleep and circadian rhythm)

- Effects on mental state:

  - Dopamine: drives action, motivation, and impulsivity.

  - Serotonin: promotes calmness, behavioral inhibition, and cooperation.

- Role in memory and learning:

  - Dopamine: key in attention and working memory

  - Serotonin: crucial for hippocampus activation and long-term memory

Symptoms of imbalance:

- Low dopamine:

  - Loss of motivation, focus, emotion, and activity

  - Linked to Parkinson’s disease and ADHD

- Low serotonin:

  - Sadness, irritability, poor sleep, and digestive issues

  - Linked to PTSD, anxiety, and OCD

- High dopamine:

  - Excessive drive, impulsivity, addictions, psychosis

- High serotonin:

  - Nervousness, nausea, and in extreme cases, serotonin syndrome (which can be fatal)

Brain networks:

- Dopamine: four pathways controlling movement, attention, executive function, and hormones.

- Serotonin: widely distributed across the cortex, partially overlapping with dopamine systems.

Speed of production:

- Dopamine: can spike and deplete quickly; fatigues faster with overuse.

- Serotonin: more stable, releasing steadily over longer periods.

Illustrative examples:

- Coffee boosts dopamine but loses its effect with repeated use.

- Sunlight helps maintain serotonin levels over time.

If you remember nothing else, remember this:

- Dopamine: action, motivation, and alertness.

- Serotonin: contentment, happiness, and calmness.

For more on all of the above, enjoy:

Click Here If The Embedded Video Doesn’t Load Automatically!

Want to learn more?

You might also like to read:

Take care!

Related Posts

The 5 Resets – by Dr. Aditi Nerurkar

What this book isn’t: advice to go on a relaxing meditation retreat, or something like that.

What this is: a science-based guide to what actually works.

There’s no need to be mysterious, so we’ll mention that the titular “5 resets” are:

- What matters most

- Quiet in a noisy world

- Leveraging the brain-body connection

- Coming up for air (regaining perspective)

- Bringing your best self forward

All of these are things we can easily lose sight of in the hustle and bustle of daily life, so having a system for keeping them on track can make a huge difference!

The style is personable and accessible, while providing a lot of strongly science-backed tips and tricks along the way.

Bottom line: if life gets away from you a little too often for comfort, this book can help you keep on top of things with a lot less stress.

Click here to check out “The 5 Resets”, and take control with conscious calm!

4 Critical Things Female Runners Should Know

When it comes to keeping up performance in the face of menopause, Shona Hendricks has advice:

Don’t let menopause run you down

- Prioritize recovery! Overtraining without adequate recovery just leads to decreased performance in the long term, and remember, you may not recover as quickly as you used to. If you’re still achy from your previous run, give it another day, or at least make it a lighter run.

- Slow down in easy and long runs! This isn’t “taking the easy way out”; it will improve your overall performance by reducing muscle damage, allowing for quicker recovery, and ultimately yielding better fitness gains.

- Focus on nutrition! And that means carbs too. A lot of people fighting menopausal weight gain reduce their food intake, but without sufficient energy availability, you will not be able to run well. In particular, carbohydrates are vital for energy. Consume them sensibly, alongside fiber, protein, and fats rather than alone, but do consume them.

- Incorporate strength training! Your run is not “leg day” by itself. Furthermore, do whole-body strength training, to prevent injuries and improve overall performance. A strong core is particularly important.

For more on each of these (and some bonus comments about mobility training for runners), enjoy:

Click Here If The Embedded Video Doesn’t Load Automatically!

Want to learn more?

You might also like to read:

Take care!

Terminal lucidity: why do loved ones with dementia sometimes ‘come back’ before death?

Dementia is often described as “the long goodbye”. Although the person is still alive, dementia slowly and irreversibly chips away at their memories and the qualities that make someone “them”.

Dementia eventually takes away the person’s ability to communicate, eat and drink on their own, understand where they are, and recognise family members.

Since as early as the 19th century, stories from loved ones, caregivers and health-care workers have described some people with dementia suddenly becoming lucid. They have described the person engaging in meaningful conversation, sharing memories that were assumed to have been lost, making jokes, and even requesting meals.

It is estimated 43% of people who experience this brief lucidity die within 24 hours, and 84% within a week.

Why does this happen?

Terminal lucidity or paradoxical lucidity?

In 2009, researchers Michael Nahm and Bruce Greyson coined the term “terminal lucidity”, since these lucid episodes often occurred shortly before death.

But not all lucid episodes indicate death is imminent. One study found many people with advanced dementia will show brief glimmers of their old selves more than six months before death.

Lucidity has also been reported in other conditions that affect the brain or thinking skills, such as meningitis, schizophrenia, and in people with brain tumours or who have sustained a brain injury.

Moments of lucidity that do not necessarily indicate death are sometimes called paradoxical lucidity. It is considered paradoxical as it defies the expected course of neurodegenerative diseases such as dementia.

But it’s important to note these episodes of lucidity are temporary and sadly do not represent a reversal of neurodegenerative disease.

Why does terminal lucidity happen?

Scientists have struggled to explain why terminal lucidity happens. Some episodes of lucidity have been reported to occur in the presence of loved ones. Others have reported that music can sometimes improve lucidity. But many episodes of lucidity do not have a distinct trigger.

A research team from New York University speculated that changes in brain activity before death may cause terminal lucidity. But this doesn’t fully explain why people suddenly recover abilities that were assumed to be lost.

Paradoxical and terminal lucidity are also very difficult to study. Not everyone with advanced dementia will experience episodes of lucidity before death. Lucid episodes are also unpredictable and typically occur without a particular trigger.

And as terminal lucidity can be a joyous time for those who witness the episode, it would be unethical for scientists to use that time to conduct their research. At the time of death, it’s also difficult for scientists to interview caregivers about any lucid moments that may have occurred.

Explanations for terminal lucidity extend beyond science. These moments of mental clarity may be a way for the dying person to say final goodbyes, gain closure before death, and reconnect with family and friends. Some believe episodes of terminal lucidity are representative of the person connecting with an afterlife.

Why is it important to know about terminal lucidity?

People can have a variety of reactions to seeing terminal lucidity in a person with advanced dementia. While some will experience it as being peaceful and bittersweet, others may find it deeply confusing and upsetting. There may also be an urge to modify care plans and request lifesaving measures for the dying person.

Being aware of terminal lucidity can help loved ones understand it is part of the dying process, acknowledge the person with dementia will not recover, and allow them to make the most of the time they have with the lucid person.

For those who witness it, terminal lucidity can be a final, precious opportunity to reconnect with the person that existed before dementia took hold and the “long goodbye” began.

Yen Ying Lim, Associate Professor, Turner Institute for Brain and Mental Health, Monash University and Diny Thomson, PhD (Clinical Neuropsychology) Candidate and Provisional Psychologist, Monash University

This article is republished from The Conversation under a Creative Commons license. Read the original article.
