MSG vs. Salt: Sodium Comparison

10almonds is reader-supported. We may, at no cost to you, receive a portion of sales if you purchase a product through a link in this article.

It’s Q&A Day at 10almonds!

Q: Is MSG healthier than salt in terms of sodium content or is it the same or worse?

Great question, and for that matter, MSG itself is a great topic for another day. But your actual question, we can readily answer here and now:

- Firstly, by “salt” we’re assuming from context that you mean sodium chloride.

- Both salt and MSG do contain sodium. However…

- MSG contains only about a third of the sodium that salt does, gram-for-gram.

- It’s still wise to be mindful of it, though. Same with sodium in other ingredients!

- Baking soda contains about twice as much sodium, gram for gram, as MSG.

Wondering why this happens?

Salt (sodium chloride, NaCl) is equal parts sodium and chlorine, by atom count, but sodium’s atomic mass is lower than chlorine’s, so 100g of salt contains only 39.34g of sodium.

Baking soda (sodium bicarbonate, NaHCO₃) is one part sodium for one part hydrogen, one part carbon, and three parts oxygen. Taking each of their diverse atomic masses into account, we see that 100g of baking soda contains 27.4g sodium.

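MSG (monosodium glutamate, C₅H₈NO₄Na) is only one part sodium for 5 parts carbon, 8 parts hydrogen, 1 part nitrogen, and 4 parts oxygen… And all those other atoms put together weigh a lot (comparatively). Food-grade MSG is also normally sold as the monohydrate (C₅H₈NO₄Na·H₂O), which carries one water molecule per unit, so 100g of MSG contains only 12.28g sodium. (For the anhydrous form, the figure is about 13.6g.)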
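The arithmetic above can be checked with a short script. This is a minimal sketch, assuming rounded standard atomic weights (Na 22.990, Cl 35.45, H 1.008, C 12.011, N 14.007, O 15.999); other atomic-weight tables differ slightly in the last decimal place. It includes both the anhydrous MSG formula and the monohydrate (C₅H₈NO₄Na·H₂O), the form food-grade MSG is usually sold in, because the 12.28g figure matches the monohydrate while the anhydrous formula gives about 13.6g.

```python
# Rounded standard atomic weights in g/mol (assumed values; other tables
# differ slightly in the last decimal place).
ATOMIC_MASS = {
    "Na": 22.990,
    "Cl": 35.45,
    "H": 1.008,
    "C": 12.011,
    "N": 14.007,
    "O": 15.999,
}

# Each compound written as {element: atom count}.
COMPOUNDS = {
    "salt": {"Na": 1, "Cl": 1},                                      # NaCl
    "baking soda": {"Na": 1, "H": 1, "C": 1, "O": 3},                # NaHCO3
    "MSG anhydrous": {"C": 5, "H": 8, "N": 1, "O": 4, "Na": 1},      # C5H8NO4Na
    "MSG monohydrate": {"C": 5, "H": 10, "N": 1, "O": 5, "Na": 1},   # C5H8NO4Na.H2O
}


def molar_mass(formula: dict[str, int]) -> float:
    """Molar mass: each element's atomic mass times its atom count, summed."""
    return sum(ATOMIC_MASS[element] * count for element, count in formula.items())


def sodium_per_100g(formula: dict[str, int]) -> float:
    """Grams of sodium in 100 g of the compound (its sodium mass percentage)."""
    return 100 * ATOMIC_MASS["Na"] * formula.get("Na", 0) / molar_mass(formula)


if __name__ == "__main__":
    for name, formula in COMPOUNDS.items():
        print(f"{name}: {sodium_per_100g(formula):.2f} g sodium per 100 g")

    # Gram-for-gram comparisons, using the monohydrate figure for MSG.
    msg = sodium_per_100g(COMPOUNDS["MSG monohydrate"])
    salt = sodium_per_100g(COMPOUNDS["salt"])
    soda = sodium_per_100g(COMPOUNDS["baking soda"])
    print(f"MSG / salt: {msg / salt:.2f}")
    print(f"baking soda / MSG: {soda / msg:.2f}")
```

Running it should give roughly 39.3, 27.4, 13.6 and 12.3 g of sodium per 100 g, an MSG-to-salt ratio of about 0.31 ("about a third") and a baking-soda-to-MSG ratio of about 2.2 ("about twice"). Small differences in the second decimal place come down to how the atomic weights are rounded.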

Don’t Forget…

Did you arrive here from our newsletter? Don’t forget to return to the email to continue learning!

Recommended

Learn to Age Gracefully

Join the 98k+ American women taking control of their health & aging with our 100% free (and fun!) daily emails:

Cardiac Failure Explained – by Dr. Warrick Bishop

The cover of this book makes it look like it’ll be by a flashy semi-celebrity doctor keen to sell his personalized protocol, along with eleventy-three other books, but what’s inside this one is actually very different:

We (hopefully) all know the basics of heart health, but this book takes it a lot further. It starts with the basics, moves on to the things it’s easy to feel you should know but most people actually don’t, and then goes into much more depth.

The format is much more like a university textbook than most pop-science books, and everything about the way it’s written is geared for maximum learning. The one thing it does share with the pop-science genre is heavy use of anecdotes to illustrate points, but Dr. Bishop is just as likely to use tables, diagrams, callout boxes, emboldening of key points, recap sections, and so forth. For the most part, this book is very information-dense.

Dr. Bishop also doesn’t just stick to what’s average. He talks a lot about aberrations from the norm: what they mean, what they do, and yes, what to do about them.

On the one hand, it’s more information-dense than the average reader is ever likely to need… On the other hand, isn’t it great to finish reading a book feeling like you just did a semester at medical school? No longer will you be baffled by what is going on in your (or perhaps a loved one’s) cardiac health.

Bottom line: if you’d like to know cardiac health inside out, this book is an excellent place to start.

Click here to check out Cardiac Failure Explained, and get to the heart of things!

Get The Right Help For Your Pain

How Much Does It Hurt?

Sometimes, a medical professional will ask us to “rate your pain on a scale of 1–10”.

It can be tempting to avoid rating one’s pain too highly, because if we say “10” then where can we go from there? There is always a way to make pain worse, after all.

But that kind of thinking, however logical, is folly from a practical point of view. To avoid the risk of having to give an 11 later, you end up understating your level-10 pain as a “7”, and the doctor thinks “ok, I’ll give Tylenol instead of morphine”.

A more useful scale

First, know this:

Zero is not “this is the lowest level of pain I get to”.

Zero is “no pain”.

As for the rest…

1. My pain is hardly noticeable.

2. I have a low level of pain; I am aware of my pain only when I pay attention to it.

3. My pain bothers me, but I can ignore it most of the time.

4. I am constantly aware of my pain, but can continue most activities.

5. I think about my pain most of the time; I cannot do some of the activities I need to do each day because of the pain.

6. I think about my pain all of the time; I give up many activities because of my pain.

7. I am in pain all of the time; it keeps me from doing most activities.

8. My pain is so severe that it is difficult to think of anything else. Talking and listening are difficult.

9. My pain is all that I can think about; I can barely move or talk because of my pain.

10. I am in bed and I can’t move due to my pain; I need someone to take me to the emergency room because of my pain.

10almonds tip: are you reading this on your phone? Screenshot the above, and keep it for when you need it!

One extra thing to bear in mind…

On a like-for-like basis, medical staff are more likely to believe that pain is being overstated if you are a woman, or not white, or both.

There are some efforts to compensate for this:

A new government inquiry will examine women’s pain and treatment. How and why is it different?

Some other resources of ours:

- The 7 Approaches To Pain Management ← a pain specialist discusses the options available

- Managing Chronic Pain (Realistically!) ← when there’s no quick fix, but these things can at least buy you some hours’ relief, or stop the pain from getting worse in the moment

- Science-Based Alternative Pain Relief ← for when you’re maxed out on painkillers and need something more or different: these are the things the science says will work

Take care!

Play Bold – by Magnus Penker

This book is very different from what you might expect from the title.

We often see: “play bold, believe in yourself, the universe rewards action” etc… Instead, this one is more: “play bold, pay attention to the data, use these metrics, learn from what these businesses did and what their results were”, etc.

We often see: “here’s an anecdote about a historical figure and/or celebrity who made a tremendous bluff and it worked out well so you should too” etc… Instead, this one is more: “see how what we think of as safety is actually anything but! And how by embracing change quickly (or ideally: proactively), we can stay ahead of disaster that may otherwise hit us”.

Penker’s background is also relevant here. He has decades of experience, having “launched 10 start-ups and acquired, turned around, and sold over 30 SMEs all over Europe”. Importantly, he’s also “still in the game”, unlike many authors whose last experience in the industry was in the 1970s and who wonder why people aren’t reaping the same rewards today!

Penker is therefore the opposite of many who urge people to “play bold” but simply mean “fail fast, fail often”… While quietly relying on their family’s capital and privilege to leave a trail of financial destruction behind them, and simultaneously gloating about their imagined business expertise.

In short: boldness does not equate to foolhardiness, and foolhardiness does not equate to boldness.

As for telling the difference? Well, for that we recommend reading the book; it’s a highly instructive one.

Take The First Bold Step Of Checking Out This Book On Amazon!

Related Posts

Why We Get Fat: And What to Do About It – by Gary Taubes

We’ve previously reviewed Taubes’ “The Case Against Sugar”. What does this one bring that’s different?

Mostly, it’s a different focus. Unsurprisingly, Taubes’ underlying argument is the same: sugar is the biggest dietary health hazard we face. However, this book looks at it specifically through the lens of weight loss, or avoiding weight gain.

Taubes argues for low-carb in general; he doesn’t frame it specifically as the ketogenic diet here, but that is what he is advocating. However, he also acknowledges that not all carbs are created equal, and looks at several categories that are relatively better or worse for our insulin response, and thus, fat management.

If the book has a fault, it’s that it argues a bit too much for eating large quantities of meat, based on Weston Price’s outdated and poorly conducted research. However, if one chooses to disregard that, the arguments for a low-carb diet for weight management remain strong.

Bottom line: if you’d like to cut some fat without eating less (or exercising more), this book offers a good, well-explained guide for doing so.

Endure – by Alex Hutchinson

Life is a marathon, not a sprint. For most of us, at least. But how do we pace ourselves to go the distance, without falling into complacency along the way?

According to our author Alex Hutchinson, there’s a lot more to it than goal-setting and strategy.

Hutchinson set out to write a running manual, and ended up writing a manual for life. To be clear, this is still mostly centered around the science of athletic endurance, but covers the psychological factors as much as the physical… and notes how the capacity to endure is the key trait that underlies great performance in every field.

The writing style is both personal and personable, and parts read like a memoir (Hutchinson himself being a runner and sports journalist), while others are scientific in nature.

As for the science, it runs the gamut from case studies to clinical studies. We examine not just the science of physical endurance, but the science of psychological endurance too. We learn about such things as:

- How perception of ease/difficulty plays its part

- What factors make a difference to pain tolerance

- How mental exhaustion affects physical performance

- What environmental factors increase or lessen our endurance

- …and many other elements that most people don’t consider

Bottom line: whether you want to run a marathon in under two hours, or just not quit after one minute forty seconds on the exercise bike, or to get through a full day’s activities while managing chronic pain, this book can help.

Click here to check out Endure, and find out what you are capable of when you move your limits!

Mental illness, psychiatric disorder or psychological problem. What should we call mental distress?

We talk about mental health more than ever, but the language we should use remains a vexed issue.

Should we call people who seek help patients, clients or consumers? Should we use “person-first” expressions such as person with autism or “identity-first” expressions like autistic person? Should we apply or avoid diagnostic labels?

These questions often stir up strong feelings. Some people feel that patient implies being passive and subordinate. Others think consumer is too transactional, as if seeking help is like buying a new refrigerator.

Advocates of person-first language argue people shouldn’t be defined by their conditions. Proponents of identity-first language counter that these conditions can be sources of meaning and belonging.

Avid users of diagnostic terms see them as useful descriptors. Critics worry that diagnostic labels can box people in and misrepresent their problems as pathologies.

Underlying many of these disagreements are concerns about stigma and the medicalisation of suffering. Ideally the language we use should not cast people who experience distress as defective or shameful, or frame everyday problems of living in psychiatric terms.

Our new research, published in the journal PLOS Mental Health, examines how the language of distress has evolved over nearly 80 years. Here’s what we found.

Generic terms for the class of conditions

Generic terms – such as mental illness, psychiatric disorder or psychological problem – have largely escaped attention in debates about the language of mental ill health. These terms refer to mental health conditions as a class.

Many terms are currently in circulation, each an adjective followed by a noun. Popular adjectives include mental, mental health, psychiatric and psychological, and common nouns include condition, disease, disorder, disturbance, illness, and problem. Readers can encounter every combination.

These terms and their components differ in their connotations. Disease and illness sound the most medical, whereas condition, disturbance and problem need not relate to health. Mental implies a direct contrast with physical, whereas psychiatric implicates a medical specialty.

Mental health problem, a recently emerging term, is arguably the least pathologising. It implies that something is to be solved rather than treated, makes no direct reference to medicine, and carries the positive connotations of health rather than the negative connotation of illness or disease.

Arguably, this development points to what cognitive scientist Steven Pinker calls the “euphemism treadmill”, the tendency for language to evolve new terms to escape (at least temporarily) the offensive connotations of those they replace.

English linguist Hazel Price argues that mental health has increasingly come to replace mental illness to avoid the stigma associated with that term.

How has usage changed over time?

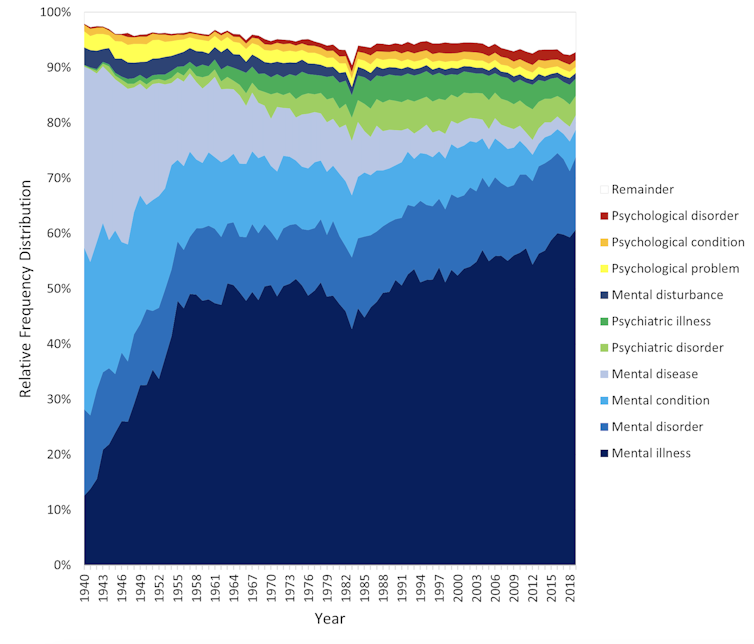

In the PLOS Mental Health paper, we examine historical changes in the popularity of 24 generic terms: every combination of the nouns and adjectives listed above.
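The count of 24 follows directly from pairing each of the four adjectives with each of the six nouns listed earlier (4 × 6 = 24). A minimal sketch of that enumeration, using the component lists exactly as the article gives them; the function name is purely illustrative:

```python
from itertools import product

# Components of the generic terms, as listed in the article.
ADJECTIVES = ["mental", "mental health", "psychiatric", "psychological"]
NOUNS = ["condition", "disease", "disorder", "disturbance", "illness", "problem"]


def generic_terms() -> list[str]:
    """Every adjective + noun pairing, e.g. 'mental illness'."""
    return [f"{adj} {noun}" for adj, noun in product(ADJECTIVES, NOUNS)]


if __name__ == "__main__":
    terms = generic_terms()
    print(len(terms))  # prints 24 (4 adjectives x 6 nouns)
    print(", ".join(terms))
```

`itertools.product` yields every (adjective, noun) pair, so terms such as "mental health problem" and "psychiatric disorder" both appear in the list.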

We explore the frequency with which each term appears from 1940 to 2019 in two massive text data sets representing books in English and diverse American English sources, respectively. The findings are very similar in both data sets.

The figure presents the relative popularity of the top ten terms in the larger data set (Google Books). The 14 least popular terms are combined into the remainder.
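The grouping described here (the ten most frequent terms shown individually, the other 14 pooled) is a simple aggregation. Below is a minimal sketch, assuming a term's relative popularity is its frequency as a share of all terms' combined frequency for a given period. The function name `top_n_with_remainder` is hypothetical, this is not the authors' analysis code, and the demo counts are placeholders rather than data from the study.

```python
def top_n_with_remainder(counts: dict[str, float], n: int = 10) -> dict[str, float]:
    """Share of the total for each of the n most frequent terms,
    plus one pooled 'remainder' share for all other terms."""
    total = sum(counts.values())
    if total <= 0:
        raise ValueError("counts must sum to a positive number")
    # Rank terms from most to least frequent.
    ranked = sorted(counts.items(), key=lambda item: item[1], reverse=True)
    shares = {term: freq / total for term, freq in ranked[:n]}
    # Everything below the top n is pooled into a single share.
    shares["remainder"] = sum(freq for _, freq in ranked[n:]) / total
    return shares


if __name__ == "__main__":
    # Placeholder counts for 24 hypothetical terms; NOT data from the study.
    demo = {f"term {i}": i for i in range(1, 25)}
    for term, share in top_n_with_remainder(demo).items():
        print(f"{term}: {share:.1%}")
```

With 24 terms and `n=10`, the function returns 11 shares that sum to 1: ten individual terms plus a remainder covering the other 14, mirroring how the figure is described.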

Relative popularity of alternative generic terms in the Google Books corpus (Haslam et al., 2024, PLOS Mental Health).

Several trends appear. Mental has consistently been the most popular adjective component of the generic terms. Mental health has become more popular in recent years but is still rarely used.

Among nouns, disease has become less widely used while illness has become dominant. Although disorder is the official term in psychiatric classifications, it has not been broadly adopted in public discourse.

Since 1940, mental illness has clearly become the preferred generic term. Although an assortment of alternatives have emerged, it has steadily risen in popularity.

Does it matter?

Our study documents striking shifts in the popularity of generic terms, but do these changes matter? The answer may be: not much.

One study found people think mental disorder, mental illness and mental health problem refer to essentially identical phenomena.

Other studies indicate that labelling a person as having a mental disease, mental disorder, mental health problem, mental illness or psychological disorder makes no difference to people’s attitudes toward them.

We don’t yet know if there are other implications of using different generic terms, but the evidence to date suggests they are minimal.

Is ‘distress’ any better?

Recently, some writers have promoted distress as an alternative to traditional generic terms. It lacks medical connotations and emphasises the person’s subjective experience rather than whether they fit an official diagnosis.

Distress appears 65 times in the 2022 Victorian Mental Health and Wellbeing Act, usually in the expression “mental illness or psychological distress”. By implication, distress is a broad concept akin to but not synonymous with mental ill health.

But is distress destigmatising, as it was intended to be? Apparently not. According to one study, it was more stigmatising than its alternatives. The term may turn us away from other people’s suffering by amplifying it.

So what should we call it?

Mental illness is easily the most popular generic term and its popularity has been rising. Research indicates different terms have little or no effect on stigma and some terms intended to destigmatise may backfire.

We suggest that mental illness should be embraced and the proliferation of alternative terms such as mental health problem, which breed confusion, should end.

Critics might argue mental illness imposes a medical frame. Philosopher Zsuzsanna Chappell disagrees. Illness, she argues, refers to subjective first-person experience, not to an objective, third-person pathology, like disease.

Properly understood, the concept of illness centres the individual and their connections. “When I identify my suffering as illness-like,” Chappell writes, “I wish to lay claim to a caring interpersonal relationship.”

As generic terms go, mental illness is a healthy option.

Nick Haslam, Professor of Psychology, The University of Melbourne and Naomi Baes, Researcher – Social Psychology/ Natural Language Processing, The University of Melbourne

This article is republished from The Conversation under a Creative Commons license. Read the original article.
